Mirror of https://github.com/SillyTavern/SillyTavern.git (synced 2025-06-05 21:59:27 +02:00)

Compare commits

25 Commits

| SHA1 |
|---|
| da76933c95 |
| 74d99e09da |
| 8da082ff8d |
| 7e59745dfc |
| 3e4e1ba96a |
| 6557abcd07 |
| db439be897 |
| a656783b15 |
| fde5f7af84 |
| 454994a7bd |
| 843e7a8363 |
| 849c82b6f7 |
| a4aba352e7 |
| 1bfb5637b0 |
| d72f3bb35e |
| bd2bcf6e9d |
| b823d40df6 |
| b1acf1532e |
| 1ec3352f39 |
| 6bb44b95b0 |
| 2b54d21617 |
| 08a25d2fbf |
| d01bee97ad |
| ee2ecd6d4b |
| 33042f6dea |

`.github/ISSUE_TEMPLATE/bug_report.md` (vendored, 1 changed line)

@@ -30,6 +30,7 @@ Providing the logs from the browser DevTools console (opened by pressing the F12

**Desktop (please complete the following information):**
- OS/Device: [e.g. Windows 11]
- Environment: [cloud, local]
- Node.js version (if applicable): [run `node --version` in cmd]
- Browser [e.g. chrome, safari]
- Generation API [e.g. KoboldAI, OpenAI]
- Branch [main, dev]

`.github/workflows/build-and-publish-release-main.yml` (vendored, new normal file, 46 lines)

@@ -0,0 +1,46 @@

name: Build and Publish Release (Main)

on:
  push:
    branches:
      - main

jobs:
  build_and_publish:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v2

      - name: Set up Node.js
        uses: actions/setup-node@v2
        with:
          node-version: 18

      - name: Install dependencies
        run: npm ci

      - name: Build and package with pkg
        run: |
          npm install -g pkg
          npm run pkg

      - name: Create or update release
        id: create_release
        uses: actions/create-release@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          tag_name: continuous-release-main
          release_name: Continuous Release (Main)
          draft: false
          prerelease: true

      - name: Upload binaries to release
        uses: softprops/action-gh-release@v1
        with:
          files: dist/*
          release_id: ${{ steps.create_release.outputs.id }}
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

@@ -98,7 +98,7 @@

"!git clone https://github.com/Cohee1207/tts_samples\n",
"!npm install -g localtunnel\n",
"!pip install -r requirements-complete.txt\n",
"!pip install tensorflow==2.11\n",
"!pip install tensorflow==2.12\n",
"\n",
"\n",
"cmd = f\"python server.py {' '.join(params)}\"\n",

@@ -1,12 +1,13 @@

version: "3"
services:
  tavernai:
  sillytavern:
    build: ..
    container_name: tavernai
    hostname: tavernai
    image: tavernai/tavernai:latest
    container_name: sillytavern
    hostname: sillytavern
    image: cohee1207/sillytavern:latest
    ports:
      - "8000:8000"
    volumes:
      - "./config:/home/node/app/config"
    restart: unless-stopped
      - "./config.conf:/home/node/app/config.conf"
    restart: unless-stopped

`package-lock.json` (generated, 4 changed lines)

@@ -1,12 +1,12 @@

{
  "name": "sillytavern",
  "version": "1.5.3",
  "version": "1.5.4",
  "lockfileVersion": 3,
  "requires": true,
  "packages": {
    "": {
      "name": "sillytavern",
      "version": "1.5.3",
      "version": "1.5.4",
      "license": "AGPL-3.0",
      "dependencies": {
        "@dqbd/tiktoken": "^1.0.2",

@@ -40,7 +40,7 @@

    "type": "git",
    "url": "https://github.com/Cohee1207/SillyTavern.git"
  },
  "version": "1.5.3",
  "version": "1.5.4",
  "scripts": {
    "start": "node server.js"
  },

@@ -521,7 +521,7 @@ class Client {

console.log(`Sending message to ${chatbot}: ${message}`);

const messageData = await this.send_query("AddHumanMessageMutation", {
const messageData = await this.send_query("SendMessageMutation", {
    "bot": chatbot,
    "query": message,
    "chatId": this.bots[chatbot]["chatId"],

@@ -531,14 +531,14 @@ class Client {

delete this.active_messages["pending"];

if (!messageData["data"]["messageCreateWithStatus"]["messageLimit"]["canSend"]) {
if (!messageData["data"]["messageEdgeCreate"]["message"]) {
    throw new Error(`Daily limit reached for ${chatbot}.`);
}

let humanMessageId;
try {
    const humanMessage = messageData["data"]["messageCreateWithStatus"];
    humanMessageId = humanMessage["message"]["messageId"];
    const humanMessage = messageData["data"]["messageEdgeCreate"]["message"];
    humanMessageId = humanMessage["node"]["messageId"];
} catch (error) {
    throw new Error(`An unknown error occured. Raw response data: ${messageData}`);
}
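The hunk above switches the client from `AddHumanMessageMutation` to `SendMessageMutation`, reads the new message ID from `data.messageEdgeCreate.message.node.messageId`, and treats a missing `message` as the daily limit being reached. A minimal sketch of that extraction as a standalone function follows; `extractHumanMessageId` and the sample payload are hypothetical, and the response shape is assumed to match the `messageEdgeCreate` selection set added in this diff. One deliberate difference: the sketch serializes the payload with `JSON.stringify`, because interpolating an object into a template literal only yields `[object Object]`.

```javascript
// Hypothetical helper mirroring the checks in the updated Client code:
// a missing `message` is reported as the daily limit, otherwise return the node's ID.
function extractHumanMessageId(chatbot, messageData) {
    const edge = messageData?.data?.messageEdgeCreate;
    if (!edge?.message) {
        throw new Error(`Daily limit reached for ${chatbot}.`);
    }
    const messageId = edge.message.node?.messageId;
    if (messageId === undefined) {
        // JSON.stringify keeps the raw payload readable in the error;
        // interpolating the object directly prints "[object Object]".
        throw new Error(`An unknown error occurred. Raw response data: ${JSON.stringify(messageData)}`);
    }
    return messageId;
}

// Sample payload shaped like the messageEdgeCreate selection set (values invented).
const ok = { data: { messageEdgeCreate: { message: { cursor: "0", node: { messageId: 42 } } } } };
console.log(extractHumanMessageId("example-bot", ok)); // 42
```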

`poe_graphql/SendMessageMutation.graphql` (new normal file, 40 lines)

@@ -0,0 +1,40 @@

mutation chatHelpers_sendMessageMutation_Mutation(
  $chatId: BigInt!
  $bot: String!
  $query: String!
  $source: MessageSource
  $withChatBreak: Boolean!
) {
  messageEdgeCreate(chatId: $chatId, bot: $bot, query: $query, source: $source, withChatBreak: $withChatBreak) {
    chatBreak {
      cursor
      node {
        id
        messageId
        text
        author
        suggestedReplies
        creationTime
        state
      }
      id
    }
    message {
      cursor
      node {
        id
        messageId
        text
        author
        suggestedReplies
        creationTime
        state
        chat {
          shouldShowDisclaimer
          id
        }
      }
      id
    }
  }
}
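The new operation declares four non-null variables (`$chatId: BigInt!`, `$bot: String!`, `$query: String!`, `$withChatBreak: Boolean!`) and one nullable one (`$source: MessageSource`). A sketch of packaging them into a GraphQL-over-HTTP JSON body, assuming the conventional `operationName`/`query`/`variables` fields; `buildSendMessageBody` is hypothetical, defaulting `withChatBreak` to `false` is an assumption, and how the client's `send_query` actually builds its request is not shown in this diff. The sketch also assumes the server accepts `chatId` as a plain JSON number, since `JSON.stringify` cannot serialize a JavaScript `BigInt`.

```javascript
// Hypothetical request-body builder for chatHelpers_sendMessageMutation_Mutation.
// Assumes the conventional GraphQL-over-HTTP JSON shape; not taken from the client.
function buildSendMessageBody(mutationText, { chatId, bot, query, source = null, withChatBreak = false }) {
    // $chatId, $bot, $query and $withChatBreak are declared non-null (!),
    // so fail early instead of letting the server reject the request.
    const required = { chatId, bot, query, withChatBreak };
    for (const [name, value] of Object.entries(required)) {
        if (value === undefined || value === null) {
            throw new Error(`Missing required variable $${name}`);
        }
    }
    return JSON.stringify({
        operationName: "chatHelpers_sendMessageMutation_Mutation",
        query: mutationText,
        // $source is nullable (MessageSource without "!"), so null is allowed.
        variables: { chatId, bot, query, source, withChatBreak },
    });
}

// Placeholder text; in practice this would be the contents of the .graphql file.
const mutationText = "mutation chatHelpers_sendMessageMutation_Mutation(...) { ... }";
const requestBody = buildSendMessageBody(mutationText, { chatId: 123, bot: "example-bot", query: "Hello" });
console.log(JSON.parse(requestBody).variables);
```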

`public/TextGen Settings/LLaMa-Precise.settings` (new normal file, 15 lines)

@@ -0,0 +1,15 @@

{
    "temp": 0.7,
    "top_p": 0.1,
    "top_k": 40,
    "typical_p": 1,
    "rep_pen": 1.18,
    "no_repeat_ngram_size": 0,
    "penalty_alpha": 0,
    "num_beams": 1,
    "length_penalty": 1,
    "min_length": 200,
    "encoder_rep_pen": 1,
    "do_sample": true,
    "early_stopping": false
}

`public/backgrounds/_black.jpg` (new normal file, binary)

Binary file not shown (new image, 7.9 KiB).

`public/backgrounds/_white.jpg` (new normal file, binary)

Binary file not shown (new image, 7.5 KiB).

@@ -1369,7 +1369,12 @@ function getExtensionPrompt(position = 0, depth = undefined, separator = "\n") {

function baseChatReplace(value, name1, name2) {
    if (value !== undefined && value.length > 0) {
        value = substituteParams(value, is_pygmalion ? "You" : name1, name2);
        if (is_pygmalion) {
            value = value.replace(/{{user}}:/gi, 'You:');
            value = value.replace(/<USER>:/gi, 'You:');
        }

        value = substituteParams(value, name1, name2);

        if (power_user.collapse_newlines) {
            value = collapseNewlines(value);
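In the updated `baseChatReplace`, Pygmalion mode rewrites only the speaker prefixes `{{user}}:` and `<USER>:` to `You:`; the remaining placeholders are then filled with the real names. The sketch below reproduces that ordering. `substituteParamsSketch` and `baseChatReplaceSketch` are hypothetical; the former stands in for SillyTavern's `substituteParams` and is assumed to replace only `{{user}}`/`<USER>` and `{{char}}`/`<BOT>`. Braces are escaped in the sketch's regexes; the unescaped form in the hunk is also accepted by non-Unicode JavaScript regexes.

```javascript
// Hypothetical stand-in for substituteParams: swaps only the user/char
// placeholders (an assumption; the real function may do more).
function substituteParamsSketch(text, userName, charName) {
    return text
        .replace(/\{\{user\}\}/gi, userName)
        .replace(/<USER>/gi, userName)
        .replace(/\{\{char\}\}/gi, charName)
        .replace(/<BOT>/gi, charName);
}

// Mirrors the new ordering: in Pygmalion mode, speaker prefixes become
// "You:" first, then every remaining placeholder gets the real name.
function baseChatReplaceSketch(value, name1, name2, isPygmalion) {
    if (value === undefined || value.length === 0) {
        return value;
    }
    if (isPygmalion) {
        value = value.replace(/\{\{user\}\}:/gi, "You:");
        value = value.replace(/<USER>:/gi, "You:");
    }
    return substituteParamsSketch(value, name1, name2);
}

const example = "{{user}}: Hi, {{char}}!\n{{char}}: Hello, {{user}}.";
console.log(baseChatReplaceSketch(example, "Alice", "Bot", true));
// You: Hi, Bot!
// Bot: Hello, Alice.
```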

@@ -2738,7 +2743,7 @@ function saveReply(type, getMessage, this_mes_is_name, title) {

} else {
    item['swipe_id'] = 0;
    item['swipes'] = [];
    item['swipes'][0] = chat[chat.length - 1]['mes'];
    item['swipes'][0] = chat[chat.length - 1]['mes'];
}

return { type, getMessage };

@@ -555,13 +555,19 @@ async function sendOpenAIRequest(type, openai_msgs_tosend, signal) {

const decoder = new TextDecoder();
const reader = response.body.getReader();
let getMessage = "";
let messageBuffer = "";
while (true) {
    const { done, value } = await reader.read();
    let response = decoder.decode(value);

    tryParseStreamingError(response);

    let eventList = response.split("\n");

    // ReadableStream's buffer is not guaranteed to contain full SSE messages as they arrive in chunks
    // We need to buffer chunks until we have one or more full messages (separated by double newlines)
    messageBuffer += response;
    let eventList = messageBuffer.split("\n\n");
    // Last element will be an empty string or a leftover partial message
    messageBuffer = eventList.pop();

    for (let event of eventList) {
        if (!event.startsWith("data"))
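The comments in the hunk above describe the fix: a `ReadableStream` chunk can end partway through a server-sent event, so chunks are accumulated in `messageBuffer`, split on blank lines (`\n\n`), and the trailing fragment is kept for the next read. The buffering step is sketched below as pure functions so it can be exercised without a network stream; `splitSseBuffer` and `dataPayloads` are hypothetical names, and stripping the `data:` prefix with a regular expression is an assumption about the event format rather than code from `sendOpenAIRequest`.

```javascript
// Hypothetical helper: append the new chunk, split on blank lines,
// and keep the trailing fragment for the next read.
function splitSseBuffer(buffer, chunk) {
    const events = (buffer + chunk).split("\n\n");
    // The last element is "" when the chunk ended exactly on a boundary,
    // otherwise it is an incomplete event that must wait for more data.
    const rest = events.pop();
    return { events, rest };
}

// Extracts the payload of each "data" event, ignoring other SSE fields.
function dataPayloads(events) {
    return events
        .filter((event) => event.startsWith("data"))
        .map((event) => event.replace(/^data:\s?/, ""));
}

// Simulate a stream whose first chunk ends in the middle of an event.
const chunks = ['data: {"a":1}\n\ndata: {"b"', ':2}\n\ndata: [DONE]\n\n'];
let buffer = "";
const received = [];
for (const chunk of chunks) {
    const { events, rest } = splitSseBuffer(buffer, chunk);
    buffer = rest;
    received.push(...dataPayloads(events));
}
console.log(received); // [ '{"a":1}', '{"b":2}', '[DONE]' ]
```

Because the split always pops the last element, an event is only emitted once its terminating blank line has arrived. In this sketch, a stream that ends without a final `\n\n` leaves its last event in `buffer`, so a real reader loop would need to decide what to do with it when `done` is true.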

@@ -527,6 +527,7 @@ code {

    grid-column-start: 4;
    flex-flow: column;
    font-size: 30px;
    cursor: pointer;
}

.swipe_right img,

@@ -65,6 +65,8 @@ Get in touch with the developers directly:

* Character emotional expressions
* Auto-Summary of the chat history
* Sending images to chat, and the AI interpreting the content.
* Stable Diffusion image generation (5 chat-related presets plus 'free mode')
* Text-to-speech for AI response messages (via ElevenLabs, Silero, or the OS's System TTS)

## UI Extensions 🚀

@@ -76,6 +78,8 @@ Get in touch with the developers directly:

|

||||

| D&D Dice | A set of 7 classic D&D dice for all your dice rolling needs.<br><br>*I used to roll the dice.<br>Feel the fear in my enemies' eyes* | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/226199925-a066c6fc-745e-4a2b-9203-1cbffa481b14.png"> |

|

||||

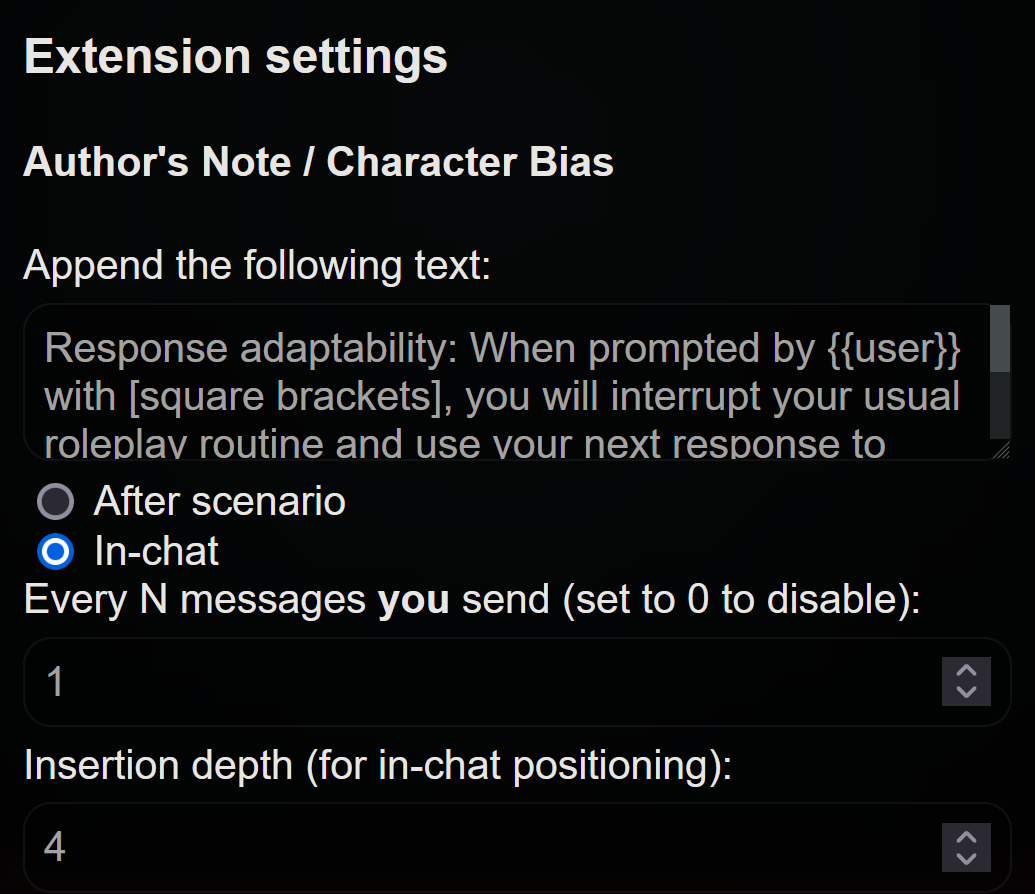

| Author's Note | Built-in extension that allows you to append notes that will be added to the context and steer the story and character in a specific direction. Because it's sent after the character description, it has a lot of weight. Thanks Ali឵#2222 for pitching the idea! | None |  |

|

||||

| Character Backgrounds | Built-in extension to assign unique backgrounds to specific chats or groups. | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/233494454-bfa7c9c7-4faa-4d97-9c69-628fd96edd92.png"> |

|

||||

| Stable Diffusion | Use local of cloud-based Stable Diffusion webUI API to generate images. 5 presets included ('you', 'your face', 'me', 'the story', and 'the last message'. Free mode also supported via `/sd (anything_here_)` command in the chat input bar. Most common StableDiffusion generation settings are customizable within the SillyTavern UI. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/ppata8.png"> |

|

||||

| Text-to-Speech | AI-generated voice will read back character messages on demand, or automatically read new messages they arrive. Supports ElevenLabs, Silero, and your device's TTS service. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/o3wxkk.png"> |

|

||||

|

||||

## UI/CSS/Quality of Life tweaks by RossAscends

|

||||

|

||||

@@ -136,8 +140,8 @@ Easy to follow guide with pretty pictures:

5. Open a Command Prompt inside that folder by clicking in the 'Address Bar' at the top, typing `cmd`, and pressing Enter.
6. Once the black box (Command Prompt) pops up, type ONE of the following into it and press Enter:

* for Main Branch: `git clone <https://github.com/Cohee1207/SillyTavern> -b main`
* for Dev Branch: `git clone <https://github.com/Cohee1207/SillyTavern> -b dev`
* for Main Branch: `git clone https://github.com/Cohee1207/SillyTavern -b main`
* for Dev Branch: `git clone https://github.com/Cohee1207/SillyTavern -b dev`

7. Once everything is cloned, double click `Start.bat` to make NodeJS install its requirements.
8. The server will then start, and SillyTavern will popup in your browser.

`server.js` (10 changed lines)

@@ -860,7 +860,7 @@ async function charaWrite(img_url, data, target_img, response = undefined, mes =

let rawImg = await jimp.read(img_url);

// Apply crop if defined
if (typeof crop == 'object') {
if (typeof crop == 'object' && [crop.x, crop.y, crop.width, crop.height].every(x => typeof x === 'number')) {
    rawImg = rawImg.crop(crop.x, crop.y, crop.width, crop.height);
}
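The new condition in `charaWrite` only calls `rawImg.crop` when `crop.x`, `crop.y`, `crop.width` and `crop.height` are all numbers. A sketch of that check as a standalone predicate follows; `isValidCrop` is hypothetical and slightly stricter than the hunk. It rejects `null` explicitly, because `typeof null === 'object'` and the condition in the hunk would therefore throw a `TypeError` on `crop.x` if `crop` were `null`. It also uses `Number.isFinite`, which additionally rules out `NaN` and `Infinity`.

```javascript
// Hypothetical predicate in the spirit of the new charaWrite condition:
// crop only when x, y, width and height are all usable numbers.
function isValidCrop(crop) {
    // typeof null is "object", so reject null before reading its properties.
    if (typeof crop !== "object" || crop === null) {
        return false;
    }
    // Number.isFinite does not coerce strings and rejects NaN and Infinity.
    return [crop.x, crop.y, crop.width, crop.height].every((v) => Number.isFinite(v));
}

console.log(isValidCrop({ x: 0, y: 0, width: 400, height: 600 })); // true
console.log(isValidCrop({ x: "0", y: 0, width: 400, height: 600 })); // false
console.log(isValidCrop(null)); // false
```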

@@ -2437,7 +2437,13 @@ app.post("/openai_bias", jsonParser, async function (request, response) {

// Shamelessly stolen from Agnai
app.post("/openai_usage", jsonParser, async function (request, response) {
    if (!request.body) return response.sendStatus(400);
    const key = request.body.key;
    const key = readSecret(SECRET_KEYS.OPENAI);

    if (!key) {
        console.warn('Get key usage failed: Missing OpenAI API key.');
        return response.sendStatus(401);
    }

    const api_url = new URL(request.body.reverse_proxy || api_openai).toString();

    const headers = {
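After this change the `/openai_usage` route no longer takes the key from the request body: it reads it server-side with `readSecret(SECRET_KEYS.OPENAI)`, answers `401` when none is stored, and builds the API URL from an optional `reverse_proxy`, falling back to `api_openai`. The sketch below isolates that decision logic from Express so it can be tested directly. `resolveUsageTarget` is hypothetical, the key reader is injected as a function, and `https://api.openai.com/v1` is only an assumed default for illustration. The rest of the route, including the `headers` object, is cut off in this diff and is not modeled here.

```javascript
// Hypothetical, framework-free version of the checks at the top of /openai_usage.
// readOpenAiKey stands in for readSecret(SECRET_KEYS.OPENAI).
function resolveUsageTarget(body, readOpenAiKey, defaultApiUrl) {
    if (!body) {
        return { status: 400 };
    }
    // The key comes from server-side storage, never from the request body.
    const key = readOpenAiKey();
    if (!key) {
        return { status: 401 };
    }
    // An optional reverse proxy overrides the default base URL.
    // new URL() throws a TypeError if the value is not an absolute URL.
    const apiUrl = new URL(body.reverse_proxy || defaultApiUrl).toString();
    return { status: 200, key, apiUrl };
}

const defaultUrl = "https://api.openai.com/v1"; // assumed default, for illustration only
console.log(resolveUsageTarget({}, () => "sk-example", defaultUrl).apiUrl); // https://api.openai.com/v1
console.log(resolveUsageTarget({ reverse_proxy: "https://proxy.example.com" }, () => "sk-example", defaultUrl).apiUrl); // https://proxy.example.com/
console.log(resolveUsageTarget({}, () => undefined, defaultUrl).status); // 401
```

Returning a status object instead of calling `response.sendStatus` keeps the logic testable; in the route itself each early return corresponds to one `sendStatus` call.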
