mirror of

https://github.com/SillyTavern/SillyTavern.git

synced 2025-06-05 21:59:27 +02:00

Compare commits

56 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | da76933c95 | | |
| | 74d99e09da | | |
| | 8da082ff8d | | |
| | 7e59745dfc | | |
| | 3e4e1ba96a | | |
| | 6557abcd07 | | |
| | db439be897 | | |
| | a656783b15 | | |
| | fde5f7af84 | | |
| | 454994a7bd | | |
| | 843e7a8363 | | |
| | 849c82b6f7 | | |
| | a4aba352e7 | | |
| | 1bfb5637b0 | | |
| | d72f3bb35e | | |
| | bd2bcf6e9d | | |
| | b823d40df6 | | |
| | b1acf1532e | | |
| | 1ec3352f39 | | |
| | 6bb44b95b0 | | |
| | 2b54d21617 | | |
| | 08a25d2fbf | | |
| | d01bee97ad | | |
| | ee2ecd6d4b | | |
| | 33042f6dea | | |
| | 419afc783e | | |
| | 88a726d9ad | | |
| | bd6255c758 | | |
| | 07e7028269 | | |
| | a36c843752 | | |
| | 36803cf473 | | |
| | 6c2a52dfff | | |
| | 03d5c5ed2a | | |
| | a26e835e64 | | |
| | 3a1bf3ef81 | | |
| | da62edb0cc | | |
| | 3643bc58f2 | | |
| | 035e8033f3 | | |
| | aeea230e8f | | |
| | 334b654338 | | |
| | 7b2b000c0a | | |
| | 531414df0d | | |
| | 62434d41b9 | | |
| | 92328583a4 | | |
| | 89520ebd84 | | |
| | 502421e756 | | |
| | 3e95adc2fa | | |
| | 039fd8d6c9 | | |
| | 45b6c95633 | | |
| | 567caf7ef6 | | |
| | 4264bebe13 | | |
| | bfaf8e6aa1 | | |
| | 6c971386b2 | | |
| | b752cd0228 | | |
| | 6d3abe2cf0 | | |
| | a08a899f35 | | |

1

.github/ISSUE_TEMPLATE/bug_report.md

vendored


@@ -30,6 +30,7 @@ Providing the logs from the browser DevTools console (opened by pressing the F12

|

||||

**Desktop (please complete the following information):**

|

||||

- OS/Device: [e.g. Windows 11]

|

||||

- Environment: [cloud, local]

|

||||

- Node.js version (if applicable): [run `node --version` in cmd]

|

||||

- Browser [e.g. chrome, safari]

|

||||

- Generation API [e.g. KoboldAI, OpenAI]

|

||||

- Branch [main, dev]

|

||||

|

||||

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored


@@ -1,7 +1,7 @@

|

||||

---

|

||||

name: Feature request

|

||||

about: Suggest an idea for this project

|

||||

title: ''

|

||||

title: "[Feature Request] "

|

||||

labels: ''

|

||||

assignees: ''

|

||||

|

||||

|

||||

46

.github/workflows/build-and-publish-release-main.yml

vendored

Normal file


@@ -0,0 +1,46 @@

name: Build and Publish Release (Main)

on:
  push:
    branches:
      - main

jobs:
  build_and_publish:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v2

      - name: Set up Node.js
        uses: actions/setup-node@v2
        with:
          node-version: 18

      - name: Install dependencies
        run: npm ci

      - name: Build and package with pkg
        run: |
          npm install -g pkg
          npm run pkg

      - name: Create or update release
        id: create_release
        uses: actions/create-release@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          tag_name: continuous-release-main
          release_name: Continuous Release (Main)
          draft: false
          prerelease: true

      - name: Upload binaries to release
        uses: softprops/action-gh-release@v1
        with:
          files: dist/*
          release_id: ${{ steps.create_release.outputs.id }}
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

@@ -16,6 +16,7 @@ Method 1 - GIT

|

||||

We always recommend users install using 'git'. Here's why:

|

||||

|

||||

When you have installed via `git clone`, all you have to do to update is type `git pull` in a command line in the ST folder.

|

||||

Alternatively, if the command prompt gives you problems (and you have GitHub Desktop installed), you can use the 'Repository' menu and select 'Pull'.

|

||||

The updates are applied automatically and safely.

|

||||

|

||||

Method 2 - ZIP

|

||||

@@ -52,4 +53,4 @@ If you insist on installing via a zip, here is the tedious process for doing the

|

||||

|

||||

6. Start SillyTavern once again with the method appropriate to your OS, and pray you got it right.

|

||||

|

||||

7. If everything shows up, you can safely delete the old ST folder.

|

||||

7. If everything shows up, you can safely delete the old ST folder.

|

||||

|

||||

@@ -10,6 +10,19 @@

|

||||

"SillyTavern community Discord (support and discussion): https://discord.gg/RZdyAEUPvj"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"#@title <-- Tap this if you run on Mobile { display-mode: \"form\" }\n",

|

||||

"#Taken from KoboldAI colab\n",

|

||||

"%%html\n",

|

||||

"<b>Press play on the audio player to keep the tab alive. (Uses only 13MB of data)</b><br/>\n",

|

||||

"<audio src=\"https://henk.tech/colabkobold/silence.m4a\" controls>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

@@ -42,10 +55,13 @@

|

||||

"#@markdown Enables Silero text-to-speech module\n",

|

||||

"extras_enable_sd = True #@param {type:\"boolean\"}\n",

|

||||

"#@markdown Enables SD picture generation\n",

|

||||

"SD_Model = \"ckpt/anything-v4.5-vae-swapped\" #@param [ \"ckpt/anything-v4.5-vae-swapped\", \"philz1337/clarity\", \"ckpt/sd15\" ]\n",

|

||||

"SD_Model = \"ckpt/anything-v4.5-vae-swapped\" #@param [ \"ckpt/anything-v4.5-vae-swapped\", \"hakurei/waifu-diffusion\", \"philz1337/clarity\", \"prompthero/openjourney\", \"ckpt/sd15\", \"stabilityai/stable-diffusion-2-1-base\" ]\n",

|

||||

"#@markdown * ckpt/anything-v4.5-vae-swapped - anime style model\n",

|

||||

"#@markdown * hakurei/waifu-diffusion - anime style model\n",

|

||||

"#@markdown * philz1337/clarity - realistic style model\n",

|

||||

"#@markdown * prompthero/openjourney - midjourney style model\n",

|

||||

"#@markdown * ckpt/sd15 - base SD 1.5\n",

|

||||

"#@markdown * stabilityai/stable-diffusion-2-1-base - base SD 2.1\n",

|

||||

"\n",

|

||||

"import subprocess\n",

|

||||

"\n",

|

||||

@@ -79,9 +95,10 @@

|

||||

"%cd /\n",

|

||||

"!git clone https://github.com/Cohee1207/SillyTavern-extras\n",

|

||||

"%cd /SillyTavern-extras\n",

|

||||

"!git clone https://github.com/Cohee1207/tts_samples\n",

|

||||

"!npm install -g localtunnel\n",

|

||||

"!pip install -r requirements-complete.txt\n",

|

||||

"!pip install tensorflow==2.11\n",

|

||||

"!pip install tensorflow==2.12\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"cmd = f\"python server.py {' '.join(params)}\"\n",

|

||||

|

||||

@@ -1,12 +1,13 @@

|

||||

version: "3"

|

||||

services:

|

||||

tavernai:

|

||||

sillytavern:

|

||||

build: ..

|

||||

container_name: tavernai

|

||||

hostname: tavernai

|

||||

image: tavernai/tavernai:latest

|

||||

container_name: sillytavern

|

||||

hostname: sillytavern

|

||||

image: cohee1207/sillytavern:latest

|

||||

ports:

|

||||

- "8000:8000"

|

||||

volumes:

|

||||

- "./config:/home/node/app/config"

|

||||

restart: unless-stopped

|

||||

- "./config.conf:/home/node/app/config.conf"

|

||||

restart: unless-stopped

|

||||

|

||||

4

package-lock.json

generated


@@ -1,12 +1,12 @@

|

||||

{

|

||||

"name": "sillytavern",

|

||||

"version": "1.5.1",

|

||||

"version": "1.5.4",

|

||||

"lockfileVersion": 3,

|

||||

"requires": true,

|

||||

"packages": {

|

||||

"": {

|

||||

"name": "sillytavern",

|

||||

"version": "1.5.1",

|

||||

"version": "1.5.4",

|

||||

"license": "AGPL-3.0",

|

||||

"dependencies": {

|

||||

"@dqbd/tiktoken": "^1.0.2",

|

||||

|

||||

@@ -40,7 +40,7 @@

|

||||

"type": "git",

|

||||

"url": "https://github.com/Cohee1207/SillyTavern.git"

|

||||

},

|

||||

"version": "1.5.1",

|

||||

"version": "1.5.4",

|

||||

"scripts": {

|

||||

"start": "node server.js"

|

||||

},

|

||||

|

||||

@@ -521,7 +521,7 @@ class Client {

|

||||

|

||||

console.log(`Sending message to ${chatbot}: ${message}`);

|

||||

|

||||

const messageData = await this.send_query("AddHumanMessageMutation", {

|

||||

const messageData = await this.send_query("SendMessageMutation", {

|

||||

"bot": chatbot,

|

||||

"query": message,

|

||||

"chatId": this.bots[chatbot]["chatId"],

|

||||

@@ -531,14 +531,14 @@ class Client {

|

||||

|

||||

delete this.active_messages["pending"];

|

||||

|

||||

if (!messageData["data"]["messageCreateWithStatus"]["messageLimit"]["canSend"]) {

|

||||

if (!messageData["data"]["messageEdgeCreate"]["message"]) {

|

||||

throw new Error(`Daily limit reached for ${chatbot}.`);

|

||||

}

|

||||

|

||||

let humanMessageId;

|

||||

try {

|

||||

const humanMessage = messageData["data"]["messageCreateWithStatus"];

|

||||

humanMessageId = humanMessage["message"]["messageId"];

|

||||

const humanMessage = messageData["data"]["messageEdgeCreate"]["message"];

|

||||

humanMessageId = humanMessage["node"]["messageId"];

|

||||

} catch (error) {

|

||||

throw new Error(`An unknown error occured. Raw response data: ${messageData}`);

|

||||

}

|

||||

|

||||
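Review note: the hunk above moves the human-message lookup from `messageCreateWithStatus` to `messageEdgeCreate.message.node`. The sketch below restates that lookup as a standalone function, assuming the response shape implied by the diff. `extractHumanMessageIdSketch` is a hypothetical name, not part of the change. Two details are assumptions rather than what the hunk does: optional chaining makes a missing intermediate field fall into the "daily limit" branch instead of raising a `TypeError`, and `JSON.stringify` is used so the raw payload stays readable in the error text.

```javascript
// Hypothetical helper, not part of the diff: mirrors the two checks in the hunk above.
function extractHumanMessageIdSketch(messageData, chatbot) {
    // Assumed shape: { data: { messageEdgeCreate: { message: { node: { messageId } } } } }
    const message = messageData?.data?.messageEdgeCreate?.message;
    if (!message) {
        throw new Error(`Daily limit reached for ${chatbot}.`);
    }

    const messageId = message.node?.messageId;
    if (messageId === undefined) {
        // Assumption: serialize the payload; interpolating the object directly prints "[object Object]".
        throw new Error(`An unknown error occurred. Raw response data: ${JSON.stringify(messageData)}`);
    }
    return messageId;
}
```

Called with a well-formed response it returns the `messageId`; called with `message: null` it throws the daily-limit error.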

40

poe_graphql/SendMessageMutation.graphql

Normal file


@@ -0,0 +1,40 @@

mutation chatHelpers_sendMessageMutation_Mutation(
  $chatId: BigInt!
  $bot: String!
  $query: String!
  $source: MessageSource
  $withChatBreak: Boolean!
) {
  messageEdgeCreate(chatId: $chatId, bot: $bot, query: $query, source: $source, withChatBreak: $withChatBreak) {
    chatBreak {
      cursor
      node {
        id
        messageId
        text
        author
        suggestedReplies
        creationTime
        state
      }
      id
    }
    message {
      cursor
      node {
        id
        messageId
        text
        author
        suggestedReplies
        creationTime
        state
        chat {
          shouldShowDisclaimer
          id
        }
      }
      id
    }
  }
}

15

public/TextGen Settings/LLaMa-Precise.settings

Normal file


@@ -0,0 +1,15 @@

{
    "temp": 0.7,
    "top_p": 0.1,
    "top_k": 40,
    "typical_p": 1,
    "rep_pen": 1.18,
    "no_repeat_ngram_size": 0,
    "penalty_alpha": 0,
    "num_beams": 1,
    "length_penalty": 1,
    "min_length": 200,
    "encoder_rep_pen": 1,
    "do_sample": true,
    "early_stopping": false
}

BIN

public/backgrounds/_black.jpg

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 7.9 KiB |

BIN

public/backgrounds/_white.jpg

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 7.5 KiB |

@@ -176,7 +176,7 @@

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

|

||||

<div class="max_context_unlocked_block">

|

||||

<label class="checkbox_label">

|

||||

<input id="max_context_unlocked" type="checkbox" />

|

||||

@@ -185,7 +185,7 @@

|

||||

<b class="neutral_warning">ATTENTION!</b>

|

||||

Only select models support context sizes greater than 2048 tokens.

|

||||

Proceed only if you know what you're doing.

|

||||

</div>

|

||||

</div>

|

||||

</label>

|

||||

</div>

|

||||

</div>

|

||||

@@ -1012,7 +1012,7 @@

|

||||

<input id="horde_api_key" name="horde_api_key" class="text_pole flex1" maxlength="500" type="text" placeholder="0000000000">

|

||||

<div title="Clear your API key" class="menu_button fa-solid fa-circle-xmark clear-api-key" data-key="api_key_horde"></div>

|

||||

</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will hidden after you reload the page.</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will be hidden after you reload the page.</div>

|

||||

<h4 class="horde_model_title">

|

||||

Model

|

||||

<div id="horde_refresh" title="Refresh models" class="right_menu_button">

|

||||

@@ -1046,7 +1046,7 @@

|

||||

<input id="api_key_novel" name="api_key_novel" class="text_pole flex1" maxlength="500" size="35" type="text">

|

||||

<div title="Clear your API key" class="menu_button fa-solid fa-circle-xmark clear-api-key" data-key="api_key_novel"></div>

|

||||

</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will hidden after you reload the page.</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will be hidden after you reload the page.</div>

|

||||

<input id="api_button_novel" class="menu_button" type="submit" value="Connect">

|

||||

<div id="api_loading_novel" class="api-load-icon fa-solid fa-hourglass fa-spin"></div>

|

||||

<h4>Novel AI Model

|

||||

@@ -1103,7 +1103,7 @@

|

||||

<input id="api_key_openai" name="api_key_openai" class="text_pole flex1" maxlength="500" value="" type="text">

|

||||

<div title="Clear your API key" class="menu_button fa-solid fa-circle-xmark clear-api-key" data-key="api_key_openai"></div>

|

||||

</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will hidden after you reload the page.</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will be hidden after you reload the page.</div>

|

||||

<input id="api_button_openai" class="menu_button" type="submit" value="Connect">

|

||||

<div id="api_loading_openai" class=" api-load-icon fa-solid fa-hourglass fa-spin"></div>

|

||||

</form>

|

||||

@@ -1143,7 +1143,7 @@

|

||||

<input id="poe_token" class="text_pole flex1" type="text" maxlength="100" />

|

||||

<div title="Clear your API key" class="menu_button fa-solid fa-circle-xmark clear-api-key" data-key="api_key_poe"></div>

|

||||

</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will hidden after you reload the page.</div>

|

||||

<div class="neutral_warning">For privacy reasons, your API key will be hidden after you reload the page.</div>

|

||||

</div>

|

||||

|

||||

<input id="poe_connect" class="menu_button" type="button" value="Connect" />

|

||||

@@ -2032,7 +2032,7 @@

|

||||

<hr>

|

||||

<h4>Personality summary</h4>

|

||||

<h5>A brief description of the personality <a href="/notes#personalitysummary" class="notes-link" target="_blank"><span class="note-link-span">?</span></a></h5>

|

||||

<textarea id="personality_textarea" name="personality" placeholder="" form="form_create" class="text_pole" autocomplete="off" rows="2"></textarea>

|

||||

<textarea id="personality_textarea" name="personality" placeholder="" form="form_create" class="text_pole" autocomplete="off" rows="2" maxlength="20000"></textarea>

|

||||

</div>

|

||||

|

||||

<div id="scenario_div">

|

||||

@@ -2042,7 +2042,7 @@

|

||||

<span class="note-link-span">?</span>

|

||||

</a>

|

||||

</h5>

|

||||

<textarea id="scenario_pole" name="scenario" class="text_pole" maxlength="9999" value="" autocomplete="off" form="form_create" rows="2"></textarea>

|

||||

<textarea id="scenario_pole" name="scenario" class="text_pole" maxlength="20000" value="" autocomplete="off" form="form_create" rows="2"></textarea>

|

||||

</div>

|

||||

|

||||

<div id="talkativeness_div">

|

||||

@@ -2062,7 +2062,7 @@

|

||||

<h4>Examples of dialogue</h4>

|

||||

<h5>Forms a personality more clearly <a href="/notes#examplesofdialogue" class="notes-link" target="_blank"><span class="note-link-span">?</span></a></h5>

|

||||

</div>

|

||||

<textarea id="mes_example_textarea" name="mes_example" placeholder="" form="form_create"></textarea>

|

||||

<textarea id="mes_example_textarea" name="mes_example" placeholder="" form="form_create" maxlength="20000"></textarea>

|

||||

</div>

|

||||

<div id="character_popup_ok" class="menu_button">Save</div>

|

||||

|

||||

|

||||

@@ -22,6 +22,7 @@ You definitely installed via git, so just 'git pull' inside the SillyTavern dire

|

||||

We always recommend users install using 'git'. Here's why:

|

||||

|

||||

When you have installed via 'git clone', all you have to do to update is type 'git pull' in a command line in the ST folder.

|

||||

Alternatively, if the command prompt gives you problems (and you have GitHub Desktop installed), you can use the 'Repository' menu and select 'Pull'.

|

||||

The updates are applied automatically and safely.

|

||||

|

||||

### Method 2 - ZIP

|

||||

|

||||

131

public/script.js


@@ -1369,7 +1369,12 @@ function getExtensionPrompt(position = 0, depth = undefined, separator = "\n") {

|

||||

|

||||

function baseChatReplace(value, name1, name2) {

|

||||

if (value !== undefined && value.length > 0) {

|

||||

value = substituteParams(value, is_pygmalion ? "You:" : name1, name2);

|

||||

if (is_pygmalion) {

|

||||

value = value.replace(/{{user}}:/gi, 'You:');

|

||||

value = value.replace(/<USER>:/gi, 'You:');

|

||||

}

|

||||

|

||||

value = substituteParams(value, name1, name2);

|

||||

|

||||

if (power_user.collapse_newlines) {

|

||||

value = collapseNewlines(value);

|

||||
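Review note: in Pygmalion mode the hunk above rewrites the `{{user}}:` and `<USER>:` speaker prefixes to `You:` before the general parameter substitution runs. A minimal sketch of just that rewrite, with `pygmalionUserPrefixSketch` as a hypothetical name:

```javascript
// Hypothetical helper, not part of the diff: the two case-insensitive prefix
// replacements from the hunk above, isolated from substituteParams().
function pygmalionUserPrefixSketch(value) {
    return value
        .replace(/{{user}}:/gi, 'You:')
        .replace(/<USER>:/gi, 'You:');
}
```

Only the form followed by a colon is rewritten, so a bare `{{user}}` in running text is left for the later substitution step.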

@@ -1386,9 +1391,10 @@ function appendToStoryString(value, prefix) {

|

||||

}

|

||||

|

||||

function isStreamingEnabled() {

|

||||

return (main_api == 'openai' && oai_settings.stream_openai)

|

||||

return ((main_api == 'openai' && oai_settings.stream_openai)

|

||||

|| (main_api == 'poe' && poe_settings.streaming)

|

||||

|| (main_api == 'textgenerationwebui' && textgenerationwebui_settings.streaming);

|

||||

|| (main_api == 'textgenerationwebui' && textgenerationwebui_settings.streaming))

|

||||

&& !isMultigenEnabled(); // Multigen has a quasi-streaming mode which breaks the real streaming

|

||||

}

|

||||

|

||||

class StreamingProcessor {

|

||||
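Review note: the added outer parentheses matter because `&&` binds tighter than `||`. Without them the multigen check would apply only to the last alternative. The sketch below shows the grouped form as a pure function; the flat argument object and the name `streamingEnabledSketch` are assumptions made for illustration, where the real code reads module-level settings.

```javascript
// Hypothetical pure-function version of the grouped condition in the hunk above.
function streamingEnabledSketch({ api, openaiStream, poeStream, textgenStream, multigen }) {
    // Group the per-API checks first ...
    const apiStreams =
        (api === 'openai' && openaiStream) ||
        (api === 'poe' && poeStream) ||
        (api === 'textgenerationwebui' && textgenStream);
    // ... then apply the multigen exclusion to the whole group.
    return Boolean(apiStreams) && !multigen;
}
```

With `api: 'openai'`, `openaiStream: true` and `multigen: true`, the grouped form returns `false`; the ungrouped expression would have returned `true`.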

@@ -1568,7 +1574,18 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

|

||||

const isImpersonate = type == "impersonate";

|

||||

const isInstruct = power_user.instruct.enabled;

|

||||

message_already_generated = isImpersonate ? `${name1}: ` : `${name2}: `;

|

||||

|

||||

// Name for the multigen prefix

|

||||

const magName = isImpersonate ? (is_pygmalion ? 'You' : name1) : name2;

|

||||

|

||||

if (isInstruct) {

|

||||

message_already_generated = formatInstructModePrompt(magName, isImpersonate);

|

||||

} else {

|

||||

message_already_generated = `${magName}: `;

|

||||

}

|

||||

|

||||

// To trim after multigen ended

|

||||

const magFirst = message_already_generated;

|

||||

|

||||

const interruptedByCommand = processCommands($("#send_textarea").val(), type);

|

||||

|

||||

@@ -1596,6 +1613,14 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

hideSwipeButtons();

|

||||

}

|

||||

|

||||

// Set empty promise resolution functions

|

||||

if (typeof resolve !== 'function') {

|

||||

resolve = () => {};

|

||||

}

|

||||

if (typeof reject !== 'function') {

|

||||

reject = () => {};

|

||||

}

|

||||

|

||||

if (selected_group && !is_group_generating) {

|

||||

generateGroupWrapper(false, type, { resolve, reject, quiet_prompt, force_chid });

|

||||

return;

|

||||
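Review note: the hunk above defaults `resolve` and `reject` to no-ops so that later code can call them unconditionally, which is what lets the later hunks drop their `type == 'quiet'` guard around `reject`. A minimal sketch of the pattern, with `withDefaultCallbacksSketch` as a hypothetical name:

```javascript
// Hypothetical helper, not part of the diff: replace missing callbacks with no-ops.
function withDefaultCallbacksSketch({ resolve, reject } = {}) {
    if (typeof resolve !== 'function') {
        resolve = () => {};
    }
    if (typeof reject !== 'function') {
        reject = () => {};
    }
    return { resolve, reject };
}
```

Callers that pass real promise callbacks get them back unchanged; callers that pass nothing get functions that are safe to invoke.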

@@ -1994,8 +2019,16 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

const isBottom = j === mesSend.length - 1;

|

||||

mesSendString += mesSend[j];

|

||||

|

||||

// Add quiet generation prompt at depth 0

|

||||

if (isBottom && quiet_prompt && quiet_prompt.length) {

|

||||

const name = is_pygmalion ? 'You' : name1;

|

||||

const quietAppend = isInstruct ? formatInstructModeChat(name, quiet_prompt, true) : `\n${name}: ${quiet_prompt}`;

|

||||

mesSendString += quietAppend;

|

||||

}

|

||||

|

||||

if (isInstruct && isBottom && tokens_already_generated === 0) {

|

||||

mesSendString += formatInstructModePrompt(isImpersonate);

|

||||

const name = isImpersonate ? (is_pygmalion ? 'You' : name1) : name2;

|

||||

mesSendString += formatInstructModePrompt(name, isImpersonate);

|

||||

}

|

||||

|

||||

if (!isInstruct && isImpersonate && isBottom && tokens_already_generated === 0) {

|

||||

@@ -2148,11 +2181,6 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

}

|

||||

}

|

||||

|

||||

// Add quiet generation prompt at depth 0

|

||||

if (quiet_prompt && quiet_prompt.length) {

|

||||

finalPromt += `\n${quiet_prompt}`;

|

||||

}

|

||||

|

||||

finalPromt = finalPromt.replace(/\r/gm, '');

|

||||

|

||||

if (power_user.collapse_newlines) {

|

||||

@@ -2267,20 +2295,6 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

hideSwipeButtons();

|

||||

let getMessage = await streamingProcessor.generate();

|

||||

|

||||

if (isMultigenEnabled()) {

|

||||

tokens_already_generated += this_amount_gen; // add new gen amt to any prev gen counter..

|

||||

message_already_generated += getMessage;

|

||||

promptBias = '';

|

||||

if (!streamingProcessor.isStopped && shouldContinueMultigen(getMessage)) {

|

||||

streamingProcessor.isFinished = false;

|

||||

runGenerate(getMessage);

|

||||

console.log('returning to make generate again');

|

||||

return;

|

||||

}

|

||||

|

||||

getMessage = message_already_generated;

|

||||

}

|

||||

|

||||

if (streamingProcessor && !streamingProcessor.isStopped && streamingProcessor.isFinished) {

|

||||

streamingProcessor.onFinishStreaming(streamingProcessor.messageId, getMessage);

|

||||

streamingProcessor = null;

|

||||

@@ -2303,16 +2317,23 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

|

||||

let this_mes_is_name;

|

||||

({ this_mes_is_name, getMessage } = extractNameFromMessage(getMessage, force_name2, isImpersonate));

|

||||

if (tokens_already_generated == 0) {

|

||||

console.log("New message");

|

||||

({ type, getMessage } = saveReply(type, getMessage, this_mes_is_name, title));

|

||||

}

|

||||

else {

|

||||

console.log("Should append message");

|

||||

({ type, getMessage } = saveReply('append', getMessage, this_mes_is_name, title));

|

||||

|

||||

if (!isImpersonate) {

|

||||

if (tokens_already_generated == 0) {

|

||||

console.log("New message");

|

||||

({ type, getMessage } = saveReply(type, getMessage, this_mes_is_name, title));

|

||||

}

|

||||

else {

|

||||

console.log("Should append message");

|

||||

({ type, getMessage } = saveReply('append', getMessage, this_mes_is_name, title));

|

||||

}

|

||||

} else {

|

||||

let chunk = cleanUpMessage(message_already_generated, true);

|

||||

let extract = extractNameFromMessage(chunk, force_name2, isImpersonate);

|

||||

$('#send_textarea').val(extract.getMessage).trigger('input');

|

||||

}

|

||||

|

||||

if (shouldContinueMultigen(getMessage)) {

|

||||

if (shouldContinueMultigen(getMessage, isImpersonate)) {

|

||||

hideSwipeButtons();

|

||||

tokens_already_generated += this_amount_gen; // add new gen amt to any prev gen counter..

|

||||

getMessage = message_already_generated;

|

||||

@@ -2321,7 +2342,7 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

return;

|

||||

}

|

||||

|

||||

getMessage = message_already_generated;

|

||||

getMessage = message_already_generated.substring(magFirst.length);

|

||||

}

|

||||

|

||||

//Formating

|

||||

@@ -2332,6 +2353,7 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

if (getMessage.length > 0) {

|

||||

if (isImpersonate) {

|

||||

$('#send_textarea').val(getMessage).trigger('input');

|

||||

generatedPromtCache = "";

|

||||

}

|

||||

else if (type == 'quiet') {

|

||||

resolve(getMessage);

|

||||

@@ -2379,13 +2401,14 @@ async function Generate(type, { automatic_trigger, force_name2, resolve, reject,

|

||||

showSwipeButtons();

|

||||

setGenerationProgress(0);

|

||||

$('.mes_buttons:last').show();

|

||||

|

||||

if (type !== 'quiet') {

|

||||

resolve();

|

||||

}

|

||||

};

|

||||

|

||||

function onError(jqXHR, exception) {

|

||||

if (type == 'quiet') {

|

||||

reject(exception);

|

||||

}

|

||||

|

||||

reject(exception);

|

||||

$("#send_textarea").removeAttr('disabled');

|

||||

is_send_press = false;

|

||||

activateSendButtons();

|

||||

@@ -2480,12 +2503,24 @@ function getGenerateUrl() {

|

||||

return generate_url;

|

||||

}

|

||||

|

||||

function shouldContinueMultigen(getMessage) {

|

||||

const nameString = is_pygmalion ? 'You:' : `${name1}:`;

|

||||

return message_already_generated.indexOf(nameString) === -1 && //if there is no 'You:' in the response msg

|

||||

message_already_generated.indexOf('<|endoftext|>') === -1 && //if there is no <endoftext> stamp in the response msg

|

||||

tokens_already_generated < parseInt(amount_gen) && //if the gen'd msg is less than the max response length..

|

||||

getMessage.length > 0; //if we actually have gen'd text at all...

|

||||

function shouldContinueMultigen(getMessage, isImpersonate) {

|

||||

if (power_user.instruct.enabled && power_user.instruct.stop_sequence) {

|

||||

if (message_already_generated.indexOf(power_user.instruct.stop_sequence) !== -1) {

|

||||

return false;

|

||||

}

|

||||

}

|

||||

|

||||

// stopping name string

|

||||

const nameString = isImpersonate ? `${name2}:` : (is_pygmalion ? 'You:' : `${name1}:`);

|

||||

// if there is no 'You:' in the response msg

|

||||

const doesNotContainName = message_already_generated.indexOf(nameString) === -1;

|

||||

//if there is no <endoftext> stamp in the response msg

|

||||

const isNotEndOfText = message_already_generated.indexOf('<|endoftext|>') === -1;

|

||||

//if the gen'd msg is less than the max response length..

|

||||

const notReachedMax = tokens_already_generated < parseInt(amount_gen);

|

||||

//if we actually have gen'd text at all...

|

||||

const msgHasText = getMessage.length > 0;

|

||||

return doesNotContainName && isNotEndOfText && notReachedMax && msgHasText;

|

||||

}

|

||||

|

||||

function extractNameFromMessage(getMessage, force_name2, isImpersonate) {

|

||||
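Review note: the rewritten `shouldContinueMultigen` is easier to reason about as a pure function. The sketch below restates its conditions with every input passed explicitly; the parameter names and `shouldContinueSketch` are assumptions for illustration, where the real function reads `message_already_generated`, `tokens_already_generated`, `amount_gen` and `power_user.instruct`.

```javascript
// Hypothetical pure-function restatement of the stop conditions in the hunk above.
function shouldContinueSketch({ generated, latestChunk, tokensSoFar, maxTokens, stopName, stopSequence }) {
    // Instruct mode: a configured stop sequence in the output ends generation.
    if (stopSequence && generated.includes(stopSequence)) {
        return false;
    }
    const doesNotContainName = !generated.includes(stopName);     // the other speaker has not started talking
    const isNotEndOfText = !generated.includes('<|endoftext|>');  // no end-of-text marker
    const notReachedMax = tokensSoFar < maxTokens;                // still under the response budget
    const msgHasText = latestChunk.length > 0;                    // the last request produced something
    return doesNotContainName && isNotEndOfText && notReachedMax && msgHasText;
}
```

In the hunk the stop name depends on `isImpersonate`: the character's name when impersonating, otherwise `You:` or the user's name.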

@@ -2601,6 +2636,12 @@ function cleanUpMessage(getMessage, isImpersonate) {

|

||||

getMessage = getMessage.substring(0, getMessage.indexOf(power_user.instruct.stop_sequence));

|

||||

}

|

||||

}

|

||||

if (power_user.instruct.enabled && power_user.instruct.input_sequence && isImpersonate) {

|

||||

getMessage = getMessage.replaceAll(power_user.instruct.input_sequence, '');

|

||||

}

|

||||

if (power_user.instruct.enabled && power_user.instruct.output_sequence && !isImpersonate) {

|

||||

getMessage = getMessage.replaceAll(power_user.instruct.output_sequence, '');

|

||||

}

|

||||

// clean-up group message from excessive generations

|

||||

if (selected_group) {

|

||||

getMessage = cleanGroupMessage(getMessage);

|

||||
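Review note: the two added blocks strip leftover instruct-mode markers from the reply, the input sequence when impersonating and the output sequence otherwise. A minimal sketch, assuming an `instruct` object with the same field names as `power_user.instruct`; the function name and the example markers in the usage note are hypothetical.

```javascript
// Hypothetical helper, not part of the diff: removes the instruct marker that
// corresponds to the side being generated.
function stripInstructSequencesSketch(message, instruct, isImpersonate) {
    if (instruct.enabled && instruct.input_sequence && isImpersonate) {
        message = message.replaceAll(instruct.input_sequence, '');
    }
    if (instruct.enabled && instruct.output_sequence && !isImpersonate) {
        message = message.replaceAll(instruct.output_sequence, '');
    }
    return message;
}
```

For example, with `output_sequence` set to `### Response:` a normal reply loses that marker, while an impersonated reply keeps it and loses the input marker instead.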

@@ -2699,6 +2740,10 @@ function saveReply(type, getMessage, this_mes_is_name, title) {

|

||||

const item = chat[chat.length - 1];

|

||||

if (item['swipe_id'] !== undefined) {

|

||||

item['swipes'][item['swipes'].length - 1] = item['mes'];

|

||||

} else {

|

||||

item['swipe_id'] = 0;

|

||||

item['swipes'] = [];

|

||||

item['swipes'][0] = chat[chat.length - 1]['mes'];

|

||||

}

|

||||

|

||||

return { type, getMessage };

|

||||

|

||||
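Review note: the added `else` branch initializes swipe data for a message that has none yet, instead of leaving `swipes` undefined. A minimal sketch of the resulting logic on a single chat item; `syncSwipesSketch` is a hypothetical name and the item shape is assumed from the field names in the hunk.

```javascript
// Hypothetical helper, not part of the diff: keep item.swipes in step with item.mes.
function syncSwipesSketch(item) {
    if (item.swipe_id !== undefined) {
        // Existing swipe list: overwrite the last entry with the current text.
        item.swipes[item.swipes.length - 1] = item.mes;
    } else {
        // No swipe data yet: start a list holding the current text.
        item.swipe_id = 0;
        item.swipes = [item.mes];
    }
    return item;
}
```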

@@ -237,9 +237,17 @@ function getQuietPrompt(mode, trigger) {

|

||||

function processReply(str) {

|

||||

str = str.replaceAll('"', '')

|

||||

str = str.replaceAll('“', '')

|

||||

str = str.replaceAll('\n', ' ')

|

||||

str = str.replaceAll('\n', ', ')

|

||||

str = str.replace(/[^a-zA-Z0-9,:]+/g, ' ') // Replace everything except alphanumeric characters and commas with spaces

|

||||

str = str.replace(/\s+/g, ' '); // Collapse multiple whitespaces into one

|

||||

str = str.trim();

|

||||

|

||||

str = str

|

||||

.split(',') // list split by commas

|

||||

.map(x => x.trim()) // trim each entry

|

||||

.filter(x => x) // remove empty entries

|

||||

.join(', '); // join it back with proper spacing

|

||||

|

||||

return str;

|

||||

}

|

||||

|

||||
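Review note: the sketch below runs the cleanup steps listed in the hunk above in the order shown, using the comma-producing newline replacement, so the effect on a sample reply can be checked in isolation. `processReplySketch` is a hypothetical name; which of the listed lines are additions and which are context is not recoverable from this view, so treating all of them as the final sequence is an assumption.

```javascript
// Hypothetical standalone copy of the cleanup steps, not the extension's actual export.
function processReplySketch(str) {
    str = str.replaceAll('"', '');
    str = str.replaceAll('“', '');
    str = str.replaceAll('\n', ', ');
    str = str.replace(/[^a-zA-Z0-9,:]+/g, ' '); // keep only alphanumerics, commas and colons
    str = str.replace(/\s+/g, ' ');             // collapse runs of whitespace
    str = str.trim();

    return str
        .split(',')            // list split by commas
        .map(x => x.trim())    // trim each entry
        .filter(x => x)        // remove empty entries
        .join(', ');           // join back with consistent spacing
}
```

The split and filter pass is what removes the empty entries that doubled commas or blank lines would otherwise leave in the Stable Diffusion prompt.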

@@ -259,7 +267,7 @@ async function generatePicture(_, trigger) {

|

||||

const prompt = processReply(await new Promise(

|

||||

async function promptPromise(resolve, reject) {

|

||||

try {

|

||||

await context.generate('quiet', { resolve, reject, quiet_prompt });

|

||||

await context.generate('quiet', { resolve, reject, quiet_prompt, force_name2: true, });

|

||||

}

|

||||

catch {

|

||||

reject();

|

||||

@@ -269,6 +277,8 @@ async function generatePicture(_, trigger) {

|

||||

context.deactivateSendButtons();

|

||||

hideSwipeButtons();

|

||||

|

||||

console.log('Processed Stable Diffusion prompt:', prompt);

|

||||

|

||||

const url = new URL(getApiUrl());

|

||||

url.pathname = '/api/image';

|

||||

const result = await fetch(url, {

|

||||

@@ -295,7 +305,7 @@ async function generatePicture(_, trigger) {

|

||||

sendMessage(prompt, base64Image);

|

||||

}

|

||||

} catch (err) {

|

||||

console.error(err);

|

||||

console.trace(err);

|

||||

throw new Error('SD prompt text generation failed.')

|

||||

}

|

||||

finally {

|

||||

|

||||

@@ -48,10 +48,8 @@ async function moduleWorker() {

|

||||

return;

|

||||

}

|

||||

|

||||

// Chat/character/group changed

|

||||

// Chat changed

|

||||

if (

|

||||

(context.groupId && lastGroupId !== context.groupId) ||

|

||||

context.characterId !== lastCharacterId ||

|

||||

context.chatId !== lastChatId

|

||||

) {

|

||||

currentMessageNumber = context.chat.length ? context.chat.length : 0

|

||||

@@ -241,9 +239,17 @@ async function processTtsQueue() {

|

||||

|

||||

console.debug('New message found, running TTS')

|

||||

currentTtsJob = ttsJobQueue.shift()

|

||||

const text = extension_settings.tts.narrate_dialogues_only

|

||||

let text = extension_settings.tts.narrate_dialogues_only

|

||||

? currentTtsJob.mes.replace(/\*[^\*]*?(\*|$)/g, '').trim() // remove asterisks content

|

||||

: currentTtsJob.mes.replaceAll('*', '').trim() // remove just the asterisks

|

||||

|

||||

if (extension_settings.tts.narrate_quoted_only) {

|

||||

const special_quotes = /[“”]/g; // Extend this regex to include other special quotes

|

||||

text = text.replace(special_quotes, '"');

|

||||

const matches = text.match(/".*?"/g); // Matches text inside double quotes, non-greedily

|

||||

text = matches ? matches.join(' ... ... ... ') : text;

|

||||

}

|

||||

console.log(`TTS: ${text}`)

|

||||

const char = currentTtsJob.name

|

||||

|

||||

try {

|

||||
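Review note: the new `narrate_quoted_only` branch normalizes curly quotes to straight ones, keeps only the double-quoted spans, and joins them with a pause marker. If no quoted span is found, the text is left unchanged. A minimal sketch of that branch as a pure function, with `extractQuotedSketch` as a hypothetical name:

```javascript
// Hypothetical helper, not part of the diff: isolates the quoted-only filtering.
function extractQuotedSketch(text) {
    const specialQuotes = /[“”]/g;                 // curly double quotes
    text = text.replace(specialQuotes, '"');
    const matches = text.match(/".*?"/g);          // non-greedy: one match per quoted span
    return matches ? matches.join(' ... ... ... ') : text;
}
```

Because `.` does not match newlines, a quotation that spans several lines is not captured by this pattern.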

@@ -288,6 +294,7 @@ function loadSettings() {

|

||||

extension_settings.tts.enabled

|

||||

)

|

||||

$('#tts_narrate_dialogues').prop('checked', extension_settings.tts.narrate_dialogues_only)

|

||||

$('#tts_narrate_quoted').prop('checked', extension_settings.tts.narrate_quoted_only)

|

||||

}

|

||||

|

||||

const defaultSettings = {

|

||||

@@ -380,6 +387,13 @@ function onNarrateDialoguesClick() {

|

||||

saveSettingsDebounced()

|

||||

}

|

||||

|

||||

|

||||

function onNarrateQuotedClick() {

|

||||

extension_settings.tts.narrate_quoted_only = $('#tts_narrate_quoted').prop('checked');

|

||||

saveSettingsDebounced()

|

||||

}

|

||||

|

||||

|

||||

//##############//

|

||||

// TTS Provider //

|

||||

//##############//

|

||||

@@ -459,6 +473,10 @@ $(document).ready(function () {

|

||||

<input type="checkbox" id="tts_narrate_dialogues">

|

||||

Narrate dialogues only

|

||||

</label>

|

||||

<label class="checkbox_label" for="tts_narrate_quoted">

|

||||

<input type="checkbox" id="tts_narrate_quoted">

|

||||

Narrate quoted only

|

||||

</label>

|

||||

</div>

|

||||

<label>Voice Map</label>

|

||||

<textarea id="tts_voice_map" type="text" class="text_pole textarea_compact" rows="4"

|

||||

@@ -481,6 +499,7 @@ $(document).ready(function () {

|

||||

$('#tts_apply').on('click', onApplyClick)

|

||||

$('#tts_enabled').on('click', onEnableClick)

|

||||

$('#tts_narrate_dialogues').on('click', onNarrateDialoguesClick);

|

||||

$('#tts_narrate_quoted').on('click', onNarrateQuotedClick);

|

||||

$('#tts_voices').on('click', onTtsVoicesClick)

|

||||

$('#tts_provider_settings').on('input', onTtsProviderSettingsInput)

|

||||

for (const provider in ttsProviders) {

|

||||

|

||||

@@ -46,6 +46,7 @@ import {

|

||||

menu_type,

|

||||

select_selected_character,

|

||||

cancelTtsPlay,

|

||||

isMultigenEnabled,

|

||||

} from "../script.js";

|

||||

import { appendTagToList, createTagMapFromList, getTagsList, applyTagsOnCharacterSelect } from './tags.js';

|

||||

|

||||

@@ -426,7 +427,7 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

let lastMessageText = lastMessage.mes;

|

||||

let activationText = "";

|

||||

let isUserInput = false;

|

||||

let isQuietGenDone = false;

|

||||

let isGenerationDone = false;

|

||||

|

||||

if (userInput && userInput.length && !by_auto_mode) {

|

||||

isUserInput = true;

|

||||

@@ -438,6 +439,23 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

}

|

||||

}

|

||||

|

||||

const resolveOriginal = params.resolve;

|

||||

const rejectOriginal = params.reject;

|

||||

|

||||

if (typeof params.resolve === 'function') {

|

||||

params.resolve = function () {

|

||||

isGenerationDone = true;

|

||||

resolveOriginal.apply(this, arguments);

|

||||

};

|

||||

}

|

||||

|

||||

if (typeof params.reject === 'function') {

|

||||

params.reject = function () {

|

||||

isGenerationDone = true;

|

||||

rejectOriginal.apply(this, arguments);

|

||||

}

|

||||

}

|

||||

|

||||

const activationStrategy = Number(group.activation_strategy ?? group_activation_strategy.NATURAL);

|

||||

let activatedMembers = [];

|

||||

|

||||
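
The callback wrapping introduced here (set a flag, then delegate to the original `resolve`/`reject`) can be sketched as a small helper. This is an illustration only: `trackCompletion` and the `state` object are hypothetical names, whereas the diff uses a local `isGenerationDone` variable.

```javascript
// Hypothetical helper: wraps params.resolve / params.reject so that
// either call marks the generation as finished before delegating.
function trackCompletion(params) {
    const state = { done: false };
    for (const key of ['resolve', 'reject']) {
        const original = params[key];
        // Only wrap real callbacks, matching the typeof guard in the hunk.
        if (typeof original === 'function') {
            params[key] = function (...args) {
                state.done = true;
                return original.apply(this, args);
            };
        }
    }
    return state;
}
```

The `typeof` guard matters because `params` defaults to `{}`, in which case there is nothing to wrap.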

@@ -450,16 +468,6 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

activatedMembers = activateListOrder(group.members.slice(0, 1));

|

||||

}

|

||||

|

||||

const resolveOriginal = params.resolve;

|

||||

const rejectOriginal = params.reject;

|

||||

params.resolve = function () {

|

||||

isQuietGenDone = true;

|

||||

resolveOriginal.apply(this, arguments);

|

||||

};

|

||||

params.reject = function () {

|

||||

isQuietGenDone = true;

|

||||

rejectOriginal.apply(this, arguments);

|

||||

}

|

||||

}

|

||||

else if (type === "swipe") {

|

||||

activatedMembers = activateSwipe(group.members);

|

||||

@@ -482,13 +490,14 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

|

||||

// now the real generation begins: cycle through every character

|

||||

for (const chId of activatedMembers) {

|

||||

isGenerationDone = false;

|

||||

const generateType = type == "swipe" || type == "impersonate" || type == "quiet" ? type : "group_chat";

|

||||

setCharacterId(chId);

|

||||

setCharacterName(characters[chId].name)

|

||||

|

||||

await Generate(generateType, { automatic_trigger: by_auto_mode, ...(params || {}) });

|

||||

|

||||

if (type !== "swipe" && type !== "impersonate") {

|

||||

if (type !== "swipe" && type !== "impersonate" && !isMultigenEnabled()) {

|

||||

// update indicator and scroll down

|

||||

typingIndicator

|

||||

.find(".typing_indicator_name")

|

||||

@@ -499,9 +508,10 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

});

|

||||

}

|

||||

|

||||

// TODO: This is awful. Refactor this

|

||||

while (true) {

|

||||

// if not swipe - check if message generated already

|

||||

if (type !== "swipe" && chat.length == messagesBefore) {

|

||||

if (type !== "swipe" && !isMultigenEnabled() && chat.length == messagesBefore) {

|

||||

await delay(100);

|

||||

}

|

||||

// if swipe - see if message changed

|

||||

@@ -514,6 +524,13 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

else if (isMultigenEnabled()) {

|

||||

if (isGenerationDone) {

|

||||

break;

|

||||

} else {

|

||||

await delay(100);

|

||||

}

|

||||

}

|

||||

else {

|

||||

if (lastMessageText === chat[chat.length - 1].mes) {

|

||||

await delay(100);

|

||||

@@ -532,6 +549,13 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

break;

|

||||

}

|

||||

}

|

||||

else if (isMultigenEnabled()) {

|

||||

if (isGenerationDone) {

|

||||

break;

|

||||

} else {

|

||||

await delay(100);

|

||||

}

|

||||

}

|

||||

else {

|

||||

if (!$("#send_textarea").val() || $("#send_textarea").val() == userInput) {

|

||||

await delay(100);

|

||||

@@ -542,7 +566,15 @@ async function generateGroupWrapper(by_auto_mode, type = null, params = {}) {

|

||||

}

|

||||

}

|

||||

else if (type === 'quiet') {

|

||||

if (isQuietGenDone) {

|

||||

if (isGenerationDone) {

|

||||

break;

|

||||

} else {

|

||||

await delay(100);

|

||||

}

|

||||

}

|

||||

else if (isMultigenEnabled()) {

|

||||

if (isGenerationDone) {

|

||||

messagesBefore++;

|

||||

break;

|

||||

} else {

|

||||

await delay(100);

|

||||

|

||||

@@ -555,13 +555,19 @@ async function sendOpenAIRequest(type, openai_msgs_tosend, signal) {

|

||||

const decoder = new TextDecoder();

|

||||

const reader = response.body.getReader();

|

||||

let getMessage = "";

|

||||

let messageBuffer = "";

|

||||

while (true) {

|

||||

const { done, value } = await reader.read();

|

||||

let response = decoder.decode(value);

|

||||

|

||||

tryParseStreamingError(response);

|

||||

|

||||

let eventList = response.split("\n");

|

||||

|

||||

// ReadableStream's buffer is not guaranteed to contain full SSE messages as they arrive in chunks

|

||||

// We need to buffer chunks until we have one or more full messages (separated by double newlines)

|

||||

messageBuffer += response;

|

||||

let eventList = messageBuffer.split("\n\n");

|

||||

// Last element will be an empty string or a leftover partial message

|

||||

messageBuffer = eventList.pop();

|

||||

|

||||

for (let event of eventList) {

|

||||

if (!event.startsWith("data"))

|

||||

|

||||
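
The buffering described in the comments above can be exercised without a network stream. A minimal sketch, assuming events are separated by a blank line (`\n\n`) as in the hunk; `createSseBuffer` is a hypothetical name, not a function from the codebase.

```javascript
// Hypothetical helper: accumulates decoded chunks and returns only complete SSE messages.
function createSseBuffer() {
    let buffer = '';
    return function push(chunk) {
        buffer += chunk;
        const events = buffer.split('\n\n');
        // The last element is either '' or an incomplete message; keep it for the next chunk.
        buffer = events.pop();
        return events;
    };
}
```

Splitting on single newlines, as the old code did, hands partial messages to the parser whenever a chunk boundary falls inside one.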

@@ -624,10 +624,10 @@ function loadInstructMode() {

|

||||

}

|

||||

|

||||

export function formatInstructModeChat(name, mes, isUser) {

|

||||

const includeNames = power_user.instruct.names || (selected_group && !isUser);

|

||||

const includeNames = power_user.instruct.names || !!selected_group;

|

||||

const sequence = isUser ? power_user.instruct.input_sequence : power_user.instruct.output_sequence;

|

||||

const separator = power_user.instruct.wrap ? '\n' : '';

|

||||

const textArray = includeNames ? [sequence, name, ': ', mes, separator] : [sequence, mes, separator];

|

||||

const textArray = includeNames ? [sequence, `${name}: ${mes}`, separator] : [sequence, mes, separator];

|

||||

const text = textArray.filter(x => x).join(separator);

|

||||

return text;

|

||||

}

|

||||
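
To see what the revised `formatInstructModeChat` produces, here is a self-contained sketch. It assumes the settings are passed in as a plain object instead of being read from `power_user.instruct`, and it replaces `selected_group` with an `inGroup` flag; both are stand-ins for illustration.

```javascript
// Hypothetical standalone variant of the new formatting logic.
function formatInstructChat(settings, name, mes, isUser, inGroup = false) {
    const includeNames = settings.names || inGroup;
    const sequence = isUser ? settings.input_sequence : settings.output_sequence;
    const separator = settings.wrap ? '\n' : '';
    // Name and message now form one element, so no separator is inserted between them.
    const textArray = includeNames ? [sequence, `${name}: ${mes}`, separator] : [sequence, mes, separator];
    // Empty strings (for example the separator when wrap is off) are dropped before joining.
    return textArray.filter(x => x).join(separator);
}
```

With the old array `[sequence, name, ': ', mes, separator]`, enabling `wrap` placed a newline between the name, the colon, and the message; the template literal avoids that.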

@@ -641,10 +641,11 @@ export function formatInstructStoryString(story) {

|

||||

return text;

|

||||

}

|

||||

|

||||

export function formatInstructModePrompt(isImpersonate) {

|

||||

export function formatInstructModePrompt(name, isImpersonate) {

|

||||

const includeNames = power_user.instruct.names || !!selected_group;

|

||||

const sequence = isImpersonate ? power_user.instruct.input_sequence : power_user.instruct.output_sequence;

|

||||

const separator = power_user.instruct.wrap ? '\n' : '';

|

||||

const text = separator + sequence;

|

||||

const text = includeNames ? (separator + sequence + separator + `${name}:`) : (separator + sequence);

|

||||

return text;

|

||||

}

|

||||

|

||||

|

||||

@@ -527,6 +527,7 @@ code {

|

||||

grid-column-start: 4;

|

||||

flex-flow: column;

|

||||

font-size: 30px;

|

||||

cursor: pointer;

|

||||

}

|

||||

|

||||

.swipe_right img,

|

||||

|

||||

135

readme.md


@@ -1,18 +1,23 @@

|

||||

# SillyTavern

|

||||

|

||||

## Based on a fork of TavernAI 1.2.8

|

||||

|

||||

### Brought to you by Cohee, RossAscends and the SillyTavern community

|

||||

|

||||

NOTE: We have added [a FAQ](faq.md) to answer most of your questions and help you get started.

|

||||

|

||||

### What is SillyTavern or TavernAI?

|

||||

|

||||

Tavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create.

|

||||

|

||||

SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

|

||||

|

||||

### What do I need other than Tavern?

|

||||

|

||||

On its own Tavern is useless, as it's just a user interface. You have to have access to an AI system backend that can act as the roleplay character. There are various supported backends: OpenAI API (GPT), KoboldAI (either running locally or on Google Colab), and more. You can read more about this in [the FAQ](faq.md).

|

||||

|

||||

### Do I need a powerful PC to run Tavern?

|

||||

|

||||

Since Tavern is only a user interface, it has tiny hardware requirements and will run on anything. It's the AI system backend that needs to be powerful.

|

||||

|

||||

## Mobile support

|

||||

@@ -21,13 +26,13 @@ Since Tavern is only a user interface, it has tiny hardware requirements, it wil

|

||||

|

||||

> **This fork can be run natively on Android phones using Termux. Please refer to this guide by ArroganceComplex#2659:**

|

||||

|

||||

https://rentry.org/STAI-Termux

|

||||

<https://rentry.org/STAI-Termux>

|

||||

|

||||

**.webp character cards import/export is not supported in Termux. Use either JSON or PNG formats instead.**

|

||||

|

||||

## Questions or suggestions?

|

||||

|

||||

### We now have a community Discord server!

|

||||

### We now have a community Discord server

|

||||

|

||||

Get support, share favorite characters and prompts:

|

||||

|

||||

@@ -36,11 +41,13 @@ Get support, share favorite characters and prompts:

|

||||

***

|

||||

|

||||

Get in touch with the developers directly:

|

||||

|

||||

* Discord: Cohee#1207 or RossAscends#1779

|

||||

* Reddit: /u/RossAscends or /u/sillylossy

|

||||

* [Post a GitHub issue](https://github.com/Cohee1207/SillyTavern/issues)

|

||||

|

||||

## This version includes

|

||||

|

||||

* A heavily modified TavernAI 1.2.8 (more than 50% of code rewritten or optimized)

|

||||

* Swipes

|

||||

* Group chats: multi-bot rooms for characters to talk to you or each other

|

||||

@@ -54,12 +61,15 @@ Get in touch with the developers directly:

|

||||

* Prompt generation formatting tweaking

|

||||

* webp character card interoperability (PNG is still an internal format)

|

||||

* Extensibility support via [SillyLossy's TAI-extras](https://github.com/Cohee1207/TavernAI-extras) plugins

|

||||

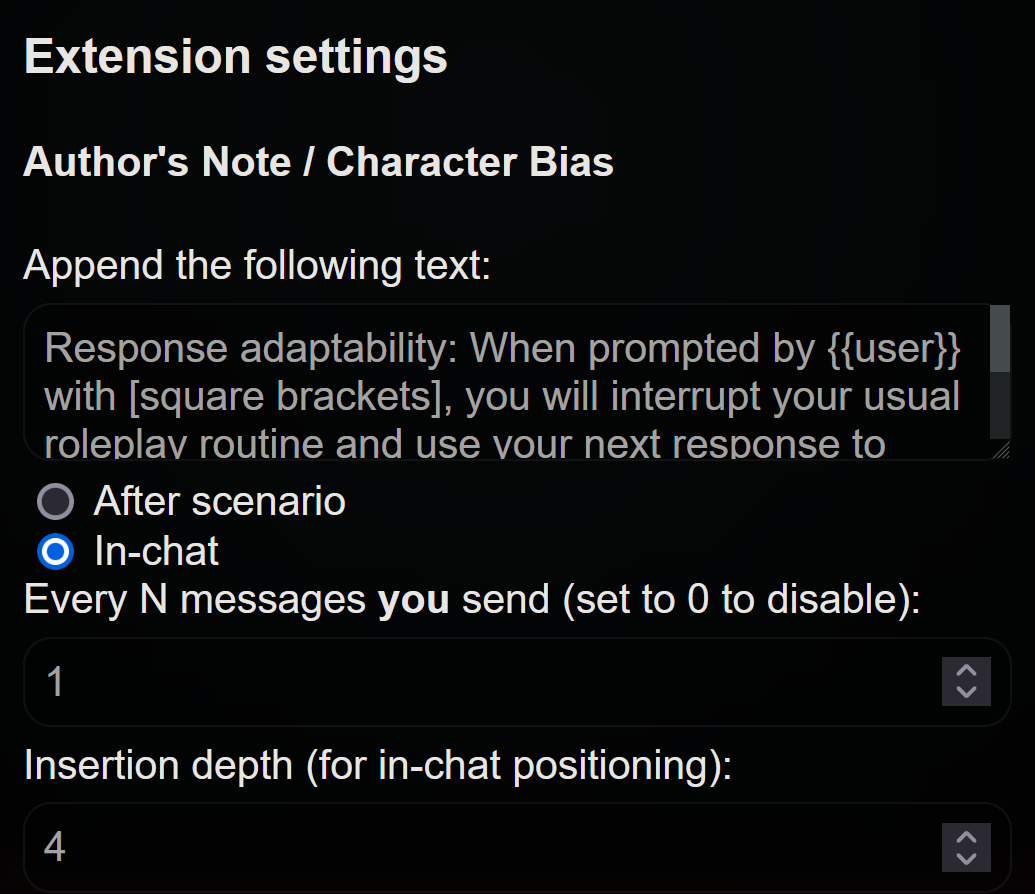

* Author's Note / Character Bias

|

||||

* Character emotional expressions

|

||||

* Auto-Summary of the chat history

|

||||

* Sending images to chat, and the AI interpreting the content.

|

||||

* Author's Note / Character Bias

|

||||

* Character emotional expressions

|

||||

* Auto-Summary of the chat history

|

||||

* Sending images to chat, and the AI interpreting the content.

|

||||

* Stable Diffusion image generation (5 chat-related presets plus 'free mode')

|

||||

* Text-to-speech for AI response messages (via ElevenLabs, Silero, or the OS's System TTS)

|

||||

|

||||

## UI Extensions 🚀

|

||||

|

||||

| Name | Description | Required <a href="https://github.com/Cohee1207/TavernAI-extras#modules" target="_blank">Extra Modules</a> | Screenshot |

|

||||

| ---------------- | ---------------------------------| ---------------------------- | ---------- |

|

||||

| Image Captioning | Send a cute picture to your bot!<br><br>Picture select option will appear beside the "Message send" button. | `caption` | <img src="https://user-images.githubusercontent.com/18619528/224161576-ddfc51cd-995e-44ec-bf2d-d2477d603f0c.png" style="max-width:200px" /> |

|

||||

@@ -68,6 +78,8 @@ Get in touch with the developers directly:

|

||||

| D&D Dice | A set of 7 classic D&D dice for all your dice rolling needs.<br><br>*I used to roll the dice.<br>Feel the fear in my enemies' eyes* | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/226199925-a066c6fc-745e-4a2b-9203-1cbffa481b14.png"> |

|

||||

| Author's Note | Built-in extension that allows you to append notes that will be added to the context and steer the story and character in a specific direction. Because it's sent after the character description, it has a lot of weight. Thanks Ali឵#2222 for pitching the idea! | None |  |

|

||||

| Character Backgrounds | Built-in extension to assign unique backgrounds to specific chats or groups. | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/233494454-bfa7c9c7-4faa-4d97-9c69-628fd96edd92.png"> |

|

||||

| Stable Diffusion | Use local or cloud-based Stable Diffusion webUI API to generate images. 5 presets included ('you', 'your face', 'me', 'the story', and 'the last message'). Free mode is also supported via the `/sd (anything_here_)` command in the chat input bar. Most common StableDiffusion generation settings are customizable within the SillyTavern UI. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/ppata8.png"> |

|

||||

| Text-to-Speech | AI-generated voice will read back character messages on demand, or automatically read new messages as they arrive. Supports ElevenLabs, Silero, and your device's TTS service. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/o3wxkk.png"> |

|

||||

|

||||

## UI/CSS/Quality of Life tweaks by RossAscends

|

||||

|

||||

@@ -78,7 +90,7 @@ Get in touch with the developers directly:

|

||||

* Left = swipe left

|

||||

* Right = swipe right (NOTE: swipe hotkeys are disabled when chatbar has something typed into it)

|

||||

* Ctrl+Left = view locally stored variables (in the browser console window)

|

||||

* Enter (with chat bar selected) = send your message to AI

|

||||

* Enter (with chat bar selected) = send your message to AI

|

||||

* Ctrl+Enter = Regenerate the last AI response

|

||||

|

||||

* User Name Changes and Character Deletion no longer force the page to refresh.

|

||||

@@ -97,12 +109,12 @@ Get in touch with the developers directly:

|

||||

* Nav panel status of open or closed will also be saved across sessions.

|

||||

|

||||

* Customizable chat UI:

|

||||

* Play a sound when a new message arrives

|

||||

* Switch between round or rectangle avatar styles

|

||||

* Have a wider chat window on the desktop

|

||||

* Optional semi-transparent glass-like panels

|

||||

* Customizable page colors for 'main text', 'quoted text' 'italics text'.

|

||||

* Customizable UI background color and blur amount

|

||||

* Play a sound when a new message arrives

|

||||

* Switch between round or rectangle avatar styles

|

||||

* Have a wider chat window on the desktop

|

||||

* Optional semi-transparent glass-like panels

|

||||

* Customizable page colors for 'main text', 'quoted text' 'italics text'.

|

||||

* Customizable UI background color and blur amount

|

||||

|

||||

## Installation

|

||||

|

||||

@@ -115,6 +127,27 @@ Get in touch with the developers directly:

|

||||

> DO NOT RUN START.BAT WITH ADMIN PERMISSIONS

|

||||

|

||||

### Windows

|

||||

|

||||

Installing via Git (recommended for easy updating)

|

||||

|

||||

Easy to follow guide with pretty pictures:

|

||||

<https://docs.alpindale.dev/pygmalion-extras/sillytavern/#windows-installation>

|

||||

|

||||

1. Install [NodeJS](https://nodejs.org/en) (latest LTS version is recommended)

|

||||

2. Install [GitHub Desktop](https://central.github.com/deployments/desktop/desktop/latest/win32)

|

||||

3. Open Windows Explorer (`Win+E`)

|

||||

4. Browse to or Create a folder that is not controlled or monitored by Windows. (ex: C:\MySpecialFolder\)

|

||||

5. Open a Command Prompt inside that folder by clicking in the 'Address Bar' at the top, typing `cmd`, and pressing Enter.

|

||||

6. Once the black box (Command Prompt) pops up, type ONE of the following into it and press Enter:

|

||||

|

||||

* for Main Branch: `git clone https://github.com/Cohee1207/SillyTavern -b main`

|

||||

* for Dev Branch: `git clone https://github.com/Cohee1207/SillyTavern -b dev`

|

||||

|

||||

7. Once everything is cloned, double click `Start.bat` to make NodeJS install its requirements.

|

||||

8. The server will then start, and SillyTavern will pop up in your browser.

|

||||

|

||||

Installing via zip download

|

||||

|

||||

1. install [NodeJS](https://nodejs.org/en) (latest LTS version is recommended)

|

||||

2. download the zip from this GitHub repo

|

||||

3. unzip it into a folder of your choice

|

||||

@@ -122,6 +155,7 @@ Get in touch with the developers directly:

|

||||

5. Once the server has prepared everything for you, it will open a tab in your browser.

|

||||

|

||||

### Linux

|

||||

|

||||

1. Run the `start.sh` script.

|

||||

2. Enjoy.

|

||||

|

||||

@@ -138,41 +172,59 @@ In order to enable viewing your keys by clicking a button in the API block:

|

||||

|

||||

## Remote connections

|

||||

|

||||

Most often this is for people who want to use SillyTavern on their mobile phones while at home.

|

||||

If you want to enable other devices to connect to your TAI server, open 'config.conf' in a text editor, and change:

|

||||

Most often this is for people who want to use SillyTavern on their mobile phones while their PC runs the ST server on the same wifi network.

|

||||

|

||||

```

|

||||

const whitelistMode = true;

|

||||

```

|

||||

to

|

||||

```

|

||||

const whitelistMode = false;

|

||||

```

|

||||

Save the file.

|

||||

Restart your TAI server.

|

||||

However, it can be used to allow remote connections from anywhere as well.

|

||||

|

||||

You will now be able to connect from other devices.

|

||||

**IMPORTANT: SillyTavern is a single-user program, so anyone who logs in will be able to see all characters and chats, and be able to change any settings inside the UI.**

|

||||

|

||||

### Managing whitelisted IPs

|

||||

### 1. Managing whitelisted IPs

|

||||

|

||||

You can add or remove whitelisted IPs by editing the `whitelist` array in `config.conf`. You can also provide a `whitelist.txt` file in the same directory as `config.conf` with one IP address per line like:

|

||||

* Create a new text file inside your SillyTavern base install folder called `whitelist.txt`.

|

||||

* Open the file in a text editor, add a list of IPs you want to be allowed to connect.

|

||||

|

||||

*IP ranges are not accepted. Each IP must be listed individually like this:*

|

||||

```txt

|

||||

192.168.0.1

|

||||

192.168.0.2

|

||||

192.168.0.3

|

||||

192.168.0.4

|

||||

```

|

||||

* Save the `whitelist.txt` file.

|

||||

* Restart your TAI server.

|

||||

|

||||

The `whitelist` array in `config.conf` will be ignored if `whitelist.txt` exists.

|

||||

Now devices which have the IP specified in the file will be able to connect.

|

||||

|

||||

***Disclaimer: Anyone else who knows your IP address and TAI port number will be able to connect as well***

|

||||

*Note: `config.conf` also has a `whitelist` array, which you can use in the same way, but this array will be ignored if `whitelist.txt` exists.*
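
The one-IP-per-line format described above could be read along these lines. This is only a sketch of the file format, not the server's actual loading code (which is not shown in this diff); `parseWhitelist` and `isAllowed` are hypothetical names.

```javascript
// Hypothetical parser for the contents of whitelist.txt: one IP address per line.
function parseWhitelist(text) {
    return text
        .split(/\r?\n/)              // accept both Unix and Windows line endings
        .map(line => line.trim())
        .filter(line => line.length > 0);
}

// Exact-match check only: ranges are not expanded, in line with the note above.
function isAllowed(ip, whitelist) {
    return whitelist.includes(ip);
}
```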

|

||||

|

||||

To connect over wifi you'll need your PC's local wifi IP address

|

||||

- (For Windows: windows button > type 'cmd.exe' in the search bar> type 'ipconfig' in the console, hit Enter > "IPv4" listing)

|

||||

if you want other people on the internet to connect, check [here](https://whatismyipaddress.com/) for 'IPv4'

|

||||

### 2. Connecting to ST from a remote device

|

||||

|

||||

After the whitelist has been set up, to connect over wifi you'll need the IP of the ST-hosting device.

|

||||

|

||||

If the ST-hosting device is on the same wifi network, you will point your remote device's browser to the ST-host's internal wifi IP:

|

||||

|

||||

* For Windows: windows button > type `cmd.exe` in the search bar > type `ipconfig` in the console, hit Enter > look for `IPv4` listing.

|

||||

|

||||

If you (or someone else) want to connect to your hosted ST while not being on the same network, you will need the public IP of your ST-hosting device.

|

||||

|

||||

While using the ST-hosting device, access [this page](https://whatismyipaddress.com/) and look for `IPv4`. This is what you would use to connect from the remote device.

|

||||

|

||||

### Opening your ST to all IPs

|

||||

|

||||

We do not recommend doing this, but you can open `config.conf` and change `whitelistMode` to `false`.

|

||||

|

||||

You must remove (or rename) `whitelist.txt` in the SillyTavern base install folder, if it exists.

|

||||

|

||||

This is usually an insecure practice, so we require you to set a username and password when you do this.

|

||||

|

||||

The username and password are set in `config.conf`.

|

||||

|

||||

After restarting your ST server, any device will be able to connect to it, regardless of their IP as long as they know the username and password.

|

||||

|

||||

### Still Unable To Connect?

|

||||

- Create an inbound/outbound firewall rule for the port found in `config.conf`. Do NOT mistake this for portforwarding on your router, otherwise someone could find your chat logs and that's a big no-no.

|

||||

- Enable the Private Network profile type in Settings > Network and Internet > Ethernet. This is VERY important for Windows 11, otherwise you would be unable to connect even with the aforementioned firewall rules.

|

||||

|

||||

* Create an inbound/outbound firewall rule for the port found in `config.conf`. Do NOT mistake this for portforwarding on your router, otherwise someone could find your chat logs and that's a big no-no.

|

||||

* Enable the Private Network profile type in Settings > Network and Internet > Ethernet. This is VERY important for Windows 11, otherwise you would be unable to connect even with the aforementioned firewall rules.

|

||||

|

||||

## Performance issues?

|

||||

|

||||

@@ -198,9 +250,10 @@ We're moving to 100% original content only policy, so old background images have

|

||||

|

||||

You can find them archived here:

|

||||

|

||||

https://files.catbox.moe/1xevnc.zip

|

||||

<https://files.catbox.moe/1xevnc.zip>

|

||||

|

||||

## Screenshots

|

||||

|

||||

<img width="400" alt="image" src="https://user-images.githubusercontent.com/18619528/228649245-8061c60f-63dc-488e-9325-f151b7a3ec2d.png">

|

||||

<img width="400" alt="image" src="https://user-images.githubusercontent.com/18619528/228649856-fbdeef05-d727-4d5a-be80-266cbbc6b811.png">

|

||||

|

||||

@@ -215,13 +268,13 @@ GNU Affero General Public License for more details.**

|

||||

* Cohee's modifications and derived code: AGPL v3

|

||||

* RossAscends' additions: AGPL v3

|

||||

* Portions of CncAnon's TavernAITurbo mod: Unknown license

|

||||

* Waifu mode inspired by the work of PepperTaco (https://github.com/peppertaco/Tavern/)

|

||||

* Thanks Pygmalion University for being awesome testers and suggesting cool features!

|

||||

* Waifu mode inspired by the work of PepperTaco (<https://github.com/peppertaco/Tavern/>)

|

||||

* Thanks Pygmalion University for being awesome testers and suggesting cool features!

|

||||

* Thanks oobabooga for compiling presets for TextGen

|

||||

* poe-api client adapted from https://github.com/ading2210/poe-api (GPL v3)

|

||||

* GraphQL files for poe: https://github.com/muharamdani/poe (ISC License)

|

||||

* KoboldAI Presets from KAI Lite: https://lite.koboldai.net/

|

||||

* poe-api client adapted from <https://github.com/ading2210/poe-api> (GPL v3)

|

||||

* GraphQL files for poe: <https://github.com/muharamdani/poe> (ISC License)

|

||||

* KoboldAI Presets from KAI Lite: <https://lite.koboldai.net/>

|

||||

* Noto Sans font by Google (OFL license)

|

||||

* Icon theme by Font Awesome https://fontawesome.com (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License)

|

||||

* Icon theme by Font Awesome <https://fontawesome.com> (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License)

|

||||

* Linux startup script by AlpinDale

|

||||

* Thanks paniphons for providing a FAQ document

|

||||

|

||||

10

server.js


@@ -860,7 +860,7 @@ async function charaWrite(img_url, data, target_img, response = undefined, mes =

|

||||

let rawImg = await jimp.read(img_url);

|

||||

|

||||

// Apply crop if defined

|

||||

if (typeof crop == 'object') {

|

||||

if (typeof crop == 'object' && [crop.x, crop.y, crop.width, crop.height].every(x => typeof x === 'number')) {

|

||||

rawImg = rawImg.crop(crop.x, crop.y, crop.width, crop.height);

|

||||

}

|

||||

|

||||
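
The stricter crop check in this hunk can be expressed as a predicate. A minimal sketch; `isValidCrop` is a hypothetical name, and the explicit `null` guard is an addition not present in the diff (`typeof null` is also `'object'`, so reading `crop.x` on it would throw).

```javascript
// Hypothetical predicate mirroring the new condition in charaWrite.
function isValidCrop(crop) {
    return typeof crop === 'object'
        && crop !== null // added guard, not part of the original condition
        && [crop.x, crop.y, crop.width, crop.height].every(x => typeof x === 'number');
}
```

An empty object, which passed the old `typeof crop == 'object'` test, is now rejected before it reaches the crop call.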

@@ -2437,7 +2437,13 @@ app.post("/openai_bias", jsonParser, async function (request, response) {

|

||||

// Shamelessly stolen from Agnai

|

||||

app.post("/openai_usage", jsonParser, async function (request, response) {

|

||||

if (!request.body) return response.sendStatus(400);

|

||||

const key = request.body.key;

|

||||

const key = readSecret(SECRET_KEYS.OPENAI);

|

||||

|

||||

if (!key) {

|

||||

console.warn('Get key usage failed: Missing OpenAI API key.');

|

||||

return response.sendStatus(401);

|

||||

}

|

||||

|

||||