mirror of https://github.com/SillyTavern/SillyTavern.git

synced 2025-06-05 21:59:27 +02:00

Merge branch 'main' into dev


@@ -98,7 +98,7 @@
 "!git clone https://github.com/Cohee1207/tts_samples\n",
 "!npm install -g localtunnel\n",
 "!pip install -r requirements-complete.txt\n",
-"!pip install tensorflow==2.11\n",
+"!pip install tensorflow==2.12\n",
 "\n",
 "\n",
 "cmd = f\"python server.py {' '.join(params)}\"\n",

@@ -1,12 +1,13 @@
 version: "3"
 services:
-  tavernai:
+  sillytavern:
     build: ..
-    container_name: tavernai
-    hostname: tavernai
-    image: tavernai/tavernai:latest
+    container_name: sillytavern
+    hostname: sillytavern
+    image: cohee1207/sillytavern:latest
     ports:
       - "8000:8000"
     volumes:
       - "./config:/home/node/app/config"
-    restart: unless-stopped
+      - "./config.conf:/home/node/app/config.conf"
+    restart: unless-stopped

BIN public/backgrounds/_black.jpg
Normal file
Binary file not shown.
After size: 7.9 KiB

BIN public/backgrounds/_white.jpg
Normal file
Binary file not shown.
After size: 7.5 KiB

@@ -562,13 +562,19 @@ async function sendOpenAIRequest(type, openai_msgs_tosend, signal) {
 const decoder = new TextDecoder();
 const reader = response.body.getReader();
 let getMessage = "";
+let messageBuffer = "";
 while (true) {
     const { done, value } = await reader.read();
     let response = decoder.decode(value);
 
     tryParseStreamingError(response);
 
-    let eventList = response.split("\n");
+    // ReadableStream's buffer is not guaranteed to contain full SSE messages as they arrive in chunks
+    // We need to buffer chunks until we have one or more full messages (separated by double newlines)
+    messageBuffer += response;
+    let eventList = messageBuffer.split("\n\n");
+    // Last element will be an empty string or a leftover partial message
+    messageBuffer = eventList.pop();
 
     for (let event of eventList) {
         if (!event.startsWith("data"))
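The hunk above stops splitting each raw chunk on "\n". Instead it accumulates decoded text in `messageBuffer`, handles only complete SSE events (those terminated by a blank line), and carries any partial event over to the next read.

The standalone sketch below illustrates the same buffering technique outside SillyTavern. Some points to note:

* `createSSEParser`, `push` and `end` are hypothetical names and are not part of the codebase.
* It assumes events are separated by exactly "\n\n", as in the commit.
* Like the commit, it keeps only events that start with "data".
* It does not handle "\r\n" line endings or multi-line `data:` fields.
* Unlike the lines shown, it passes `{ stream: true }` to `TextDecoder.decode`. This prevents a multi-byte UTF-8 character that is split across two chunks from being garbled.

```javascript
// Minimal sketch (hypothetical helper, not SillyTavern code): buffer streamed
// chunks and emit only complete Server-Sent Events, which end with "\n\n".
function createSSEParser(onEvent) {
    const decoder = new TextDecoder();
    let messageBuffer = "";

    return {
        // Feed one Uint8Array chunk, e.g. the `value` returned by reader.read().
        push(chunk) {
            // stream: true holds back an incomplete multi-byte UTF-8 sequence
            // until the rest of it arrives in the next chunk.
            messageBuffer += decoder.decode(chunk, { stream: true });
            const events = messageBuffer.split("\n\n");
            // The last element is "" or a partial event; keep it for later.
            messageBuffer = events.pop();
            for (const event of events) {
                if (!event.startsWith("data")) continue;
                // Strip the "data:" field name and one optional leading space.
                onEvent(event.slice("data:".length).replace(/^ /, ""));
            }
        },
        // Flush bytes still held by the decoder once the stream is done and
        // return any leftover text that never got a terminating "\n\n".
        end() {
            messageBuffer += decoder.decode();
            return messageBuffer;
        },
    };
}

// Usage: two events delivered in three chunks; byte 19 falls inside "é".
const bytes = new TextEncoder().encode('data: {"text":"café"}\n\ndata: [DONE]\n\n');
const received = [];
const parser = createSSEParser((data) => received.push(data));
parser.push(bytes.slice(0, 19));
parser.push(bytes.slice(19, 30));
parser.push(bytes.slice(30));
console.log(received);
```

The three chunks cut through both an event boundary and the two-byte "é". Even so, `received` ends up holding the two complete payloads, `{"text":"café"}` and `[DONE]`, and `parser.end()` returns an empty string.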


@@ -65,6 +65,8 @@ Get in touch with the developers directly:
 * Character emotional expressions
 * Auto-Summary of the chat history
 * Sending images to chat, and the AI interpreting the content.
+* Stable Diffusion image generation (5 chat-related presets plus 'free mode')
+* Text-to-speech for AI response messages (via ElevenLabs, Silero, or the OS's System TTS)
 
 ## UI Extensions 🚀
 

@ -76,6 +78,8 @@ Get in touch with the developers directly:

|

||||

| D&D Dice | A set of 7 classic D&D dice for all your dice rolling needs.<br><br>*I used to roll the dice.<br>Feel the fear in my enemies' eyes* | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/226199925-a066c6fc-745e-4a2b-9203-1cbffa481b14.png"> |

|

||||

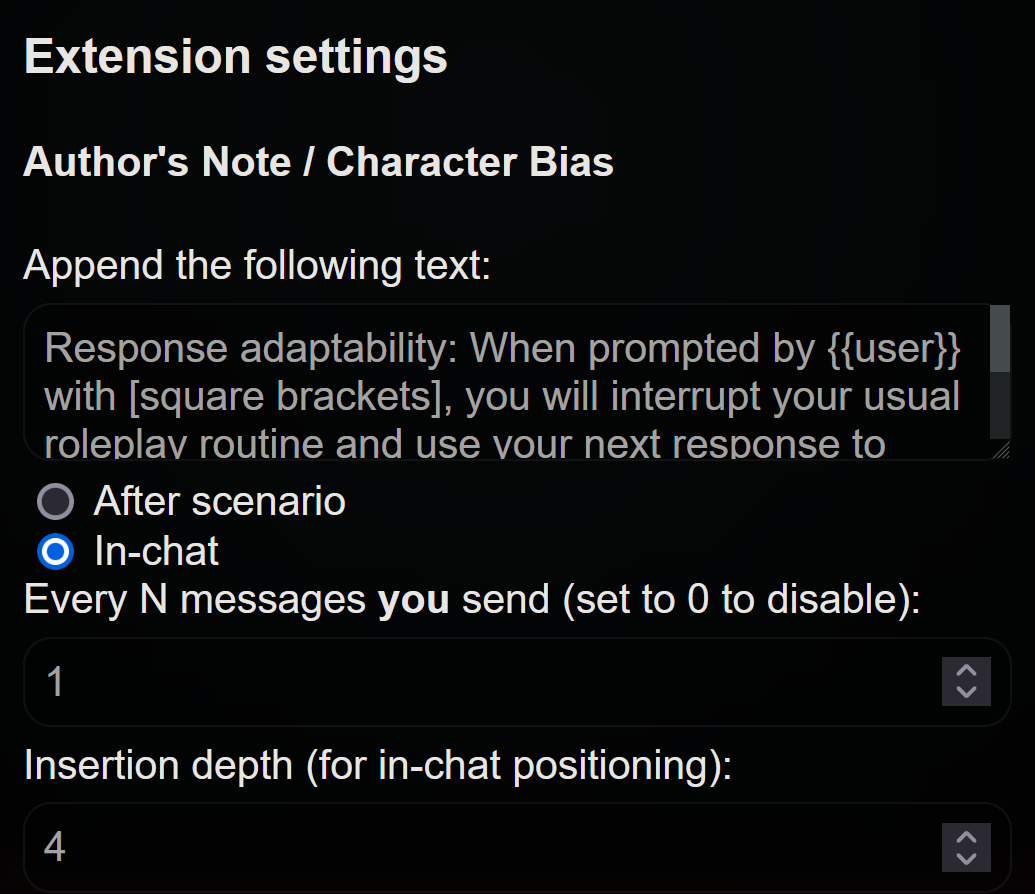

| Author's Note | Built-in extension that allows you to append notes that will be added to the context and steer the story and character in a specific direction. Because it's sent after the character description, it has a lot of weight. Thanks Ali឵#2222 for pitching the idea! | None |  |

|

||||

| Character Backgrounds | Built-in extension to assign unique backgrounds to specific chats or groups. | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/233494454-bfa7c9c7-4faa-4d97-9c69-628fd96edd92.png"> |

|

||||

| Stable Diffusion | Use local of cloud-based Stable Diffusion webUI API to generate images. 5 presets included ('you', 'your face', 'me', 'the story', and 'the last message'. Free mode also supported via `/sd (anything_here_)` command in the chat input bar. Most common StableDiffusion generation settings are customizable within the SillyTavern UI. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/ppata8.png"> |

|

||||

| Text-to-Speech | AI-generated voice will read back character messages on demand, or automatically read new messages they arrive. Supports ElevenLabs, Silero, and your device's TTS service. | None | <img style="max-width:200px" alt="image" src="https://files.catbox.moe/o3wxkk.png"> |

|

||||

|

||||

## UI/CSS/Quality of Life tweaks by RossAscends

|

||||

|

||||

|

||||
