mirror of

https://github.com/SillyTavern/SillyTavern.git

synced 2025-06-05 21:59:27 +02:00

Merge branch 'main' into dev

This commit is contained in:

498

colab/GPU.ipynb

@@ -1,192 +1,413 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "d-Yihz3hAb2E"

|

||||

},

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"https://colab.research.google.com/github/TavernAI/TavernAI/blob/main/colab/GPU.ipynb<br>\n",

|

||||

"\n",

|

||||

"Works with:<br>\n",

|

||||

"KoboldAI https://github.com/KoboldAI/KoboldAI-Client<br>\n",

|

||||

"Pygmalion https://huggingface.co/PygmalionAI/<br>\n",

|

||||

"<br>\n",

|

||||

"**Links**<br>\n",

|

||||

"TavernAI Github https://github.com/TavernAI/TavernAI<br>\n",

|

||||

"Cohee's TavernAI fork Github https://github.com/Cohee1207/SillyTavern<br>\n",

|

||||

"Cohee's TavernAI Extras Github https://github.com/Cohee1207/TavernAI-extras/<br>\n",

|

||||

"TavernAI Discord https://discord.gg/zmK2gmr45t<br>\n",

|

||||

"TavernAI Boosty https://boosty.to/tavernai\n",

|

||||

"<pre>\n",

|

||||

" Tavern.AI/ \\ / ^ ^ ^ ^ ~~~~ ^ \\ / ^ ^ ^ ^/ ^ ^ \\/^ ^ \\\n",

|

||||

" /^ ^\\ ^ ^ ^ ^ ^ ~~ ^ \\ / ^ ^ ^ / ^ ^ ^/ ^ ^ \\\n",

|

||||

" /^ ^ ^\\^ ^ ^ ^ _||____ ^ \\ / ^ ^ ^ / / ^ ^ ^ \\\n",

|

||||

" /\\ /\\ /\\ ^ \\ /\\ /\\ /\\\\\\\\\\\\\\\\ ^ \\ ^ /\\ /\\ /\\ /\\ /\\ /\\ ^ ^ ^/\\\n",

|

||||

"//\\\\/\\\\/\\\\ ^ \\//\\\\/\\\\ /__\\\\\\\\\\\\\\\\ _, \\ //\\\\/\\\\/\\\\ //\\\\/\\\\/\\\\ ^ ^ //\\\\\n",

|

||||

"//\\\\/\\\\/\\\\ //\\\\/\\\\ |__|_|_|__| \\__, //\\\\/\\\\/\\\\ //\\\\/\\\\/\\\\ ///\\\\\\\n",

|

||||

" || || (@^◡^)(≖ ‸ ≖*) ( ←_← )\\| /| /\\ \\ヽ(°ㅂ°╬) |( Ψ▼ー▼)∈ (O_O; ) |||\n",

|

||||

"~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ ~~~~~ ~~~~~ ~~~~~ ~~~~~ ~~~~~ ~~ \n",

|

||||

"</pre>\n",

|

||||

"**Launch Instructions**<br>\n",

|

||||

"1. Click the launch button.\n",

|

||||

"2. Wait for the environment and model to load\n",

|

||||

"3. After initialization, a TavernAI link will appear\n",

|

||||

"\n",

|

||||

"**Faq**<br>\n",

|

||||

"* Q: I do not get a TavernAI link\n",

|

||||

"* A: It seems the localtunnel service is currently down, so the TavernAI link is unavailable. You need to wait for it to start working again."

|

||||

"Questions? Hit me up on Discord: Cohee#1207"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "hCpoIHxYcDGs"

|

||||

"cellView": "form",

|

||||

"id": "_1gpebrnlp5-"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"#@title <b><-- Convert TavernAI characters to SillyTavern format</b>\n",

|

||||

"\n",

|

||||

"!mkdir /convert\n",

|

||||

"%cd /convert\n",

|

||||

"\n",

|

||||

"import os\n",

|

||||

"from google.colab import drive\n",

|

||||

"\n",

|

||||

"drive.mount(\"/convert/drive\")\n",

|

||||

"\n",

|

||||

"!git clone -b tools https://github.com/EnergoStalin/SillyTavern.git\n",

|

||||

"%cd SillyTavern\n",

|

||||

"\n",

|

||||

"!curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.37.2/install.sh | bash\n",

|

||||

"!nvm install 19.1.0\n",

|

||||

"!nvm use 19.1.0\n",

|

||||

"\n",

|

||||

"%cd tools/charaverter\n",

|

||||

"\n",

|

||||

"!npm i\n",

|

||||

"\n",

|

||||

"path = \"/convert/drive/MyDrive/TavernAI/characters\"\n",

|

||||

"output = \"/convert/drive/MyDrive/SillyTavern/characters\"\n",

|

||||

"if not os.path.exists(path):\n",

|

||||

" path = output\n",

|

||||

"\n",

|

||||

"!mkdir -p $output\n",

|

||||

"!node main.mjs $path $output\n",

|

||||

"\n",

|

||||

"drive.flush_and_unmount()\n",

|

||||

"\n",

|

||||

"%cd /\n",

|

||||

"!rm -rf /convert"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "ewkXkyiFP2Hq"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"#@title <-- Tap this if you play on Mobile { display-mode: \"form\" }\n",

|

||||

"#Taken from KoboldAI colab\n",

|

||||

"%%html\n",

|

||||

"<b>Press play on the music player to keep the tab alive, then start TavernAI below (Uses only 13MB of data)</b><br/>\n",

|

||||

"<audio src=\"https://henk.tech/colabkobold/silence.m4a\" controls>"

|

||||

"<b>Press play on the music player to keep the tab alive, then start KoboldAI below (Uses only 13MB of data)</b><br/>\n",

|

||||

"<audio src=\"https://raw.githubusercontent.com/KoboldAI/KoboldAI-Client/main/colab/silence.m4a\" controls>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "hps3qtPLFNBb",

|

||||

"cellView": "form"

|

||||

"cellView": "form",

|

||||

"id": "lVftocpwCoYw"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"#@title <b>TavernAI</b>\n",

|

||||

"#@markdown <- Click For Start (≖ ‸ ≖ ✿)\n",

|

||||

"#@title <b><-- Select your model below and then click this to start KoboldAI</b>\n",

|

||||

"\n",

|

||||

"Model = \"Pygmalion 6B\" #@param [ \"Pygmalion 6B\", \"Pygmalion 6B Dev\"] {allow-input: true}\n",

|

||||

"Version = \"Official\" \n",

|

||||

"KoboldAI_Provider = \"Localtunnel\" #@param [\"Localtunnel\", \"Cloudflare\"]\n",

|

||||

"use_google_drive = True #@param {type:\"boolean\"}\n",

|

||||

"Provider = KoboldAI_Provider\n",

|

||||

"Model = \"Pygmalion 6B\" #@param [\"Nerys V2 6B\", \"Erebus 6B\", \"Skein 6B\", \"Janeway 6B\", \"Adventure 6B\", \"Pygmalion 6B\", \"Pygmalion 6B Dev\", \"Lit V2 6B\", \"Lit 6B\", \"Shinen 6B\", \"Nerys 2.7B\", \"AID 2.7B\", \"Erebus 2.7B\", \"Janeway 2.7B\", \"Picard 2.7B\", \"Horni LN 2.7B\", \"Horni 2.7B\", \"Shinen 2.7B\", \"OPT 2.7B\", \"Fairseq Dense 2.7B\", \"Neo 2.7B\", \"Pygway 6B\", \"Nerybus 6.7B\", \"Pygway v8p4\", \"PPO-Janeway 6B\", \"PPO Shygmalion 6B\", \"LLaMA 7B\", \"Janin-GPTJ\", \"Javelin-GPTJ\", \"Javelin-R\", \"Janin-R\", \"Javalion-R\", \"Javalion-GPTJ\", \"Javelion-6B\", \"GPT-J-Pyg-PPO-6B\", \"ppo_hh_pythia-6B\", \"ppo_hh_gpt-j\", \"GPT-J-Pyg_PPO-6B\", \"GPT-J-Pyg_PPO-6B-Dev-V8p4\", \"Dolly_GPT-J-6b\", \"Dolly_Pyg-6B\"] {allow-input: true}\n",

|

||||

"Version = \"Official\" #@param [\"Official\", \"United\"] {allow-input: true}\n",

|

||||

"Provider = \"Localtunnel\" #@param [\"Localtunnel\"]\n",

|

||||

"ForceInitSteps = [] #@param {allow-input: true}\n",

|

||||

"UseGoogleDrive = True #@param {type:\"boolean\"}\n",

|

||||

"StartKoboldAI = True #@param {type:\"boolean\"}\n",

|

||||

"ModelsFromDrive = False #@param {type:\"boolean\"}\n",

|

||||

"UseExtrasExtensions = True #@param {type:\"boolean\"}\n",

|

||||

"#@markdown Enables hosting of extensions backend for TavernAI Extras\n",

|

||||

"extras_enable_captioning = True #@param {type:\"boolean\"}\n",

|

||||

"#@markdown Loads the image captioning module\n",

|

||||

"Captions_Model = \"Salesforce/blip-image-captioning-large\" #@param [ \"Salesforce/blip-image-captioning-large\", \"Salesforce/blip-image-captioning-base\" ]\n",

|

||||

"#@markdown * Salesforce/blip-image-captioning-large - good base model\n",

|

||||

"#@markdown * Salesforce/blip-image-captioning-base - slightly faster but less accurate\n",

|

||||

"extras_enable_emotions = True #@param {type:\"boolean\"}\n",

|

||||

"#@markdown Loads the sentiment classification model\n",

|

||||

"Emotions_Model = \"bhadresh-savani/distilbert-base-uncased-emotion\" #@param [\"bhadresh-savani/distilbert-base-uncased-emotion\", \"joeddav/distilbert-base-uncased-go-emotions-student\"]\n",

|

||||

"#@markdown * bhadresh-savani/distilbert-base-uncased-emotion = 6 supported emotions<br>\n",

|

||||

"#@markdown * joeddav/distilbert-base-uncased-go-emotions-student = 28 supported emotions\n",

|

||||

"extras_enable_memory = True #@param {type:\"boolean\"}\n",

|

||||

"#@markdown Loads the story summarization module\n",

|

||||

"Memory_Model = \"Qiliang/bart-large-cnn-samsum-ChatGPT_v3\" #@param [ \"Qiliang/bart-large-cnn-samsum-ChatGPT_v3\", \"Qiliang/bart-large-cnn-samsum-ElectrifAi_v10\", \"distilbart-xsum-12-3\" ]\n",

|

||||

"#@markdown * Qiliang/bart-large-cnn-samsum-ChatGPT_v3 - summarization model optimized for chats\n",

|

||||

"#@markdown * Qiliang/bart-large-cnn-samsum-ElectrifAi_v10 - nice results so far, but still being evaluated\n",

|

||||

"#@markdown * distilbart-xsum-12-3 - faster, but pretty basic alternative\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"%cd /content\n",

|

||||

"\n",

|

||||

"!cat .ii\n",

|

||||

"!nvidia-smi\n",

|

||||

"import subprocess\n",

|

||||

"import time\n",

|

||||

"import sys\n",

|

||||

"import os\n",

|

||||

"import threading\n",

|

||||

"import shutil\n",

|

||||

"\n",

|

||||

"import os, subprocess, time, pathlib, json, base64\n",

|

||||

"\n",

|

||||

"class ModelData:\n",

|

||||

" def __init__(self, name, version = Version, revision = \"\", path = \"\", download = \"\"):\n",

|

||||

" try:\n",

|

||||

" self.name = base64.b64decode(name.encode(\"ascii\")).decode(\"ascii\")\n",

|

||||

" except:\n",

|

||||

" self.name = name\n",

|

||||

" self.version = version \n",

|

||||

" self.revision = revision\n",

|

||||

" self.path = path\n",

|

||||

" self.download = download\n",

|

||||

" def args(self):\n",

|

||||

" args = [\"-m\", self.name, \"-g\", self.version]\n",

|

||||

" if(self.revision):\n",

|

||||

" args += [\"-r\", self.revision]\n",

|

||||

" return args\n",

|

||||

"\n",

|

||||

"class IncrementialInstall:\n",

|

||||

" def __init__(self, tasks = [], force = []):\n",

|

||||

" self.tasks = tasks\n",

|

||||

" self.completed = list(filter(lambda x: not x in force, self.__completed()))\n",

|

||||

"\n",

|

||||

" def __completed(self):\n",

|

||||

" try:\n",

|

||||

" with open(\".ii\") as f:\n",

|

||||

" return json.load(f)\n",

|

||||

" except:\n",

|

||||

" return []\n",

|

||||

"\n",

|

||||

" def addTask(self, name, func):\n",

|

||||

" self.tasks.append({\"name\": name, \"func\": func})\n",

|

||||

"\n",

|

||||

" def run(self):\n",

|

||||

" todo = list(filter(lambda x: not x[\"name\"] in self.completed, self.tasks))\n",

|

||||

" try:\n",

|

||||

" for task in todo:\n",

|

||||

" task[\"func\"]()\n",

|

||||

" self.completed.append(task[\"name\"])\n",

|

||||

" finally:\n",

|

||||

" with open(\".ii\", \"w\") as f:\n",

|

||||

" json.dump(self.completed, f)\n",

|

||||

"\n",

|

||||

"ii = IncrementialInstall(force=ForceInitSteps)\n",

|

||||

"\n",

|

||||

"def create_paths(paths):\n",

|

||||

" for directory in paths:\n",

|

||||

" if not os.path.exists(directory):\n",

|

||||

" os.makedirs(directory)\n",

|

||||

"\n",

|

||||

"# link source to dest copying dest to source if not present first\n",

|

||||

"def link(srcDir, destDir, files):\n",

|

||||

" for file in files:\n",

|

||||

" source = os.path.join(srcDir, file)\n",

|

||||

" dest = os.path.join(destDir, file)\n",

|

||||

" if not os.path.exists(source):\n",

|

||||

" !cp -r \"$dest\" \"$source\"\n",

|

||||

" !rm -rf \"$dest\"\n",

|

||||

" !ln -fs \"$source\" \"$dest\"\n",

|

||||

"\n",

|

||||

"from google.colab import drive\n",

|

||||

"if UseGoogleDrive:\n",

|

||||

" drive.mount(\"/content/drive/\")\n",

|

||||

"else:\n",

|

||||

" create_paths([\n",

|

||||

" \"/content/drive/MyDrive\"\n",

|

||||

" ])\n",

|

||||

"\n",

|

||||

"models = {\n",

|

||||

" \"Nerys V2 6B\": ModelData(\"KoboldAI/OPT-6B-nerys-v2\"),\n",

|

||||

" \"Erebus 6B\": ModelData(\"KoboldAI/OPT-6.7B-Erebus\"),\n",

|

||||

" \"Skein 6B\": ModelData(\"KoboldAI/GPT-J-6B-Skein\"),\n",

|

||||

" \"Janeway 6B\": ModelData(\"KoboldAI/GPT-J-6B-Janeway\"),\n",

|

||||

" \"Adventure 6B\": ModelData(\"KoboldAI/GPT-J-6B-Adventure\"),\n",

|

||||

" \"UHlnbWFsaW9uIDZC\": ModelData(\"UHlnbWFsaW9uQUkvcHlnbWFsaW9uLTZi\"),\n",

|

||||

" \"UHlnbWFsaW9uIDZCIERldg==\": ModelData(\"UHlnbWFsaW9uQUkvcHlnbWFsaW9uLTZi\", revision = \"dev\"),\n",

|

||||

" \"Lit V2 6B\": ModelData(\"hakurei/litv2-6B-rev3\"),\n",

|

||||

" \"Lit 6B\": ModelData(\"hakurei/lit-6B\"),\n",

|

||||

" \"Shinen 6B\": ModelData(\"KoboldAI/GPT-J-6B-Shinen\"),\n",

|

||||

" \"Nerys 2.7B\": ModelData(\"KoboldAI/fairseq-dense-2.7B-Nerys\"),\n",

|

||||

" \"Erebus 2.7B\": ModelData(\"KoboldAI/OPT-2.7B-Erebus\"),\n",

|

||||

" \"Janeway 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-Janeway\"),\n",

|

||||

" \"Picard 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-Picard\"),\n",

|

||||

" \"AID 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-AID\"),\n",

|

||||

" \"Horni LN 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-Horni-LN\"),\n",

|

||||

" \"Horni 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-Horni\"),\n",

|

||||

" \"Shinen 2.7B\": ModelData(\"KoboldAI/GPT-Neo-2.7B-Shinen\"),\n",

|

||||

" \"Fairseq Dense 2.7B\": ModelData(\"KoboldAI/fairseq-dense-2.7B\"),\n",

|

||||

" \"OPT 2.7B\": ModelData(\"facebook/opt-2.7b\"),\n",

|

||||

" \"Neo 2.7B\": ModelData(\"EleutherAI/gpt-neo-2.7B\"),\n",

|

||||

" \"Pygway 6B\": ModelData(\"TehVenom/PPO_Pygway-6b\"),\n",

|

||||

" \"Nerybus 6.7B\": ModelData(\"KoboldAI/OPT-6.7B-Nerybus-Mix\"),\n",

|

||||

" \"Pygway v8p4\": ModelData(\"TehVenom/PPO_Pygway-V8p4_Dev-6b\"),\n",

|

||||

" \"PPO-Janeway 6B\": ModelData(\"TehVenom/PPO_Janeway-6b\"),\n",

|

||||

" \"PPO Shygmalion 6B\": ModelData(\"TehVenom/PPO_Shygmalion-6b\"),\n",

|

||||

" \"LLaMA 7B\": ModelData(\"decapoda-research/llama-7b-hf\"),\n",

|

||||

" \"Janin-GPTJ\": ModelData(\"digitous/Janin-GPTJ\"),\n",

|

||||

" \"Javelin-GPTJ\": ModelData(\"digitous/Javelin-GPTJ\"),\n",

|

||||

" \"Javelin-R\": ModelData(\"digitous/Javelin-R\"),\n",

|

||||

" \"Janin-R\": ModelData(\"digitous/Janin-R\"),\n",

|

||||

" \"Javalion-R\": ModelData(\"digitous/Javalion-R\"),\n",

|

||||

" \"Javalion-GPTJ\": ModelData(\"digitous/Javalion-GPTJ\"),\n",

|

||||

" \"Javelion-6B\": ModelData(\"Cohee/Javelion-6b\"),\n",

|

||||

" \"GPT-J-Pyg-PPO-6B\": ModelData(\"TehVenom/GPT-J-Pyg_PPO-6B\"),\n",

|

||||

" \"ppo_hh_pythia-6B\": ModelData(\"reciprocate/ppo_hh_pythia-6B\"),\n",

|

||||

" \"ppo_hh_gpt-j\": ModelData(\"reciprocate/ppo_hh_gpt-j\"),\n",

|

||||

" \"Alpaca-7B\": ModelData(\"chainyo/alpaca-lora-7b\"),\n",

|

||||

" \"LLaMA 4-bit\": ModelData(\"decapoda-research/llama-13b-hf-int4\"),\n",

|

||||

" \"GPT-J-Pyg_PPO-6B\": ModelData(\"TehVenom/GPT-J-Pyg_PPO-6B\"),\n",

|

||||

" \"GPT-J-Pyg_PPO-6B-Dev-V8p4\": ModelData(\"TehVenom/GPT-J-Pyg_PPO-6B-Dev-V8p4\"),\n",

|

||||

" \"Dolly_GPT-J-6b\": ModelData(\"TehVenom/Dolly_GPT-J-6b\"),\n",

|

||||

" \"Dolly_Pyg-6B\": ModelData(\"TehVenom/AvgMerge_Dolly-Pygmalion-6b\")\n",

|

||||

"}\n",

|

||||

"model = models.get(Model, None)\n",

|

||||

"\n",

|

||||

"if model is None:\n",

|

||||

" model = models.get(base64.b64encode(Model.encode(\"ascii\")).decode(\"ascii\"), ModelData(Model, Version))\n",

|

||||

"\n",

|

||||

"if StartKoboldAI:\n",

|

||||

" def downloadKobold():\n",

|

||||

" !wget https://koboldai.org/ckds && chmod +x ckds\n",

|

||||

" def initKobold():\n",

|

||||

" !./ckds --init only\n",

|

||||

"\n",

|

||||

" ii.addTask(\"Download KoboldAI\", downloadKobold)\n",

|

||||

" ii.addTask(\"Init KoboldAI\", initKobold)\n",

|

||||

" \n",

|

||||

"if use_google_drive:\n",

|

||||

" drive.mount('/content/drive/')\n",

|

||||

" if not os.path.exists(\"/content/drive/MyDrive/TavernAI/\"):\n",

|

||||

" os.mkdir(\"/content/drive/MyDrive/TavernAI/\")\n",

|

||||

" if not os.path.exists(\"/content/drive/MyDrive/TavernAI/characters/\"):\n",

|

||||

" os.mkdir(\"/content/drive/MyDrive/TavernAI/characters/\")\n",

|

||||

" if not os.path.exists(\"/content/drive/MyDrive/TavernAI/chats/\"):\n",

|

||||

" os.mkdir(\"/content/drive/MyDrive/TavernAI/chats/\")\n",

|

||||

"else:\n",

|

||||

" if not os.path.exists(\"/content/drive\"):\n",

|

||||

" os.mkdir(\"/content/drive\")\n",

|

||||

" if not os.path.exists(\"/content/drive/MyDrive/\"):\n",

|

||||

" os.mkdir(\"/content/drive/MyDrive/\")\n",

|

||||

"\n",

|

||||

"def copy_characters(use_google_drive=False):\n",

|

||||

" if not use_google_drive:\n",

|

||||

" return\n",

|

||||

" \n",

|

||||

" src_folder = \"/TavernAIColab/public/characters\"\n",

|

||||

" dst_folder = \"/content/drive/MyDrive/TavernAI/characters\"\n",

|

||||

"\n",

|

||||

" for filename in os.listdir(src_folder):\n",

|

||||

" src_file = os.path.join(src_folder, filename)\n",

|

||||

" dst_file = os.path.join(dst_folder, filename)\n",

|

||||

"\n",

|

||||

" if os.path.exists(dst_file):\n",

|

||||

" print(f\"{dst_file} already exists. Skipping...\")\n",

|

||||

" continue\n",

|

||||

"\n",

|

||||

" shutil.copy(src_file, dst_folder)\n",

|

||||

" print(f\"{src_file} copied to {dst_folder}\")\n",

|

||||

"Revision = \"\"\n",

|

||||

"\n",

|

||||

"if Model == \"Pygmalion 6B\":\n",

|

||||

" Model = \"PygmalionAI/pygmalion-6b\"\n",

|

||||

" path = \"\"\n",

|

||||

" download = \"\"\n",

|

||||

" Version = \"United\"\n",

|

||||

"elif Model == \"Pygmalion 6B Dev\":\n",

|

||||

" Model = \"PygmalionAI/pygmalion-6b\"\n",

|

||||

" Revision = \"--revision dev\"\n",

|

||||

" path = \"\"\n",

|

||||

" Version = \"United\"\n",

|

||||

" download = \"\"\n",

|

||||

" ii.run()\n",

|

||||

"\n",

|

||||

"kargs = [\"/content/ckds\"]\n",

|

||||

"if not ModelsFromDrive:\n",

|

||||

" kargs += [\"-x\", \"colab\", \"-l\", \"colab\"]\n",

|

||||

"if Provider == \"Localtunnel\":\n",

|

||||

" tunnel = \"--localtunnel yes\"\n",

|

||||

"else:\n",

|

||||

" tunnel = \"\"\n",

|

||||

" kargs += [\"--localtunnel\", \"yes\"]\n",

|

||||

"\n",

|

||||

"kargs += model.args()\n",

|

||||

"\n",

|

||||

"url = \"\"\n",

|

||||

"print(kargs)\n",

|

||||

"\n",

|

||||

"#Henk's KoboldAI script\n",

|

||||

"!wget https://koboldai.org/ckds && chmod +x ckds\n",

|

||||

"!./ckds --init only\n",

|

||||

"if Provider == \"Localtunnel\":\n",

|

||||

" p = subprocess.Popen(['/content/ckds', '--model', Model, '--localtunnel', 'yes'], stdout=subprocess.PIPE, stderr=subprocess.PIPE)\n",

|

||||

"else:\n",

|

||||

" p = subprocess.Popen(['/content/ckds', '--model', Model], stdout=subprocess.PIPE, stderr=subprocess.PIPE)\n",

|

||||

"if StartKoboldAI:\n",

|

||||

" p = subprocess.Popen(kargs, stdout=subprocess.PIPE, stderr=subprocess.PIPE)\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"#Do not repeat! Tricks performed by a professional!\n",

|

||||

"url = ''\n",

|

||||

"while True:\n",

|

||||

" prefix = \"KoboldAI has finished loading and is available at the following link\"\n",

|

||||

" urlprefix = f\"{prefix}: \"\n",

|

||||

" ui1prefix = f\"{prefix} for UI 1: \"\n",

|

||||

" while True:\n",

|

||||

" line = p.stdout.readline().decode().strip()\n",

|

||||

" if \"KoboldAI has finished loading and is available at the following link: \" in line:\n",

|

||||

" print(line)\n",

|

||||

" url = line.split(\"KoboldAI has finished loading and is available at the following link: \")[1]\n",

|

||||

" print(url)\n",

|

||||

" if urlprefix in line:\n",

|

||||

" url = line.split(urlprefix)[1]\n",

|

||||

" break\n",

|

||||

" if \"KoboldAI has finished loading and is available at the following link for UI 1: \" in line:\n",

|

||||

" print(line)\n",

|

||||

" url = line.split(\"KoboldAI has finished loading and is available at the following link for UI 1: \")[1]\n",

|

||||

" print(url)\n",

|

||||

" elif ui1prefix in line:\n",

|

||||

" url = line.split(ui1prefix)[1]\n",

|

||||

" break\n",

|

||||

" if not line:\n",

|

||||

" elif not line:\n",

|

||||

" break\n",

|

||||

" print(line)\n",

|

||||

" if \"INIT\" in line and \"Transformers\" in line:\n",

|

||||

" print(\"Model loading... (It will take 2 - 5 minutes)\")\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"#TavernAI\n",

|

||||

"%cd /\n",

|

||||

"!curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.37.2/install.sh | bash\n",

|

||||

"!nvm install 19.1.0\n",

|

||||

"!nvm use 19.1.0\n",

|

||||

"!node -v\n",

|

||||

"!git clone https://github.com/TavernAI/TavernAIColab\n",

|

||||

"copy_characters(use_google_drive)\n",

|

||||

"%cd TavernAIColab\n",

|

||||

"!npm install\n",

|

||||

"time.sleep(1)\n",

|

||||

"%env colab=2\n",

|

||||

"%env colaburl=$url\n",

|

||||

"if use_google_drive:\n",

|

||||

" %env googledrive=2\n",

|

||||

"!nohup node server.js &\n",

|

||||

"time.sleep(3)\n",

|

||||

"print('KoboldAI LINK:')\n",

|

||||

"print(url)\n",

|

||||

"print('')\n",

|

||||

"print('###TavernAI LINK###')\n",

|

||||

"!lt --port 8000\n"

|

||||

"\n",

|

||||

"# #TavernAI\n",

|

||||

"%cd /\n",

|

||||

"def setupNVM():\n",

|

||||

" !curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.37.2/install.sh | bash\n",

|

||||

"ii.addTask(\"Setup NVM\", setupNVM)\n",

|

||||

"\n",

|

||||

"def installNode():\n",

|

||||

" !nvm install 19.1.0\n",

|

||||

" !nvm use 19.1.0\n",

|

||||

"ii.addTask(\"Install node\", installNode)\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"# TavernAI extras\n",

|

||||

"extras_url = '(disabled)'\n",

|

||||

"params = []\n",

|

||||

"params.append('--cpu')\n",

|

||||

"ExtrasModules = []\n",

|

||||

"\n",

|

||||

"if (extras_enable_captioning):\n",

|

||||

" ExtrasModules.append('caption')\n",

|

||||

"if (extras_enable_memory):\n",

|

||||

" ExtrasModules.append('summarize')\n",

|

||||

"if (extras_enable_emotions):\n",

|

||||

" ExtrasModules.append('classify')\n",

|

||||

"\n",

|

||||

"params.append(f'--classification-model={Emotions_Model}')\n",

|

||||

"params.append(f'--summarization-model={Memory_Model}')\n",

|

||||

"params.append(f'--captioning-model={Captions_Model}')\n",

|

||||

"params.append(f'--enable-modules={\",\".join(ExtrasModules)}')\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"if UseExtrasExtensions:\n",

|

||||

" def cloneExtras():\n",

|

||||

" %cd /\n",

|

||||

" !git clone https://github.com/Cohee1207/TavernAI-extras\n",

|

||||

" ii.addTask('clone extras', cloneExtras)\n",

|

||||

"\n",

|

||||

" def installRequirements():\n",

|

||||

" %cd /TavernAI-extras\n",

|

||||

" !npm install -g localtunnel\n",

|

||||

" !pip install -r requirements.txt\n",

|

||||

" !pip install tensorflow==2.11\n",

|

||||

" ii.addTask('install requirements', installRequirements)\n",

|

||||

"\n",

|

||||

" def runServer():\n",

|

||||

" cmd = f\"python server.py {' '.join(params)}\"\n",

|

||||

" print(cmd)\n",

|

||||

" extras_process = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, cwd='/TavernAI-extras', shell=True)\n",

|

||||

" print('processId:', extras_process.pid)\n",

|

||||

" while True:\n",

|

||||

" line = extras_process.stdout.readline().decode().strip()\n",

|

||||

" if \"Running on \" in line:\n",

|

||||

" break\n",

|

||||

" if not line:\n",

|

||||

" print('breaking on line')\n",

|

||||

" break\n",

|

||||

" print(line)\n",

|

||||

" ii.addTask('run server', runServer)\n",

|

||||

"\n",

|

||||

" def extractUrl():\n",

|

||||

" global extras_url\n",

|

||||

" subprocess.call('nohup lt --port 5100 > ./extras.out 2> ./extras.err &', shell=True)\n",

|

||||

" print('Waiting for lt init...')\n",

|

||||

" time.sleep(5)\n",

|

||||

"\n",

|

||||

" while True:\n",

|

||||

" if (os.path.getsize('./extras.out') > 0):\n",

|

||||

" with open('./extras.out', 'r') as f:\n",

|

||||

" lines = f.readlines()\n",

|

||||

" for x in range(len(lines)):\n",

|

||||

" if ('your url is: ' in lines[x]):\n",

|

||||

" print('TavernAI Extensions URL:')\n",

|

||||

" extras_url = lines[x].split('your url is: ')[1]\n",

|

||||

" print(extras_url)\n",

|

||||

" break\n",

|

||||

" if (os.path.getsize('./extras.err') > 0):\n",

|

||||

" with open('./extras.err', 'r') as f:\n",

|

||||

" print(f.readlines())\n",

|

||||

" break\n",

|

||||

" ii.addTask('extract extras URL', extractUrl)\n",

|

||||

"\n",

|

||||

"ii.run()\n",

|

||||

"\n",

|

||||

"def cloneTavern():\n",

|

||||

" %cd /\n",

|

||||

" !git clone https://github.com/Cohee1207/SillyTavern\n",

|

||||

" %cd /SillyTavern\n",

|

||||

"ii.addTask(\"Clone SillyTavern\", cloneTavern)\n",

|

||||

"\n",

|

||||

"if UseGoogleDrive:\n",

|

||||

" %env googledrive=2\n",

|

||||

"\n",

|

||||

" def setupTavernPaths():\n",

|

||||

" %cd /SillyTavern\n",

|

||||

" tdrive = \"/content/drive/MyDrive/SillyTavern\"\n",

|

||||

" create_paths([\n",

|

||||

" tdrive,\n",

|

||||

" os.path.join(\"public\", \"groups\"),\n",

|

||||

" os.path.join(\"public\", \"group chats\")\n",

|

||||

" ])\n",

|

||||

" link(tdrive, \"public\", [\n",

|

||||

" \"settings.json\",\n",

|

||||

" \"backgrounds\",\n",

|

||||

" \"characters\",\n",

|

||||

" \"chats\",\n",

|

||||

" \"User Avatars\",\n",

|

||||

" \"css\",\n",

|

||||

" \"worlds\",\n",

|

||||

" \"group chats\",\n",

|

||||

" \"groups\",\n",

|

||||

" ])\n",

|

||||

" ii.addTask(\"Setup Tavern Paths\", setupTavernPaths)\n",

|

||||

"\n",

|

||||

"def installTavernDependencies():\n",

|

||||

" %cd /SillyTavern\n",

|

||||

" !npm install\n",

|

||||

" !npm install -g localtunnel\n",

|

||||

"ii.addTask(\"Install Tavern Dependencies\", installTavernDependencies)\n",

|

||||

"ii.run()\n",

|

||||

"\n",

|

||||

"%env colaburl=$url\n",

|

||||

"%env SILLY_TAVERN_PORT=5001\n",

|

||||

"print(\"KoboldAI LINK:\", url, '###Extensions API LINK###', extras_url, \"###SillyTavern LINK###\", sep=\"\\n\")\n",

|

||||

"p = subprocess.Popen([\"lt\", \"--port\", \"5001\"], stdout=subprocess.PIPE, stderr=subprocess.PIPE)\n",

|

||||

"print(p.stdout.readline().decode().strip())\n",

|

||||

"!node server.js"

|

||||

]

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"accelerator": "GPU",

|

||||

"colab": {

|

||||

"private_outputs": true,

|

||||

"provenance": []

|

||||

},

|

||||

"gpuClass": "standard",

|

||||

@@ -196,8 +417,7 @@

|

||||

},

|

||||

"language_info": {

|

||||

"name": "python"

|

||||

},

|

||||

"accelerator": "GPU"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 0

|

||||

|

||||

45

readme.md

@@ -1,11 +1,33 @@

|

||||

## Silly TavernAI mod. Based on fork of TavernAI 1.2.8

|

||||

### Brought to you by @SillyLossy and @RossAscends

|

||||

# SillyTavern

|

||||

## Based on fork of TavernAI 1.2.8

|

||||

### Brought to you by Cohee and RossAscends

|

||||

|

||||

Try on Colab (runs KoboldAI backend and TavernAI Extras server alongside): <a target="_blank" href="https://colab.research.google.com/github/SillyLossy/TavernAI-extras/blob/main/colab/GPU.ipynb">

|

||||

### What is SillyTavern or TavernAI?

|

||||

Tavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create.

|

||||

|

||||

SillyTavern is a fork of TavernAI 1.2.8 which is under more active development, and has added many major features. At this point they can be thought of as completely independent programs.

|

||||

|

||||

### What do I need other than Tavern?

|

||||

On its own Tavern is useless, as it's just a user interface. You need access to an AI system backend that can act as the roleplay character. There are various supported backends: OpenAI API (GPT), KoboldAI (either running locally or on Google Colab), and more.

|

||||

|

||||

### I'm new to all this. I just want to have a good time easily. What is the best AI backend to use?

|

||||

The most advanced/intelligent AI backend for roleplaying is OpenAI's paid GPT API. It's also among the easiest to use. Objectively, GPT is streets ahead of all other backends. However, OpenAI logs all your activity, and your account MAY be banned in the future if you violate their policies (e.g. on adult content), although there are no reports of anyone being banned yet.

|

||||

People who value privacy more tend to run a self-hosted AI backend like KoboldAI. Self-hosted backends do not log, but they are much less capable at roleplaying.

|

||||

|

||||

### Do I need a powerful PC to run Tavern?

|

||||

Since Tavern is only a user interface, it has tiny hardware requirements and will run on anything. It's the AI system backend that needs to be powerful.

|

||||

|

||||

### I want to try self-hosted easily. Got a Google Colab?

|

||||

|

||||

Try on Colab (runs KoboldAI backend and TavernAI Extras server alongside): <a target="_blank" href="https://colab.research.google.com/github/Cohee1207/SillyTavern/blob/main/colab/GPU.ipynb">

|

||||

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

|

||||

</a>

|

||||

|

||||

https://colab.research.google.com/github/SillyLossy/TavernAI-extras/blob/main/colab/GPU.ipynb

|

||||

https://colab.research.google.com/github/Cohee1207/SillyTavern/blob/main/colab/GPU.ipynb

|

||||

|

||||

If that didn't work, try the legacy link:

|

||||

|

||||

https://colab.research.google.com/github/Cohee1207/TavernAI-extras/blob/main/colab/GPU.ipynb

|

||||

|

||||

## Mobile support

|

||||

|

||||

@@ -13,7 +35,7 @@ https://colab.research.google.com/github/SillyLossy/TavernAI-extras/blob/main/co

|

||||

|

||||

https://rentry.org/TAI_Termux

|

||||

|

||||

## This branch includes:

|

||||

## This version includes

|

||||

* A heavily modified TavernAI 1.2.8 (more than 50% of code rewritten or optimized)

|

||||

* Swipes

|

||||

* Group chats

|

||||

@@ -23,21 +45,19 @@ https://rentry.org/TAI_Termux

|

||||

* [Oobabooga's TextGen WebUI](https://github.com/oobabooga/text-generation-webui) API connection

|

||||

* Soft prompts selector for KoboldAI

|

||||

* Prompt generation formatting tweaking

|

||||

* Extensibility support via [SillyLossy's TAI-extras](https://github.com/SillyLossy/TavernAI-extras) plugins

|

||||

* Extensibility support via [SillyLossy's TAI-extras](https://github.com/Cohee1207/TavernAI-extras) plugins

|

||||

* Character emotional expressions

|

||||

* Auto-Summary of the chat history

|

||||

* Sending images to chat, and the AI interpreting the content.

|

||||

|

||||

## UI Extensions 🚀

|

||||

| Name | Description | Required <a href="https://github.com/SillyLossy/TavernAI-extras#modules" target="_blank">Extra Modules</a> | Screenshot |

|

||||

| Name | Description | Required <a href="https://github.com/Cohee1207/TavernAI-extras#modules" target="_blank">Extra Modules</a> | Screenshot |

|

||||

| ---------------- | ---------------------------------| ---------------------------- | ---------- |

|

||||

| Image Captioning | Send a cute picture to your bot!<br><br>Picture select option will appear beside "Message send" button. | `caption` | <img src="https://user-images.githubusercontent.com/18619528/224161576-ddfc51cd-995e-44ec-bf2d-d2477d603f0c.png" style="max-width:200px" /> |

|

||||

| Character Expressions | See your character reacting to your messages!<br><br>**You need to provide your own character images!**<br><br>1. Create a folder in TavernAI called `public/characters/<name>`, where `<name>` is the name of your character.<br>2. For the base emotion classification model, put six PNG files there with the following names: `joy.png`, `anger.png`, `fear.png`, `love.png`, `sadness.png`, `surprise.png`. Other models may provide other options.<br>3. Images only display in desktop mode. | `classify` | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/223765089-34968217-6862-47e0-85da-7357370f8de6.png"> |

| Memory | Chatbot long-term memory simulation using automatic message context summarization. | `summarize` | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/223766279-88a46481-1fa6-40c5-9724-6cdd6f587233.png"> |

| Floating Prompt | Adds a string to your scenario after certain amount of messages you send. Usage ideas: reinforce certain events during roleplay. Thanks @Ali឵#2222 for suggesting me that! | None | <img style="max-width:200px" src="https://user-images.githubusercontent.com/18619528/224158641-c317313c-b87d-42b2-9702-ea4ba896593e.png" /> |

| D&D Dice | A set of 7 classic D&D dice for all your dice rolling needs.<br><br>*I used to roll the dice.<br>Feel the fear in my enemies' eyes* | None | <img style="max-width:200px" alt="image" src="https://user-images.githubusercontent.com/18619528/226199925-a066c6fc-745e-4a2b-9203-1cbffa481b14.png"> |

...and...

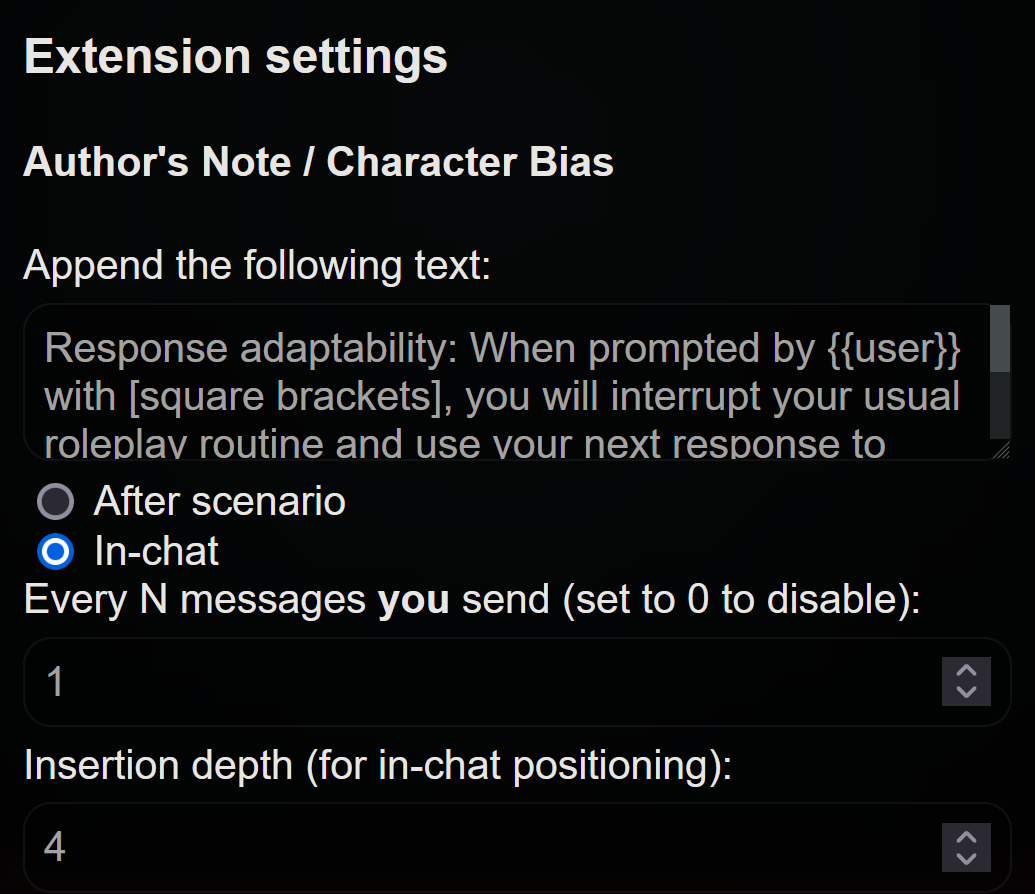

| Author's Note | Built-in extension that allows you to append notes that will be added to the context and steer the story and character in a specific direction. Because it's sent after the character description, it has a lot of weight. Thanks Ali឵#2222 for pitching the idea! | None |

## UI/CSS/Quality of Life tweaks by RossAscends

@@ -67,7 +87,7 @@ https://rentry.org/TAI_Termux

*NOTE: This branch is intended for local install purposes, and has not been tested on a colab or other cloud notebook service.*

1. install [NodeJS](nodejs.org)
1. install [NodeJS](https://nodejs.org/en)
2. download the zip from this github repo
3. unzip it into a folder of your choice
4. run start.bat via double clicking or in a command line.

@@ -109,8 +129,9 @@ Contact us on Discord: Cohee#1207 or RossAscends#1779

## License and credits
* TAI Base by Humi: Unknown license
* SillyLossy's TAI mod: Public domain
* Cohee's TAI mod: Public domain
* RossAscends' additions: Public domain
* Portions of CncAnon's TavernAITurbo mod: Unknown license
* Thanks Pygmalion University for being awesome testers and suggesting cool features!
* Thanks oobabooga for compiling presets for TextGen
* poe-api client adapted from https://github.com/ading2210/poe-api (GPL v3)

BIN readme/1.png
Binary file not shown. Size before: 905 KiB

BIN readme/2.png
Binary file not shown. Size before: 286 KiB

BIN readme/3.png
Binary file not shown. Size before: 26 KiB

BIN readme/4.png
Binary file not shown. Size before: 193 KiB

BIN readme/5.png
Binary file not shown. Size before: 187 KiB

server.js: 24 lines changed

@@ -22,7 +22,7 @@ const crypto = require('crypto');

 const ipaddr = require('ipaddr.js');

 const config = require(path.join(process.cwd(), './config.conf'));
-const server_port = config.port;
+const server_port = process.env.SILLY_TAVERN_PORT || config.port;
 const whitelist = config.whitelist;
 const whitelistMode = config.whitelistMode;
 const autorun = config.autorun;
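
Reviewer's sketch: the hunk above lets the `SILLY_TAVERN_PORT` environment variable take precedence over `config.port`. Environment variables are always strings, so the `||` form passes a string port through when the variable is set. The following is a minimal sketch of a stricter lookup, assuming a numeric port is wanted; `resolvePort` is a hypothetical helper and is not part of `server.js`.

```javascript
// Hypothetical helper, not part of server.js: illustrates the lookup order
// "environment variable first, config file second".
function resolvePort(env, config) {
    // Environment variables are strings, so parse before use.
    const fromEnv = Number.parseInt(env.SILLY_TAVERN_PORT, 10);
    // Fall back to the config value when the variable is unset or not numeric.
    return Number.isInteger(fromEnv) ? fromEnv : config.port;
}

console.log(resolvePort({ SILLY_TAVERN_PORT: '8123' }, { port: 8000 })); // 8123
console.log(resolvePort({}, { port: 8000 })); // 8000
```

In real use the first argument would be `process.env`; passing it in explicitly only keeps the sketch easy to try in isolation.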

|

||||

@@ -87,13 +87,9 @@ function humanizedISO8601DateTime() {

     return HumanizedDateTime;
 };

-var is_colab = false;
+var is_colab = process.env.colaburl !== undefined;
 var charactersPath = 'public/characters/';
 var chatsPath = 'public/chats/';
-if (is_colab && process.env.googledrive == 2) {
-    charactersPath = '/content/drive/MyDrive/TavernAI/characters/';
-    chatsPath = '/content/drive/MyDrive/TavernAI/chats/';
-}
 const jsonParser = express.json({ limit: '100mb' });
 const urlencodedParser = express.urlencoded({ extended: true, limit: '100mb' });
 const baseRequestArgs = { headers: { "Content-Type": "application/json" } };

|

||||

@@ -174,9 +170,11 @@ app.use((req, res, next) => {

     if (req.url.startsWith('/characters/') && is_colab && process.env.googledrive == 2) {

         const filePath = path.join(charactersPath, decodeURIComponent(req.url.substr('/characters'.length)));
+        console.log('req.url: ' + req.url);
+        console.log(filePath);
         fs.access(filePath, fs.constants.R_OK, (err) => {
             if (!err) {
-                res.sendFile(filePath);
+                res.sendFile(filePath, { root: __dirname });
             } else {
                 res.send('Character not found: ' + filePath);
                 //next();
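
Reviewer's sketch: the change above passes `{ root: __dirname }` to `res.sendFile`, which matters because Express expects an absolute path unless a `root` option is supplied. As an illustration only, the path handling could also be done up front by resolving the requested file to an absolute path and rejecting anything that escapes the characters directory. `resolveCharacterFile` and the `/srv/chars` directory are hypothetical and do not appear in `server.js`.

```javascript
const path = require('path');

// Hypothetical helper, not part of server.js: maps a request URL under
// /characters/ to an absolute path inside baseDir, or returns null when the
// decoded path would land outside baseDir.
function resolveCharacterFile(baseDir, requestUrl) {
    const relative = decodeURIComponent(requestUrl.slice('/characters/'.length));
    const root = path.resolve(baseDir);
    const absolute = path.resolve(root, relative);
    // Accept only paths that stay inside the root directory.
    const inside = absolute === root || absolute.startsWith(root + path.sep);
    return inside ? absolute : null;
}

console.log(resolveCharacterFile('/srv/chars', '/characters/Alice%20B.png'));
console.log(resolveCharacterFile('/srv/chars', '/characters/..%2Fsecret.txt')); // null
```

An absolute result can be handed to `res.sendFile` without a `root` option; whether the extra traversal check is wanted here is a design choice for the maintainers.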

|

||||

@@ -736,15 +734,12 @@ app.post("/getcharacters", jsonParser, function (request, response) {

 });
 app.post("/getbackgrounds", jsonParser, function (request, response) {
     var images = getImages("public/backgrounds");
-    if (is_colab === true) {
-        images = ['tavern.png'];
-    }
     response.send(JSON.stringify(images));

 });
 app.post("/iscolab", jsonParser, function (request, response) {
     let send_data = false;
-    if (process.env.colaburl !== undefined) {
+    if (is_colab) {
         send_data = String(process.env.colaburl).trim();
     }
     response.send({ colaburl: send_data });
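
Reviewer's sketch: after this change the `/iscolab` handler relies on `is_colab`, which an earlier hunk derives from the presence of the `colaburl` environment variable. The payload logic can be tried without Express using a small stand-in; `colabPayload` is a hypothetical function and the URL is a placeholder, neither comes from the repository.

```javascript
// Hypothetical stand-in for the /iscolab handler body. The environment is
// passed in as a plain object so the logic can run without a server.
function colabPayload(env) {
    // Mirrors the diff: Colab mode is inferred from colaburl being defined.
    const isColab = env.colaburl !== undefined;
    // The handler reports the trimmed URL, or false when not on Colab.
    const sendData = isColab ? String(env.colaburl).trim() : false;
    return { colaburl: sendData };
}

console.log(colabPayload({ colaburl: ' https://example.invalid/ \n' }));
console.log(colabPayload({}));
```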

|

||||

@@ -1999,15 +1994,10 @@ function getAsync(url, args) {

 // ** END **

 app.listen(server_port, (listen ? '0.0.0.0' : '127.0.0.1'), async function () {
-    if (process.env.colab !== undefined) {
-        if (process.env.colab == 2) {
-            is_colab = true;
-        }
-    }
     ensurePublicDirectoriesExist();
     await ensureThumbnailCache();
     console.log('Launching...');
-    if (autorun) open('http:127.0.0.1:' + server_port);
+    if (autorun) open('http://127.0.0.1:' + server_port);
     console.log('TavernAI started: http://127.0.0.1:' + server_port);
     if (fs.existsSync('public/characters/update.txt') && !is_colab) {
         convertStage1();
