Switch to lifenjoiner's ewma variant

This commit is contained in:

parent

c08852feb1

commit

034d3bd424

|

|

@ -3,17 +3,17 @@ package main

|

|||

import (

|

||||

"sync"

|

||||

|

||||

"github.com/jedisct1/ewma"

|

||||

"github.com/lifenjoiner/ewma"

|

||||

)

|

||||

|

||||

type QuestionSizeEstimator struct {

|

||||

sync.RWMutex

|

||||

minQuestionSize int

|

||||

ewma ewma.MovingAverage

|

||||

ewma *ewma.EWMA

|

||||

}

|

||||

|

||||

func NewQuestionSizeEstimator() QuestionSizeEstimator {

|

||||

return QuestionSizeEstimator{minQuestionSize: InitialMinQuestionSize, ewma: &ewma.SimpleEWMA{}}

|

||||

return QuestionSizeEstimator{minQuestionSize: InitialMinQuestionSize, ewma: &ewma.EWMA{}}

|

||||

}

|

||||

|

||||

func (questionSizeEstimator *QuestionSizeEstimator) MinQuestionSize() int {

|

||||

|

|

|

|||

|

|

@ -16,9 +16,9 @@ import (

|

|||

"time"

|

||||

|

||||

"github.com/jedisct1/dlog"

|

||||

"github.com/jedisct1/ewma"

|

||||

clocksmith "github.com/jedisct1/go-clocksmith"

|

||||

stamps "github.com/jedisct1/go-dnsstamps"

|

||||

"github.com/lifenjoiner/ewma"

|

||||

"github.com/miekg/dns"

|

||||

"golang.org/x/crypto/ed25519"

|

||||

)

|

||||

|

|

@ -46,7 +46,7 @@ type DOHClientCreds struct {

|

|||

type ServerInfo struct {

|

||||

DOHClientCreds DOHClientCreds

|

||||

lastActionTS time.Time

|

||||

rtt ewma.MovingAverage

|

||||

rtt *ewma.EWMA

|

||||

Name string

|

||||

HostName string

|

||||

UDPAddr *net.UDPAddr

|

||||

|

|

@ -194,7 +194,6 @@ func (serversInfo *ServersInfo) refreshServer(proxy *Proxy, name string, stamp s

|

|||

dlog.Fatalf("[%s] != [%s]", name, newServer.Name)

|

||||

}

|

||||

newServer.rtt = ewma.NewMovingAverage(RTTEwmaDecay)

|

||||

newServer.rtt.SetWarmupSamples(1)

|

||||

newServer.rtt.Set(float64(newServer.initialRtt))

|

||||

isNew = true

|

||||

serversInfo.Lock()

|

||||

|

|

|

|||

6

go.mod

6

go.mod

|

|

@ -10,7 +10,6 @@ require (

|

|||

github.com/hashicorp/golang-lru v0.5.4

|

||||

github.com/hectane/go-acl v0.0.0-20190604041725-da78bae5fc95

|

||||

github.com/jedisct1/dlog v0.0.0-20210927135244-3381aa132e7f

|

||||

github.com/jedisct1/ewma v1.2.1-0.20220220223311-a30af446ecb9

|

||||

github.com/jedisct1/go-clocksmith v0.0.0-20210101121932-da382b963868

|

||||

github.com/jedisct1/go-dnsstamps v0.0.0-20210810213811-61cc83d2a354

|

||||

github.com/jedisct1/go-hpke-compact v0.0.0-20210930135406-0763750339f0

|

||||

|

|

@ -18,6 +17,7 @@ require (

|

|||

github.com/jedisct1/xsecretbox v0.0.0-20210927135450-ebe41aef7bef

|

||||

github.com/k-sone/critbitgo v1.4.0

|

||||

github.com/kardianos/service v1.2.1

|

||||

github.com/lifenjoiner/ewma v0.0.0-20210320054258-4f227d7eb8a2

|

||||

github.com/miekg/dns v1.1.46

|

||||

github.com/powerman/check v1.6.0

|

||||

golang.org/x/crypto v0.0.0-20220214200702-86341886e292

|

||||

|

|

@ -152,9 +152,9 @@ require (

|

|||

github.com/ultraware/whitespace v0.0.4 // indirect

|

||||

github.com/uudashr/gocognit v1.0.1 // indirect

|

||||

github.com/yeya24/promlinter v0.1.0 // indirect

|

||||

golang.org/x/mod v0.5.1 // indirect

|

||||

golang.org/x/mod v0.4.2 // indirect

|

||||

golang.org/x/text v0.3.7 // indirect

|

||||

golang.org/x/tools v0.1.9 // indirect

|

||||

golang.org/x/tools v0.1.6-0.20210726203631-07bc1bf47fb2 // indirect

|

||||

golang.org/x/xerrors v0.0.0-20200804184101-5ec99f83aff1 // indirect

|

||||

google.golang.org/genproto v0.0.0-20200707001353-8e8330bf89df // indirect

|

||||

google.golang.org/grpc v1.38.0 // indirect

|

||||

|

|

|

|||

13

go.sum

13

go.sum

|

|

@ -351,8 +351,6 @@ github.com/inconshreveable/mousetrap v1.0.0 h1:Z8tu5sraLXCXIcARxBp/8cbvlwVa7Z1NH

|

|||

github.com/inconshreveable/mousetrap v1.0.0/go.mod h1:PxqpIevigyE2G7u3NXJIT2ANytuPF1OarO4DADm73n8=

|

||||

github.com/jedisct1/dlog v0.0.0-20210927135244-3381aa132e7f h1:XICcphytniQKdtd4FGrK0b1ERzS7FBvFtVUCReSppmU=

|

||||

github.com/jedisct1/dlog v0.0.0-20210927135244-3381aa132e7f/go.mod h1:35aII3PkLMvmc8daWy0vcZXDU+a40lJczHHTFRJmnvw=

|

||||

github.com/jedisct1/ewma v1.2.1-0.20220220223311-a30af446ecb9 h1:U5QPCoM1KkMJ9RfEfP0joKNwwwIHG1oP9RzjvQTuh98=

|

||||

github.com/jedisct1/ewma v1.2.1-0.20220220223311-a30af446ecb9/go.mod h1:qCWdft6DX9wxyNsUS+sxS44UkxE7eQnNtBttTWoW0cU=

|

||||

github.com/jedisct1/go-clocksmith v0.0.0-20210101121932-da382b963868 h1:QZ79mRbNwYYYmiVjyv+X0NKgYE6nyN1yo3gtEFdzpiE=

|

||||

github.com/jedisct1/go-clocksmith v0.0.0-20210101121932-da382b963868/go.mod h1:SAINchklztk2jcLWJ4bpNF4KnwDUSUTX+cJbspWC2Rw=

|

||||

github.com/jedisct1/go-dnsstamps v0.0.0-20210810213811-61cc83d2a354 h1:sIB9mDh2spQdh95jeXF2h9uSNtObbehD0YbDCzmqbM8=

|

||||

|

|

@ -424,6 +422,8 @@ github.com/letsencrypt/pkcs11key/v4 v4.0.0/go.mod h1:EFUvBDay26dErnNb70Nd0/VW3tJ

|

|||

github.com/lib/pq v1.0.0/go.mod h1:5WUZQaWbwv1U+lTReE5YruASi9Al49XbQIvNi/34Woo=

|

||||

github.com/lib/pq v1.8.0/go.mod h1:AlVN5x4E4T544tWzH6hKfbfQvm3HdbOxrmggDNAPY9o=

|

||||

github.com/lib/pq v1.9.0/go.mod h1:AlVN5x4E4T544tWzH6hKfbfQvm3HdbOxrmggDNAPY9o=

|

||||

github.com/lifenjoiner/ewma v0.0.0-20210320054258-4f227d7eb8a2 h1:eD3+F7WMC7wryFGBrLSyzoRqK+kR7nCT/9VT2E3XJzc=

|

||||

github.com/lifenjoiner/ewma v0.0.0-20210320054258-4f227d7eb8a2/go.mod h1:SJvYtJnDKXqTrIvyRocCJmuNuM3bUb4krn9UbZXj+tw=

|

||||

github.com/logrusorgru/aurora v0.0.0-20181002194514-a7b3b318ed4e/go.mod h1:7rIyQOR62GCctdiQpZ/zOJlFyk6y+94wXzv6RNZgaR4=

|

||||

github.com/magiconair/properties v1.8.0/go.mod h1:PppfXfuXeibc/6YijjN8zIbojt8czPbwD3XqdrwzmxQ=

|

||||

github.com/magiconair/properties v1.8.1 h1:ZC2Vc7/ZFkGmsVC9KvOjumD+G5lXy2RtTKyzRKO2BQ4=

|

||||

|

|

@ -693,7 +693,6 @@ github.com/yuin/goldmark v1.1.27/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9de

|

|||

github.com/yuin/goldmark v1.1.32/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9decYSb74=

|

||||

github.com/yuin/goldmark v1.2.1/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9decYSb74=

|

||||

github.com/yuin/goldmark v1.3.5/go.mod h1:mwnBkeHKe2W/ZEtQ+71ViKU8L12m81fl3OWwC1Zlc8k=

|

||||

github.com/yuin/goldmark v1.4.1/go.mod h1:mwnBkeHKe2W/ZEtQ+71ViKU8L12m81fl3OWwC1Zlc8k=

|

||||

go.etcd.io/bbolt v1.3.2/go.mod h1:IbVyRI1SCnLcuJnV2u8VeU0CEYM7e686BmAb1XKL+uU=

|

||||

go.etcd.io/bbolt v1.3.3/go.mod h1:IbVyRI1SCnLcuJnV2u8VeU0CEYM7e686BmAb1XKL+uU=

|

||||

go.etcd.io/bbolt v1.3.4/go.mod h1:G5EMThwa9y8QZGBClrRx5EY+Yw9kAhnjy3bSjsnlVTQ=

|

||||

|

|

@ -759,9 +758,8 @@ golang.org/x/mod v0.2.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

|||

golang.org/x/mod v0.3.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

golang.org/x/mod v0.4.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

golang.org/x/mod v0.4.1/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

golang.org/x/mod v0.4.2 h1:Gz96sIWK3OalVv/I/qNygP42zyoKp3xptRVCWRFEBvo=

|

||||

golang.org/x/mod v0.4.2/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

golang.org/x/mod v0.5.1 h1:OJxoQ/rynoF0dcCdI7cLPktw/hR2cueqYfjm43oqK38=

|

||||

golang.org/x/mod v0.5.1/go.mod h1:5OXOZSfqPIIbmVBIIKWRFfZjPR0E5r58TLhUjH0a2Ro=

|

||||

golang.org/x/net v0.0.0-20180724234803-3673e40ba225/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

|

||||

golang.org/x/net v0.0.0-20180826012351-8a410e7b638d/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

|

||||

golang.org/x/net v0.0.0-20180906233101-161cd47e91fd/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

|

||||

|

|

@ -804,7 +802,6 @@ golang.org/x/net v0.0.0-20201202161906-c7110b5ffcbb/go.mod h1:sp8m0HH+o8qH0wwXwY

|

|||

golang.org/x/net v0.0.0-20210226172049-e18ecbb05110/go.mod h1:m0MpNAwzfU5UDzcl9v0D8zg8gWTRqZa9RBIspLL5mdg=

|

||||

golang.org/x/net v0.0.0-20210405180319-a5a99cb37ef4/go.mod h1:p54w0d4576C0XHj96bSt6lcn1PtDYWL6XObtHCRCNQM=

|

||||

golang.org/x/net v0.0.0-20210726213435-c6fcb2dbf985/go.mod h1:9nx3DQGgdP8bBQD5qxJ1jj9UTztislL4KSBs9R2vV5Y=

|

||||

golang.org/x/net v0.0.0-20211015210444-4f30a5c0130f/go.mod h1:9nx3DQGgdP8bBQD5qxJ1jj9UTztislL4KSBs9R2vV5Y=

|

||||

golang.org/x/net v0.0.0-20211112202133-69e39bad7dc2/go.mod h1:9nx3DQGgdP8bBQD5qxJ1jj9UTztislL4KSBs9R2vV5Y=

|

||||

golang.org/x/net v0.0.0-20220127200216-cd36cc0744dd h1:O7DYs+zxREGLKzKoMQrtrEacpb0ZVXA5rIwylE2Xchk=

|

||||

golang.org/x/net v0.0.0-20220127200216-cd36cc0744dd/go.mod h1:CfG3xpIq0wQ8r1q4Su4UZFWDARRcnwPjda9FqA0JpMk=

|

||||

|

|

@ -884,7 +881,6 @@ golang.org/x/sys v0.0.0-20210615035016-665e8c7367d1/go.mod h1:oPkhp1MJrh7nUepCBc

|

|||

golang.org/x/sys v0.0.0-20210616094352-59db8d763f22/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

golang.org/x/sys v0.0.0-20210630005230-0f9fa26af87c/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

golang.org/x/sys v0.0.0-20210927094055-39ccf1dd6fa6/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

golang.org/x/sys v0.0.0-20211019181941-9d821ace8654/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

golang.org/x/sys v0.0.0-20211216021012-1d35b9e2eb4e/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

golang.org/x/sys v0.0.0-20220209214540-3681064d5158 h1:rm+CHSpPEEW2IsXUib1ThaHIjuBVZjxNgSKmBLFfD4c=

|

||||

golang.org/x/sys v0.0.0-20220209214540-3681064d5158/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

|

||||

|

|

@ -991,9 +987,8 @@ golang.org/x/tools v0.1.0/go.mod h1:xkSsbof2nBLbhDlRMhhhyNLN/zl3eTqcnHD5viDpcZ0=

|

|||

golang.org/x/tools v0.1.1/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=

|

||||

golang.org/x/tools v0.1.2/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=

|

||||

golang.org/x/tools v0.1.3/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=

|

||||

golang.org/x/tools v0.1.6-0.20210726203631-07bc1bf47fb2 h1:BonxutuHCTL0rBDnZlKjpGIQFTjyUVTexFOdWkB6Fg0=

|

||||

golang.org/x/tools v0.1.6-0.20210726203631-07bc1bf47fb2/go.mod h1:o0xws9oXOQQZyjljx8fwUC0k7L1pTE6eaCbjGeHmOkk=

|

||||

golang.org/x/tools v0.1.9 h1:j9KsMiaP1c3B0OTQGth0/k+miLGTgLsAFUCrF2vLcF8=

|

||||

golang.org/x/tools v0.1.9/go.mod h1:nABZi5QlRsZVlzPpHl034qft6wpY4eDcsTt5AaioBiU=

|

||||

golang.org/x/xerrors v0.0.0-20190717185122-a985d3407aa7/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

|

||||

golang.org/x/xerrors v0.0.0-20191011141410-1b5146add898/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

|

||||

golang.org/x/xerrors v0.0.0-20191204190536-9bdfabe68543/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

|

||||

|

|

|

|||

|

|

@ -1,3 +0,0 @@

|

|||

.DS_Store

|

||||

.*.sw?

|

||||

/coverage.txt

|

||||

|

|

@ -1,3 +0,0 @@

|

|||

{

|

||||

"settingsInheritedFrom": "VividCortex/whitesource-config@master"

|

||||

}

|

||||

|

|

@ -1,145 +0,0 @@

|

|||

# EWMA

|

||||

|

||||

[](https://godoc.org/github.com/VividCortex/ewma)

|

||||

|

||||

[](https://codecov.io/gh/VividCortex/ewma)

|

||||

|

||||

This repo provides Exponentially Weighted Moving Average algorithms, or EWMAs for short, [based on our

|

||||

Quantifying Abnormal Behavior talk](https://vividcortex.com/blog/2013/07/23/a-fast-go-library-for-exponential-moving-averages/).

|

||||

|

||||

### Exponentially Weighted Moving Average

|

||||

|

||||

An exponentially weighted moving average is a way to continuously compute a type of

|

||||

average for a series of numbers, as the numbers arrive. After a value in the series is

|

||||

added to the average, its weight in the average decreases exponentially over time. This

|

||||

biases the average towards more recent data. EWMAs are useful for several reasons, chiefly

|

||||

their inexpensive computational and memory cost, as well as the fact that they represent

|

||||

the recent central tendency of the series of values.

|

||||

|

||||

The EWMA algorithm requires a decay factor, alpha. The larger the alpha, the more the average

|

||||

is biased towards recent history. The alpha must be between 0 and 1, and is typically

|

||||

a fairly small number, such as 0.04. We will discuss the choice of alpha later.

|

||||

|

||||

The algorithm works thus, in pseudocode:

|

||||

|

||||

1. Multiply the next number in the series by alpha.

|

||||

2. Multiply the current value of the average by 1 minus alpha.

|

||||

3. Add the result of steps 1 and 2, and store it as the new current value of the average.

|

||||

4. Repeat for each number in the series.

|

||||

|

||||

There are special-case behaviors for how to initialize the current value, and these vary

|

||||

between implementations. One approach is to start with the first value in the series;

|

||||

another is to average the first 10 or so values in the series using an arithmetic average,

|

||||

and then begin the incremental updating of the average. Each method has pros and cons.

|

||||

|

||||

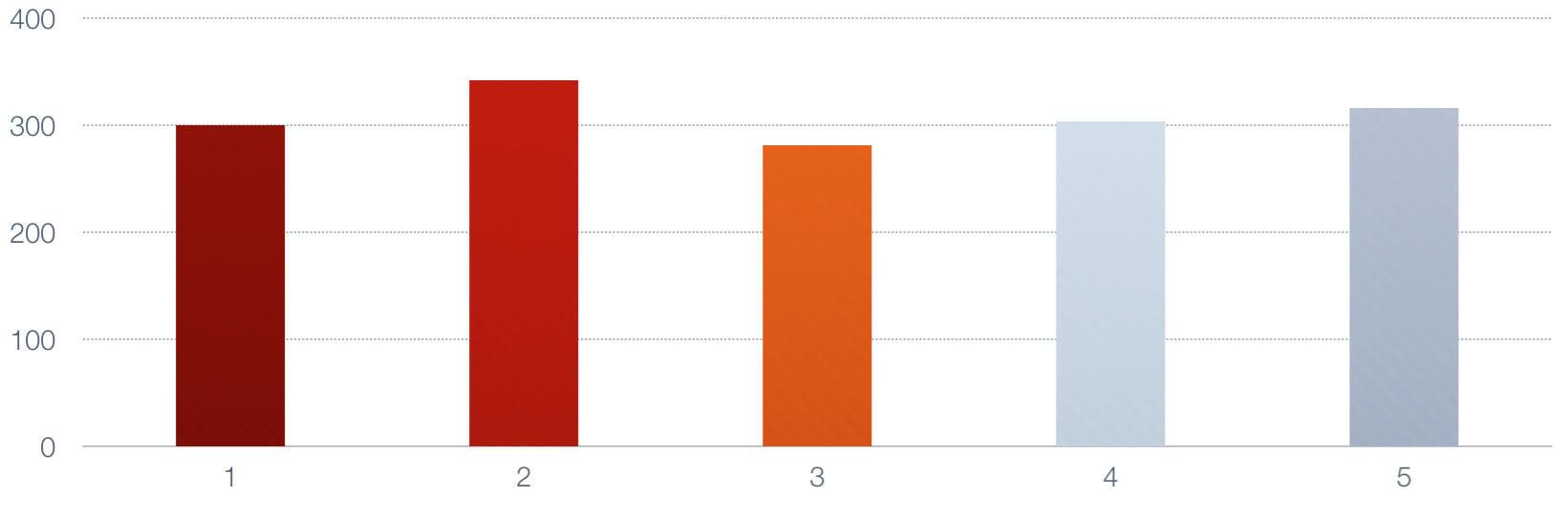

It may help to look at it pictorially. Suppose the series has five numbers, and we choose

|

||||

alpha to be 0.50 for simplicity. Here's the series, with numbers in the neighborhood of 300.

|

||||

|

||||

|

||||

|

||||

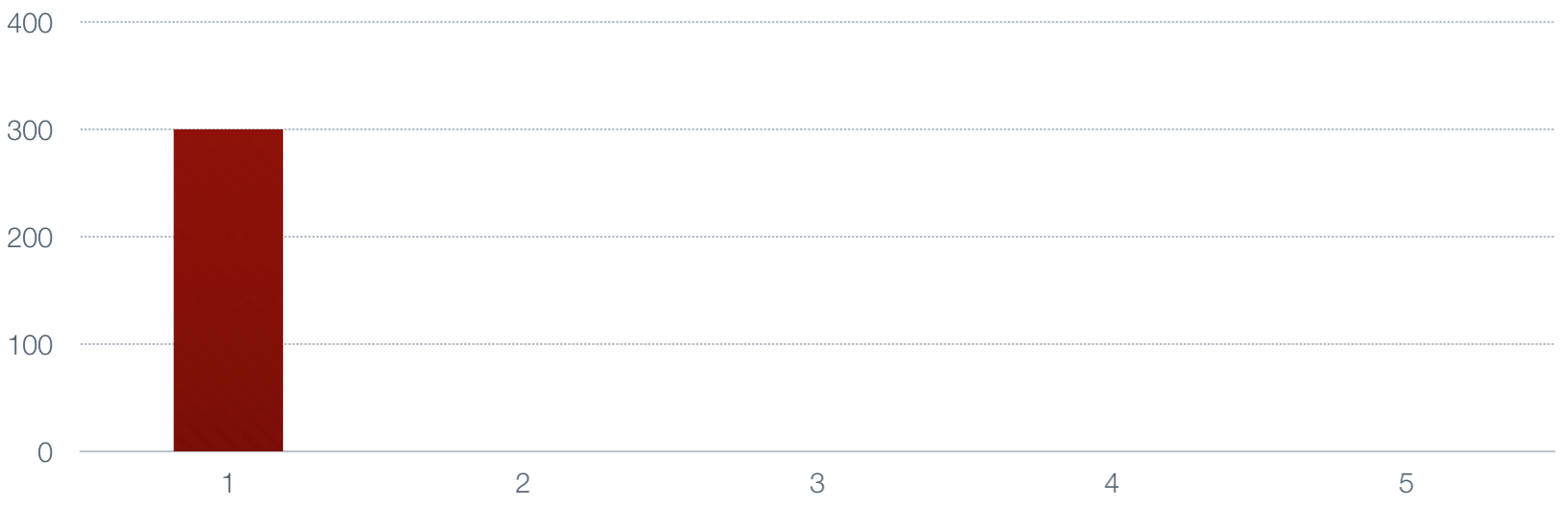

Now let's take the moving average of those numbers. First we set the average to the value

|

||||

of the first number.

|

||||

|

||||

|

||||

|

||||

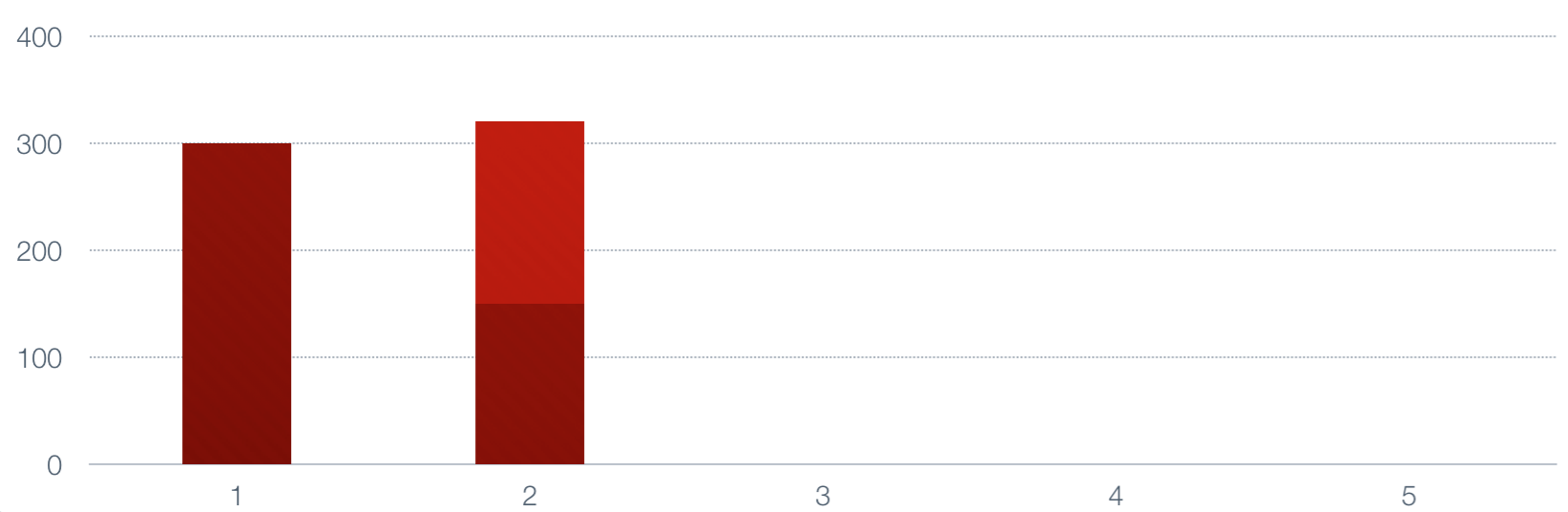

Next we multiply the next number by alpha, multiply the current value by 1-alpha, and add

|

||||

them to generate a new value.

|

||||

|

||||

|

||||

|

||||

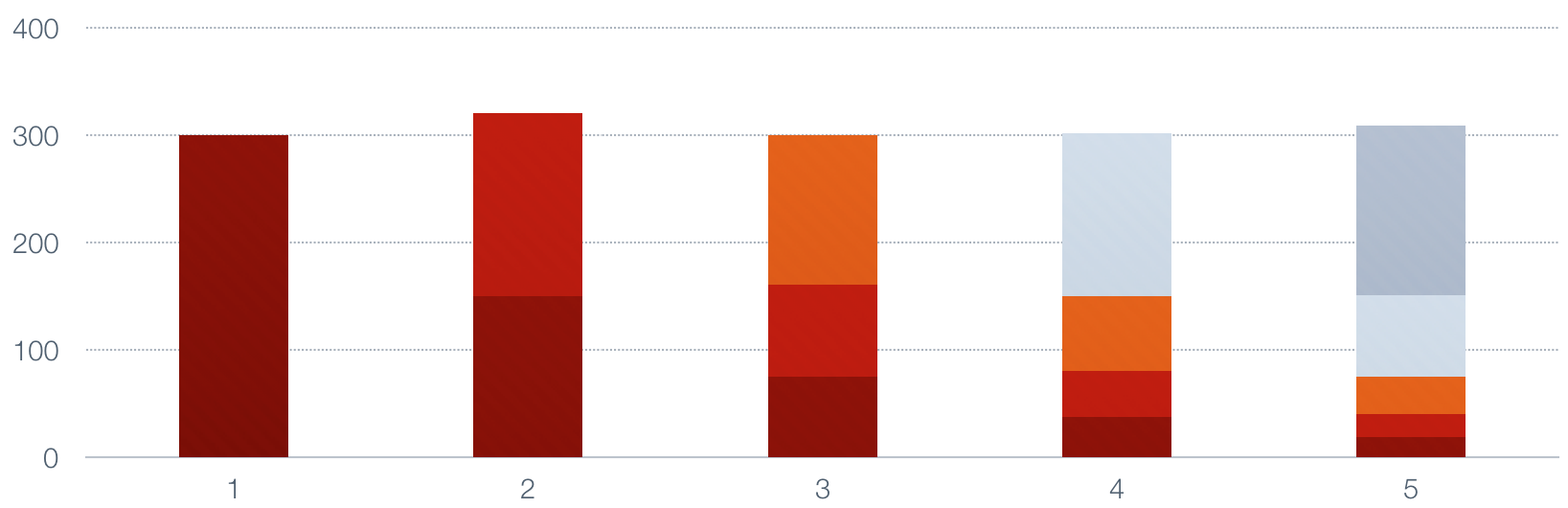

This continues until we are done.

|

||||

|

||||

|

||||

|

||||

Notice how each of the values in the series decays by half each time a new value

|

||||

is added, and the top of the bars in the lower portion of the image represents the

|

||||

size of the moving average. It is a smoothed, or low-pass, average of the original

|

||||

series.

|

||||

|

||||

For further reading, see [Exponentially weighted moving average](http://en.wikipedia.org/wiki/Moving_average#Exponential_moving_average) on wikipedia.

|

||||

|

||||

### Choosing Alpha

|

||||

|

||||

Consider a fixed-size sliding-window moving average (not an exponentially weighted moving average)

|

||||

that averages over the previous N samples. What is the average age of each sample? It is N/2.

|

||||

|

||||

Now suppose that you wish to construct a EWMA whose samples have the same average age. The formula

|

||||

to compute the alpha required for this is: alpha = 2/(N+1). Proof is in the book

|

||||

"Production and Operations Analysis" by Steven Nahmias.

|

||||

|

||||

So, for example, if you have a time-series with samples once per second, and you want to get the

|

||||

moving average over the previous minute, you should use an alpha of .032786885. This, by the way,

|

||||

is the constant alpha used for this repository's SimpleEWMA.

|

||||

|

||||

### Implementations

|

||||

|

||||

This repository contains two implementations of the EWMA algorithm, with different properties.

|

||||

|

||||

The implementations all conform to the MovingAverage interface, and the constructor returns

|

||||

that type.

|

||||

|

||||

Current implementations assume an implicit time interval of 1.0 between every sample added.

|

||||

That is, the passage of time is treated as though it's the same as the arrival of samples.

|

||||

If you need time-based decay when samples are not arriving precisely at set intervals, then

|

||||

this package will not support your needs at present.

|

||||

|

||||

#### SimpleEWMA

|

||||

|

||||

A SimpleEWMA is designed for low CPU and memory consumption. It **will** have different behavior than the VariableEWMA

|

||||

for multiple reasons. It has no warm-up period and it uses a constant

|

||||

decay. These properties let it use less memory. It will also behave

|

||||

differently when it's equal to zero, which is assumed to mean

|

||||

uninitialized, so if a value is likely to actually become zero over time,

|

||||

then any non-zero value will cause a sharp jump instead of a small change.

|

||||

|

||||

#### VariableEWMA

|

||||

|

||||

Unlike SimpleEWMA, this supports a custom age which must be stored, and thus uses more memory.

|

||||

It also has a "warmup" time when you start adding values to it. It will report a value of 0.0

|

||||

until you have added the required number of samples to it. It uses some memory to store the

|

||||

number of samples added to it. As a result it uses a little over twice the memory of SimpleEWMA.

|

||||

|

||||

## Usage

|

||||

|

||||

### API Documentation

|

||||

|

||||

View the GoDoc generated documentation [here](http://godoc.org/github.com/VividCortex/ewma).

|

||||

|

||||

```go

|

||||

package main

|

||||

|

||||

import "github.com/VividCortex/ewma"

|

||||

|

||||

func main() {

|

||||

samples := [100]float64{

|

||||

4599, 5711, 4746, 4621, 5037, 4218, 4925, 4281, 5207, 5203, 5594, 5149,

|

||||

}

|

||||

|

||||

e := ewma.NewMovingAverage() //=> Returns a SimpleEWMA if called without params

|

||||

a := ewma.NewMovingAverage(5) //=> returns a VariableEWMA with a decay of 2 / (5 + 1)

|

||||

|

||||

for _, f := range samples {

|

||||

e.Add(f)

|

||||

a.Add(f)

|

||||

}

|

||||

|

||||

e.Value() //=> 13.577404704631077

|

||||

a.Value() //=> 1.5806140565521463e-12

|

||||

}

|

||||

```

|

||||

|

||||

## Contributing

|

||||

|

||||

We only accept pull requests for minor fixes or improvements. This includes:

|

||||

|

||||

* Small bug fixes

|

||||

* Typos

|

||||

* Documentation or comments

|

||||

|

||||

Please open issues to discuss new features. Pull requests for new features will be rejected,

|

||||

so we recommend forking the repository and making changes in your fork for your use case.

|

||||

|

||||

## License

|

||||

|

||||

This repository is Copyright (c) 2013 VividCortex, Inc. All rights reserved.

|

||||

It is licensed under the MIT license. Please see the LICENSE file for applicable license terms.

|

||||

|

|

@ -1,6 +0,0 @@

|

|||

coverage:

|

||||

status:

|

||||

project:

|

||||

default:

|

||||

threshold: 15%

|

||||

patch: off

|

||||

|

|

@ -1,160 +0,0 @@

|

|||

// Package ewma implements exponentially weighted moving averages.

|

||||

package ewma

|

||||

|

||||

import "fmt"

|

||||

|

||||

// Copyright (c) 2013 VividCortex, Inc. All rights reserved.

|

||||

// Please see the LICENSE file for applicable license terms.

|

||||

|

||||

const (

|

||||

// By default, we average over a one-minute period, which means the average

|

||||

// age of the metrics in the period is 30 seconds.

|

||||

AVG_METRIC_AGE float64 = 30.0

|

||||

|

||||

// The formula for computing the decay factor from the average age comes

|

||||

// from "Production and Operations Analysis" by Steven Nahmias.

|

||||

DECAY float64 = 2 / (float64(AVG_METRIC_AGE) + 1)

|

||||

|

||||

// For best results, the moving average should not be initialized to the

|

||||

// samples it sees immediately. The book "Production and Operations

|

||||

// Analysis" by Steven Nahmias suggests initializing the moving average to

|

||||

// the mean of the first 10 samples. Until the VariableEwma has seen this

|

||||

// many samples, it is not "ready" to be queried for the value of the

|

||||

// moving average. This adds some memory cost.

|

||||

DEFAULT_WARMUP_SAMPLES uint8 = 10

|

||||

)

|

||||

|

||||

// MovingAverage is the interface that computes a moving average over a time-

|

||||

// series stream of numbers. The average may be over a window or exponentially

|

||||

// decaying.

|

||||

type MovingAverage interface {

|

||||

Add(float64)

|

||||

Value() float64

|

||||

Set(float64)

|

||||

SetWarmupSamples(uint8) error

|

||||

WarmupSamples() uint8

|

||||

}

|

||||

|

||||

// NewMovingAverage constructs a MovingAverage that computes an average with the

|

||||

// desired characteristics in the moving window or exponential decay. If no

|

||||

// age is given, it constructs a default exponentially weighted implementation

|

||||

// that consumes minimal memory. The age is related to the decay factor alpha

|

||||

// by the formula given for the DECAY constant. It signifies the average age

|

||||

// of the samples as time goes to infinity.

|

||||

func NewMovingAverage(age ...float64) MovingAverage {

|

||||

if len(age) == 0 || age[0] == AVG_METRIC_AGE {

|

||||

return new(SimpleEWMA)

|

||||

}

|

||||

return &VariableEWMA{

|

||||

decay: 2 / (age[0] + 1),

|

||||

warmup_samples: DEFAULT_WARMUP_SAMPLES,

|

||||

}

|

||||

}

|

||||

|

||||

// A SimpleEWMA represents the exponentially weighted moving average of a

|

||||

// series of numbers. It WILL have different behavior than the VariableEWMA

|

||||

// for multiple reasons. It has no warm-up period and it uses a constant

|

||||

// decay. These properties let it use less memory. It will also behave

|

||||

// differently when it's equal to zero, which is assumed to mean

|

||||

// uninitialized, so if a value is likely to actually become zero over time,

|

||||

// then any non-zero value will cause a sharp jump instead of a small change.

|

||||

// However, note that this takes a long time, and the value may just

|

||||

// decays to a stable value that's close to zero, but which won't be mistaken

|

||||

// for uninitialized. See http://play.golang.org/p/litxBDr_RC for example.

|

||||

type SimpleEWMA struct {

|

||||

// The current value of the average. After adding with Add(), this is

|

||||

// updated to reflect the average of all values seen thus far.

|

||||

value *float64

|

||||

}

|

||||

|

||||

// Add adds a value to the series and updates the moving average.

|

||||

func (e *SimpleEWMA) Add(value float64) {

|

||||

if e.value == nil { // this is a proxy for "uninitialized"

|

||||

e.value = &value

|

||||

} else {

|

||||

*e.value = (value * DECAY) + (e.Value() * (1 - DECAY))

|

||||

}

|

||||

}

|

||||

|

||||

// Value returns the current value of the moving average.

|

||||

func (e *SimpleEWMA) Value() float64 {

|

||||

if e.value == nil { // this is a proxy for "uninitialized"

|

||||

return 0

|

||||

} else {

|

||||

return *e.value

|

||||

}

|

||||

}

|

||||

|

||||

// Set sets the EWMA's value.

|

||||

func (e *SimpleEWMA) Set(value float64) {

|

||||

e.value = &value

|

||||

}

|

||||

|

||||

func (e *SimpleEWMA) WarmupSamples() uint8 {

|

||||

return 0

|

||||

}

|

||||

|

||||

func (e *SimpleEWMA) SetWarmupSamples(warmup_samples uint8) error {

|

||||

if warmup_samples > 0 {

|

||||

return fmt.Errorf("warmup samples must be 0")

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

// VariableEWMA represents the exponentially weighted moving average of a series of

|

||||

// numbers. Unlike SimpleEWMA, it supports a custom age, and thus uses more memory.

|

||||

type VariableEWMA struct {

|

||||

// The multiplier factor by which the previous samples decay.

|

||||

decay float64

|

||||

// The current value of the average.

|

||||

value float64

|

||||

// The number of samples added to this instance.

|

||||

count uint8

|

||||

// The number of warmup samples

|

||||

warmup_samples uint8

|

||||

}

|

||||

|

||||

// Add adds a value to the series and updates the moving average.

|

||||

func (e *VariableEWMA) Add(value float64) {

|

||||

switch {

|

||||

case e.count < e.warmup_samples:

|

||||

e.count++

|

||||

e.value += value

|

||||

case e.count == e.warmup_samples:

|

||||

e.count++

|

||||

e.value = e.value / float64(e.warmup_samples)

|

||||

e.value = (value * e.decay) + (e.value * (1 - e.decay))

|

||||

default:

|

||||

e.value = (value * e.decay) + (e.value * (1 - e.decay))

|

||||

}

|

||||

}

|

||||

|

||||

// Value returns the current value of the average, or 0.0 if the series hasn't

|

||||

// warmed up yet.

|

||||

func (e *VariableEWMA) Value() float64 {

|

||||

if e.count <= e.warmup_samples {

|

||||

return 0.0

|

||||

}

|

||||

|

||||

return e.value

|

||||

}

|

||||

|

||||

// Set sets the EWMA's value.

|

||||

func (e *VariableEWMA) Set(value float64) {

|

||||

e.value = value

|

||||

if e.count <= e.warmup_samples {

|

||||

e.count = e.warmup_samples + 1

|

||||

}

|

||||

}

|

||||

|

||||

func (e *VariableEWMA) SetWarmupSamples(warmup_samples uint8) error {

|

||||

if warmup_samples < 1 {

|

||||

return fmt.Errorf("warmup samples must be between 1 and 255")

|

||||

}

|

||||

e.warmup_samples = warmup_samples

|

||||

return nil

|

||||

}

|

||||

|

||||

func (e *VariableEWMA) WarmupSamples() uint8 {

|

||||

return e.warmup_samples

|

||||

}

|

||||

|

|

@ -0,0 +1,15 @@

|

|||

# Binaries for programs and plugins

|

||||

*.exe

|

||||

*.exe~

|

||||

*.dll

|

||||

*.so

|

||||

*.dylib

|

||||

|

||||

# Test binary, built with `go test -c`

|

||||

*.test

|

||||

|

||||

# Output of the go coverage tool, specifically when used with LiteIDE

|

||||

*.out

|

||||

|

||||

# Dependency directories (remove the comment below to include it)

|

||||

# vendor/

|

||||

12

vendor/github.com/jedisct1/ewma/LICENSE → vendor/github.com/lifenjoiner/ewma/LICENSE

generated

vendored

12

vendor/github.com/jedisct1/ewma/LICENSE → vendor/github.com/lifenjoiner/ewma/LICENSE

generated

vendored

|

|

@ -1,6 +1,6 @@

|

|||

The MIT License

|

||||

MIT License

|

||||

|

||||

Copyright (c) 2013 VividCortex

|

||||

Copyright (c) 2021 lifenjoiner

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

|

|

@ -9,13 +9,13 @@ to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

|||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in

|

||||

all copies or substantial portions of the Software.

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

||||

THE SOFTWARE.

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

# EWMA

|

||||

|

||||

EWMA: Exponentially Weighted Moving Average algorithms

|

||||

|

||||

This is a variant of [EWMA](https://github.com/VividCortex/ewma).

|

||||

|

||||

### Variant

|

||||

|

||||

During the "warmup" stage, it uses a just-in-time `alpha` to get a more reasonable average.

|

||||

|

||||

Just one form.

|

||||

|

||||

[EWMA Comparisons](https://github.com/lifenjoiner/ewma/issues/1)

|

||||

|

||||

### Implementation

|

||||

|

||||

https://github.com/lifenjoiner/ewma

|

||||

|

|

@ -0,0 +1,44 @@

|

|||

// Package ewma: exponentially weighted moving averages

|

||||

package ewma

|

||||

|

||||

// New EWMA by moving window size.

|

||||

func NewMovingAverage(slide int) *EWMA {

|

||||

return &EWMA{

|

||||

slide: slide,

|

||||

}

|

||||

}

|

||||

|

||||

type EWMA struct {

|

||||

// Too big slide is meaningless.

|

||||

slide int

|

||||

// Count before warmed up.

|

||||

count int

|

||||

// Decay by slide size.

|

||||

decay float64

|

||||

// The average.

|

||||

value float64

|

||||

}

|

||||

|

||||

// Add a value to the series and update the moving average.

|

||||

func (a *EWMA) Add(value float64) {

|

||||

switch {

|

||||

case a.count <= a.slide:

|

||||

a.count++

|

||||

a.decay = 2 / float64(a.count + 1)

|

||||

a.value = a.value * (1 - a.decay) + value * a.decay

|

||||

default:

|

||||

a.value = a.value * (1 - a.decay) + value * a.decay

|

||||

}

|

||||

}

|

||||

|

||||

// Return the current EWMA value.

|

||||

func (a *EWMA) Value() float64 {

|

||||

return a.value

|

||||

}

|

||||

|

||||

// Set the EWMA value for continuing.

|

||||

func (a *EWMA) Set(value float64) {

|

||||

a.value = value

|

||||

a.decay = 2 / float64(a.slide + 1)

|

||||

a.count = a.slide + 1

|

||||

}

|

||||

|

|

@ -194,15 +194,12 @@ func (x *FileSyntax) updateLine(line *Line, tokens ...string) {

|

|||

line.Token = tokens

|

||||

}

|

||||

|

||||

// markRemoved modifies line so that it (and its end-of-line comment, if any)

|

||||

// will be dropped by (*FileSyntax).Cleanup.

|

||||

func (line *Line) markRemoved() {

|

||||

func (x *FileSyntax) removeLine(line *Line) {

|

||||

line.Token = nil

|

||||

line.Comments.Suffix = nil

|

||||

}

|

||||

|

||||

// Cleanup cleans up the file syntax x after any edit operations.

|

||||

// To avoid quadratic behavior, (*Line).markRemoved marks the line as dead

|

||||

// To avoid quadratic behavior, removeLine marks the line as dead

|

||||

// by setting line.Token = nil but does not remove it from the slice

|

||||

// in which it appears. After edits have all been indicated,

|

||||

// calling Cleanup cleans out the dead lines.

|

||||

|

|

|

|||

|

|

@ -47,9 +47,8 @@ type File struct {

|

|||

|

||||

// A Module is the module statement.

|

||||

type Module struct {

|

||||

Mod module.Version

|

||||

Deprecated string

|

||||

Syntax *Line

|

||||

Mod module.Version

|

||||

Syntax *Line

|

||||

}

|

||||

|

||||

// A Go is the go statement.

|

||||

|

|

@ -58,6 +57,13 @@ type Go struct {

|

|||

Syntax *Line

|

||||

}

|

||||

|

||||

// A Require is a single require statement.

|

||||

type Require struct {

|

||||

Mod module.Version

|

||||

Indirect bool // has "// indirect" comment

|

||||

Syntax *Line

|

||||

}

|

||||

|

||||

// An Exclude is a single exclude statement.

|

||||

type Exclude struct {

|

||||

Mod module.Version

|

||||

|

|

@ -86,93 +92,6 @@ type VersionInterval struct {

|

|||

Low, High string

|

||||

}

|

||||

|

||||

// A Require is a single require statement.

|

||||

type Require struct {

|

||||

Mod module.Version

|

||||

Indirect bool // has "// indirect" comment

|

||||

Syntax *Line

|

||||

}

|

||||

|

||||

func (r *Require) markRemoved() {

|

||||

r.Syntax.markRemoved()

|

||||

*r = Require{}

|

||||

}

|

||||

|

||||

func (r *Require) setVersion(v string) {

|

||||

r.Mod.Version = v

|

||||

|

||||

if line := r.Syntax; len(line.Token) > 0 {

|

||||

if line.InBlock {

|

||||

// If the line is preceded by an empty line, remove it; see

|

||||

// https://golang.org/issue/33779.

|

||||

if len(line.Comments.Before) == 1 && len(line.Comments.Before[0].Token) == 0 {

|

||||

line.Comments.Before = line.Comments.Before[:0]

|

||||

}

|

||||

if len(line.Token) >= 2 { // example.com v1.2.3

|

||||

line.Token[1] = v

|

||||

}

|

||||

} else {

|

||||

if len(line.Token) >= 3 { // require example.com v1.2.3

|

||||

line.Token[2] = v

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

// setIndirect sets line to have (or not have) a "// indirect" comment.

|

||||

func (r *Require) setIndirect(indirect bool) {

|

||||

r.Indirect = indirect

|

||||

line := r.Syntax

|

||||

if isIndirect(line) == indirect {

|

||||

return

|

||||

}

|

||||

if indirect {

|

||||

// Adding comment.

|

||||

if len(line.Suffix) == 0 {

|

||||

// New comment.

|

||||

line.Suffix = []Comment{{Token: "// indirect", Suffix: true}}

|

||||

return

|

||||

}

|

||||

|

||||

com := &line.Suffix[0]

|

||||

text := strings.TrimSpace(strings.TrimPrefix(com.Token, string(slashSlash)))

|

||||

if text == "" {

|

||||

// Empty comment.

|

||||

com.Token = "// indirect"

|

||||

return

|

||||

}

|

||||

|

||||

// Insert at beginning of existing comment.

|

||||

com.Token = "// indirect; " + text

|

||||

return

|

||||

}

|

||||

|

||||

// Removing comment.

|

||||

f := strings.TrimSpace(strings.TrimPrefix(line.Suffix[0].Token, string(slashSlash)))

|

||||

if f == "indirect" {

|

||||

// Remove whole comment.

|

||||

line.Suffix = nil

|

||||

return

|

||||

}

|

||||

|

||||

// Remove comment prefix.

|

||||

com := &line.Suffix[0]

|

||||

i := strings.Index(com.Token, "indirect;")

|

||||

com.Token = "//" + com.Token[i+len("indirect;"):]

|

||||

}

|

||||

|

||||

// isIndirect reports whether line has a "// indirect" comment,

|

||||

// meaning it is in go.mod only for its effect on indirect dependencies,

|

||||

// so that it can be dropped entirely once the effective version of the

|

||||

// indirect dependency reaches the given minimum version.

|

||||

func isIndirect(line *Line) bool {

|

||||

if len(line.Suffix) == 0 {

|

||||

return false

|

||||

}

|

||||

f := strings.Fields(strings.TrimPrefix(line.Suffix[0].Token, string(slashSlash)))

|

||||

return (len(f) == 1 && f[0] == "indirect" || len(f) > 1 && f[0] == "indirect;")

|

||||

}

|

||||

|

||||

func (f *File) AddModuleStmt(path string) error {

|

||||

if f.Syntax == nil {

|

||||

f.Syntax = new(FileSyntax)

|

||||

|

|

@ -212,15 +131,8 @@ var dontFixRetract VersionFixer = func(_, vers string) (string, error) {

|

|||

return vers, nil

|

||||

}

|

||||

|

||||

// Parse parses and returns a go.mod file.

|

||||

//

|

||||

// file is the name of the file, used in positions and errors.

|

||||

//

|

||||

// data is the content of the file.

|

||||

//

|

||||

// fix is an optional function that canonicalizes module versions.

|

||||

// If fix is nil, all module versions must be canonical (module.CanonicalVersion

|

||||

// must return the same string).

|

||||

// Parse parses the data, reported in errors as being from file,

|

||||

// into a File struct. It applies fix, if non-nil, to canonicalize all module versions found.

|

||||

func Parse(file string, data []byte, fix VersionFixer) (*File, error) {

|

||||

return parseToFile(file, data, fix, true)

|

||||

}

|

||||

|

|

@ -297,7 +209,6 @@ func parseToFile(file string, data []byte, fix VersionFixer, strict bool) (parse

|

|||

}

|

||||

|

||||

var GoVersionRE = lazyregexp.New(`^([1-9][0-9]*)\.(0|[1-9][0-9]*)$`)

|

||||

var laxGoVersionRE = lazyregexp.New(`^v?(([1-9][0-9]*)\.(0|[1-9][0-9]*))([^0-9].*)$`)

|

||||

|

||||

func (f *File) add(errs *ErrorList, block *LineBlock, line *Line, verb string, args []string, fix VersionFixer, strict bool) {

|

||||

// If strict is false, this module is a dependency.

|

||||

|

|

@ -348,17 +259,8 @@ func (f *File) add(errs *ErrorList, block *LineBlock, line *Line, verb string, a

|

|||

errorf("go directive expects exactly one argument")

|

||||

return

|

||||

} else if !GoVersionRE.MatchString(args[0]) {

|

||||

fixed := false

|

||||

if !strict {

|

||||

if m := laxGoVersionRE.FindStringSubmatch(args[0]); m != nil {

|

||||

args[0] = m[1]

|

||||

fixed = true

|

||||

}

|

||||

}

|

||||

if !fixed {

|

||||

errorf("invalid go version '%s': must match format 1.23", args[0])

|

||||

return

|

||||

}

|

||||

errorf("invalid go version '%s': must match format 1.23", args[0])

|

||||

return

|

||||

}

|

||||

|

||||

f.Go = &Go{Syntax: line}

|

||||

|

|

@ -369,11 +271,7 @@ func (f *File) add(errs *ErrorList, block *LineBlock, line *Line, verb string, a

|

|||

errorf("repeated module statement")

|

||||

return

|

||||

}

|

||||

deprecated := parseDeprecation(block, line)

|

||||

f.Module = &Module{

|

||||

Syntax: line,

|

||||

Deprecated: deprecated,

|

||||

}

|

||||

f.Module = &Module{Syntax: line}

|

||||

if len(args) != 1 {

|

||||

errorf("usage: module module/path")

|

||||

return

|

||||

|

|

@ -487,7 +385,7 @@ func (f *File) add(errs *ErrorList, block *LineBlock, line *Line, verb string, a

|

|||

})

|

||||

|

||||

case "retract":

|

||||

rationale := parseDirectiveComment(block, line)

|

||||

rationale := parseRetractRationale(block, line)

|

||||

vi, err := parseVersionInterval(verb, "", &args, dontFixRetract)

|

||||

if err != nil {

|

||||

if strict {

|

||||

|

|

@ -556,6 +454,58 @@ func (f *File) fixRetract(fix VersionFixer, errs *ErrorList) {

|

|||

}

|

||||

}

|

||||

|

||||

// isIndirect reports whether line has a "// indirect" comment,

|

||||

// meaning it is in go.mod only for its effect on indirect dependencies,

|

||||

// so that it can be dropped entirely once the effective version of the

|

||||

// indirect dependency reaches the given minimum version.

|

||||

func isIndirect(line *Line) bool {

|

||||

if len(line.Suffix) == 0 {

|

||||

return false

|

||||

}

|

||||

f := strings.Fields(strings.TrimPrefix(line.Suffix[0].Token, string(slashSlash)))

|

||||

return (len(f) == 1 && f[0] == "indirect" || len(f) > 1 && f[0] == "indirect;")

|

||||

}

|

||||

|

||||

// setIndirect sets line to have (or not have) a "// indirect" comment.

|

||||

func setIndirect(line *Line, indirect bool) {

|

||||

if isIndirect(line) == indirect {

|

||||

return

|

||||

}

|

||||

if indirect {

|

||||

// Adding comment.

|

||||

if len(line.Suffix) == 0 {

|

||||

// New comment.

|

||||

line.Suffix = []Comment{{Token: "// indirect", Suffix: true}}

|

||||

return

|

||||

}

|

||||

|

||||

com := &line.Suffix[0]

|

||||

text := strings.TrimSpace(strings.TrimPrefix(com.Token, string(slashSlash)))

|

||||

if text == "" {

|

||||

// Empty comment.

|

||||

com.Token = "// indirect"

|

||||

return

|

||||

}

|

||||

|

||||

// Insert at beginning of existing comment.

|

||||

com.Token = "// indirect; " + text

|

||||

return

|

||||

}

|

||||

|

||||

// Removing comment.

|

||||

f := strings.Fields(line.Suffix[0].Token)

|

||||

if len(f) == 2 {

|

||||

// Remove whole comment.

|

||||

line.Suffix = nil

|

||||

return

|

||||

}

|

||||

|

||||

// Remove comment prefix.

|

||||

com := &line.Suffix[0]

|

||||

i := strings.Index(com.Token, "indirect;")

|

||||

com.Token = "//" + com.Token[i+len("indirect;"):]

|

||||

}

|

||||

|

||||

// IsDirectoryPath reports whether the given path should be interpreted

|

||||

// as a directory path. Just like on the go command line, relative paths

|

||||

// and rooted paths are directory paths; the rest are module paths.

|

||||

|

|

@ -662,29 +612,10 @@ func parseString(s *string) (string, error) {

|

|||

return t, nil

|

||||

}

|

||||

|

||||

var deprecatedRE = lazyregexp.New(`(?s)(?:^|\n\n)Deprecated: *(.*?)(?:$|\n\n)`)

|

||||

|

||||

// parseDeprecation extracts the text of comments on a "module" directive and

|

||||

// extracts a deprecation message from that.

|

||||

//

|

||||

// A deprecation message is contained in a paragraph within a block of comments

|

||||

// that starts with "Deprecated:" (case sensitive). The message runs until the

|

||||

// end of the paragraph and does not include the "Deprecated:" prefix. If the

|

||||

// comment block has multiple paragraphs that start with "Deprecated:",

|

||||

// parseDeprecation returns the message from the first.

|

||||

func parseDeprecation(block *LineBlock, line *Line) string {

|

||||

text := parseDirectiveComment(block, line)

|

||||

m := deprecatedRE.FindStringSubmatch(text)

|

||||

if m == nil {

|

||||

return ""

|

||||

}

|

||||

return m[1]

|

||||

}

|

||||

|

||||

// parseDirectiveComment extracts the text of comments on a directive.

|

||||

// If the directive's line does not have comments and is part of a block that

|

||||

// does have comments, the block's comments are used.

|

||||

func parseDirectiveComment(block *LineBlock, line *Line) string {

|

||||

// parseRetractRationale extracts the rationale for a retract directive from the

|

||||

// surrounding comments. If the line does not have comments and is part of a

|

||||

// block that does have comments, the block's comments are used.

|

||||

func parseRetractRationale(block *LineBlock, line *Line) string {

|

||||

comments := line.Comment()

|

||||

if block != nil && len(comments.Before) == 0 && len(comments.Suffix) == 0 {

|

||||

comments = block.Comment()

|

||||

|

|

@ -863,12 +794,6 @@ func (f *File) AddGoStmt(version string) error {

|

|||

return nil

|

||||

}

|

||||

|

||||

// AddRequire sets the first require line for path to version vers,

|

||||

// preserving any existing comments for that line and removing all

|

||||

// other lines for path.

|

||||

//

|

||||

// If no line currently exists for path, AddRequire adds a new line

|

||||

// at the end of the last require block.

|

||||

func (f *File) AddRequire(path, vers string) error {

|

||||

need := true

|

||||

for _, r := range f.Require {

|

||||

|

|

@ -878,7 +803,7 @@ func (f *File) AddRequire(path, vers string) error {

|

|||

f.Syntax.updateLine(r.Syntax, "require", AutoQuote(path), vers)

|

||||

need = false

|

||||

} else {

|

||||

r.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(r.Syntax)

|

||||

*r = Require{}

|

||||

}

|

||||

}

|

||||

|

|

@ -890,290 +815,77 @@ func (f *File) AddRequire(path, vers string) error {

|

|||

return nil

|

||||

}

|

||||

|

||||

// AddNewRequire adds a new require line for path at version vers at the end of

|

||||

// the last require block, regardless of any existing require lines for path.

|

||||

func (f *File) AddNewRequire(path, vers string, indirect bool) {

|

||||

line := f.Syntax.addLine(nil, "require", AutoQuote(path), vers)

|

||||

r := &Require{

|

||||

Mod: module.Version{Path: path, Version: vers},

|

||||

Syntax: line,

|

||||

}

|

||||

r.setIndirect(indirect)

|

||||

f.Require = append(f.Require, r)

|

||||

setIndirect(line, indirect)

|

||||

f.Require = append(f.Require, &Require{module.Version{Path: path, Version: vers}, indirect, line})

|

||||

}

|

||||

|

||||

// SetRequire updates the requirements of f to contain exactly req, preserving

|

||||

// the existing block structure and line comment contents (except for 'indirect'

|

||||

// markings) for the first requirement on each named module path.

|

||||

//

|

||||

// The Syntax field is ignored for the requirements in req.

|

||||

//

|

||||

// Any requirements not already present in the file are added to the block

|

||||

// containing the last require line.

|

||||

//

|

||||

// The requirements in req must specify at most one distinct version for each

|

||||

// module path.

|

||||

//

|

||||

// If any existing requirements may be removed, the caller should call Cleanup

|

||||

// after all edits are complete.

|

||||

func (f *File) SetRequire(req []*Require) {

|

||||

type elem struct {

|

||||

version string

|

||||

indirect bool

|

||||

}

|

||||

need := make(map[string]elem)

|

||||

need := make(map[string]string)

|

||||

indirect := make(map[string]bool)

|

||||

for _, r := range req {

|

||||

if prev, dup := need[r.Mod.Path]; dup && prev.version != r.Mod.Version {

|

||||

panic(fmt.Errorf("SetRequire called with conflicting versions for path %s (%s and %s)", r.Mod.Path, prev.version, r.Mod.Version))

|

||||

}

|

||||

need[r.Mod.Path] = elem{r.Mod.Version, r.Indirect}

|

||||

need[r.Mod.Path] = r.Mod.Version

|

||||

indirect[r.Mod.Path] = r.Indirect

|

||||

}

|

||||

|

||||

// Update or delete the existing Require entries to preserve

|

||||

// only the first for each module path in req.

|

||||

for _, r := range f.Require {

|

||||

e, ok := need[r.Mod.Path]

|

||||

if ok {

|

||||

r.setVersion(e.version)

|

||||

r.setIndirect(e.indirect)

|

||||

if v, ok := need[r.Mod.Path]; ok {

|

||||

r.Mod.Version = v

|

||||

r.Indirect = indirect[r.Mod.Path]

|

||||

} else {

|

||||

r.markRemoved()

|

||||

*r = Require{}

|

||||

}

|

||||

delete(need, r.Mod.Path)

|

||||

}

|

||||

|

||||

// Add new entries in the last block of the file for any paths that weren't

|

||||

// already present.

|

||||

//

|

||||

// This step is nondeterministic, but the final result will be deterministic

|

||||

// because we will sort the block.

|

||||

for path, e := range need {

|

||||

f.AddNewRequire(path, e.version, e.indirect)

|

||||

}

|

||||

|

||||

f.SortBlocks()

|

||||

}

|

||||

|

||||

// SetRequireSeparateIndirect updates the requirements of f to contain the given

|

||||

// requirements. Comment contents (except for 'indirect' markings) are retained

|

||||

// from the first existing requirement for each module path. Like SetRequire,

|

||||

// SetRequireSeparateIndirect adds requirements for new paths in req,

|

||||

// updates the version and "// indirect" comment on existing requirements,

|

||||

// and deletes requirements on paths not in req. Existing duplicate requirements

|

||||

// are deleted.

|

||||

//

|

||||

// As its name suggests, SetRequireSeparateIndirect puts direct and indirect

|

||||

// requirements into two separate blocks, one containing only direct

|

||||

// requirements, and the other containing only indirect requirements.

|

||||

// SetRequireSeparateIndirect may move requirements between these two blocks

|

||||

// when their indirect markings change. However, SetRequireSeparateIndirect

|

||||

// won't move requirements from other blocks, especially blocks with comments.

|

||||

//

|

||||

// If the file initially has one uncommented block of requirements,

|

||||

// SetRequireSeparateIndirect will split it into a direct-only and indirect-only

|

||||

// block. This aids in the transition to separate blocks.

|

||||

func (f *File) SetRequireSeparateIndirect(req []*Require) {

|

||||

// hasComments returns whether a line or block has comments

|

||||

// other than "indirect".

|

||||

hasComments := func(c Comments) bool {

|

||||

return len(c.Before) > 0 || len(c.After) > 0 || len(c.Suffix) > 1 ||

|

||||

(len(c.Suffix) == 1 &&

|

||||

strings.TrimSpace(strings.TrimPrefix(c.Suffix[0].Token, string(slashSlash))) != "indirect")

|

||||

}

|

||||

|

||||

// moveReq adds r to block. If r was in another block, moveReq deletes

|

||||

// it from that block and transfers its comments.

|

||||

moveReq := func(r *Require, block *LineBlock) {

|

||||

var line *Line

|

||||

if r.Syntax == nil {

|

||||

line = &Line{Token: []string{AutoQuote(r.Mod.Path), r.Mod.Version}}

|

||||

r.Syntax = line

|

||||

if r.Indirect {

|

||||

r.setIndirect(true)

|

||||

}

|

||||

} else {

|

||||

line = new(Line)

|

||||

*line = *r.Syntax

|

||||

if !line.InBlock && len(line.Token) > 0 && line.Token[0] == "require" {

|

||||

line.Token = line.Token[1:]

|

||||

}

|

||||

r.Syntax.Token = nil // Cleanup will delete the old line.

|

||||

r.Syntax = line

|

||||

}

|

||||

line.InBlock = true

|

||||

block.Line = append(block.Line, line)

|

||||

}

|

||||

|

||||

// Examine existing require lines and blocks.

|

||||

var (

|

||||

// We may insert new requirements into the last uncommented

|

||||

// direct-only and indirect-only blocks. We may also move requirements

|

||||

// to the opposite block if their indirect markings change.

|

||||

lastDirectIndex = -1

|

||||

lastIndirectIndex = -1

|

||||

|

||||

// If there are no direct-only or indirect-only blocks, a new block may

|

||||

// be inserted after the last require line or block.

|

||||

lastRequireIndex = -1

|

||||

|

||||

// If there's only one require line or block, and it's uncommented,

|

||||

// we'll move its requirements to the direct-only or indirect-only blocks.

|

||||

requireLineOrBlockCount = 0

|

||||

|

||||

// Track the block each requirement belongs to (if any) so we can

|

||||

// move them later.

|

||||

lineToBlock = make(map[*Line]*LineBlock)

|

||||

)

|

||||

for i, stmt := range f.Syntax.Stmt {

|

||||

var newStmts []Expr

|

||||

for _, stmt := range f.Syntax.Stmt {

|

||||

switch stmt := stmt.(type) {

|

||||

case *Line:

|

||||

if len(stmt.Token) == 0 || stmt.Token[0] != "require" {

|

||||

continue

|

||||

case *LineBlock:

|

||||

if len(stmt.Token) > 0 && stmt.Token[0] == "require" {

|

||||

var newLines []*Line

|

||||

for _, line := range stmt.Line {

|

||||

if p, err := parseString(&line.Token[0]); err == nil && need[p] != "" {

|

||||

if len(line.Comments.Before) == 1 && len(line.Comments.Before[0].Token) == 0 {

|

||||

line.Comments.Before = line.Comments.Before[:0]

|

||||

}

|

||||

line.Token[1] = need[p]

|

||||

delete(need, p)

|

||||

setIndirect(line, indirect[p])

|

||||

newLines = append(newLines, line)

|

||||

}

|

||||

}

|

||||

if len(newLines) == 0 {

|

||||

continue // drop stmt

|

||||

}

|

||||

stmt.Line = newLines

|

||||

}

|

||||

lastRequireIndex = i

|

||||

requireLineOrBlockCount++

|

||||

if !hasComments(stmt.Comments) {

|

||||

if isIndirect(stmt) {

|

||||

lastIndirectIndex = i

|

||||

|

||||

case *Line:

|

||||

if len(stmt.Token) > 0 && stmt.Token[0] == "require" {

|

||||

if p, err := parseString(&stmt.Token[1]); err == nil && need[p] != "" {

|

||||

stmt.Token[2] = need[p]

|

||||

delete(need, p)

|

||||

setIndirect(stmt, indirect[p])

|

||||

} else {

|

||||

lastDirectIndex = i

|

||||

continue // drop stmt

|

||||

}

|

||||

}

|

||||

|

||||

case *LineBlock:

|

||||

if len(stmt.Token) == 0 || stmt.Token[0] != "require" {

|

||||

continue

|

||||

}

|

||||

lastRequireIndex = i

|

||||

requireLineOrBlockCount++

|

||||

allDirect := len(stmt.Line) > 0 && !hasComments(stmt.Comments)

|

||||

allIndirect := len(stmt.Line) > 0 && !hasComments(stmt.Comments)

|

||||

for _, line := range stmt.Line {

|

||||

lineToBlock[line] = stmt

|

||||

if hasComments(line.Comments) {

|

||||

allDirect = false

|

||||

allIndirect = false

|

||||

} else if isIndirect(line) {

|

||||

allDirect = false

|

||||

} else {

|

||||

allIndirect = false

|

||||

}

|

||||

}

|

||||

if allDirect {

|

||||

lastDirectIndex = i

|

||||

}

|

||||

if allIndirect {

|

||||

lastIndirectIndex = i

|

||||

}

|

||||

}

|

||||

newStmts = append(newStmts, stmt)

|

||||

}

|

||||

f.Syntax.Stmt = newStmts

|

||||

|

||||

oneFlatUncommentedBlock := requireLineOrBlockCount == 1 &&

|

||||

!hasComments(*f.Syntax.Stmt[lastRequireIndex].Comment())

|

||||

|

||||

// Create direct and indirect blocks if needed. Convert lines into blocks

|

||||

// if needed. If we end up with an empty block or a one-line block,

|

||||

// Cleanup will delete it or convert it to a line later.

|

||||

insertBlock := func(i int) *LineBlock {

|

||||

block := &LineBlock{Token: []string{"require"}}

|

||||

f.Syntax.Stmt = append(f.Syntax.Stmt, nil)

|

||||

copy(f.Syntax.Stmt[i+1:], f.Syntax.Stmt[i:])

|

||||

f.Syntax.Stmt[i] = block

|

||||

return block

|

||||

for path, vers := range need {

|

||||

f.AddNewRequire(path, vers, indirect[path])

|

||||

}

|

||||

|

||||

ensureBlock := func(i int) *LineBlock {

|

||||

switch stmt := f.Syntax.Stmt[i].(type) {

|

||||

case *LineBlock:

|

||||

return stmt

|

||||

case *Line:

|

||||

block := &LineBlock{

|

||||

Token: []string{"require"},

|

||||

Line: []*Line{stmt},

|

||||

}

|

||||

stmt.Token = stmt.Token[1:] // remove "require"

|

||||

stmt.InBlock = true

|

||||

f.Syntax.Stmt[i] = block

|

||||

return block

|

||||

default:

|

||||

panic(fmt.Sprintf("unexpected statement: %v", stmt))

|

||||

}

|

||||

}

|

||||

|

||||

var lastDirectBlock *LineBlock

|

||||

if lastDirectIndex < 0 {

|

||||

if lastIndirectIndex >= 0 {

|

||||

lastDirectIndex = lastIndirectIndex

|

||||

lastIndirectIndex++

|

||||

} else if lastRequireIndex >= 0 {

|

||||

lastDirectIndex = lastRequireIndex + 1

|

||||

} else {

|

||||

lastDirectIndex = len(f.Syntax.Stmt)

|

||||

}

|

||||

lastDirectBlock = insertBlock(lastDirectIndex)

|

||||

} else {

|

||||

lastDirectBlock = ensureBlock(lastDirectIndex)

|

||||

}

|

||||

|

||||

var lastIndirectBlock *LineBlock

|

||||

if lastIndirectIndex < 0 {

|

||||

lastIndirectIndex = lastDirectIndex + 1

|

||||

lastIndirectBlock = insertBlock(lastIndirectIndex)

|

||||

} else {

|

||||

lastIndirectBlock = ensureBlock(lastIndirectIndex)

|

||||

}

|

||||

|

||||

// Delete requirements we don't want anymore.

|

||||

// Update versions and indirect comments on requirements we want to keep.

|

||||

// If a requirement is in last{Direct,Indirect}Block with the wrong

|

||||

// indirect marking after this, or if the requirement is in an single

|

||||

// uncommented mixed block (oneFlatUncommentedBlock), move it to the

|

||||

// correct block.

|

||||

//

|

||||

// Some blocks may be empty after this. Cleanup will remove them.

|

||||

need := make(map[string]*Require)

|

||||

for _, r := range req {

|

||||

need[r.Mod.Path] = r

|

||||

}

|

||||

have := make(map[string]*Require)

|

||||

for _, r := range f.Require {

|

||||

path := r.Mod.Path

|

||||

if need[path] == nil || have[path] != nil {

|

||||

// Requirement not needed, or duplicate requirement. Delete.

|

||||

r.markRemoved()

|

||||

continue

|

||||

}

|

||||

have[r.Mod.Path] = r

|

||||

r.setVersion(need[path].Mod.Version)

|

||||

r.setIndirect(need[path].Indirect)

|

||||

if need[path].Indirect &&

|

||||

(oneFlatUncommentedBlock || lineToBlock[r.Syntax] == lastDirectBlock) {

|

||||

moveReq(r, lastIndirectBlock)

|

||||

} else if !need[path].Indirect &&

|

||||

(oneFlatUncommentedBlock || lineToBlock[r.Syntax] == lastIndirectBlock) {

|

||||

moveReq(r, lastDirectBlock)

|

||||

}

|

||||

}

|

||||

|

||||

// Add new requirements.

|

||||

for path, r := range need {

|

||||

if have[path] == nil {

|

||||

if r.Indirect {

|

||||

moveReq(r, lastIndirectBlock)

|

||||

} else {

|

||||

moveReq(r, lastDirectBlock)

|

||||

}

|

||||

f.Require = append(f.Require, r)

|

||||

}

|

||||

}

|

||||

|

||||

f.SortBlocks()

|

||||

}

|

||||

|

||||

func (f *File) DropRequire(path string) error {

|

||||

for _, r := range f.Require {

|

||||

if r.Mod.Path == path {

|

||||

r.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(r.Syntax)

|

||||

*r = Require{}

|

||||

}

|

||||

}

|

||||

|

|

@ -1204,7 +916,7 @@ func (f *File) AddExclude(path, vers string) error {

|

|||

func (f *File) DropExclude(path, vers string) error {

|

||||

for _, x := range f.Exclude {

|

||||

if x.Mod.Path == path && x.Mod.Version == vers {

|

||||

x.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(x.Syntax)

|

||||

*x = Exclude{}

|

||||

}

|

||||

}

|

||||

|

|

@ -1235,7 +947,7 @@ func (f *File) AddReplace(oldPath, oldVers, newPath, newVers string) error {

|

|||

continue

|

||||

}

|

||||

// Already added; delete other replacements for same.

|

||||

r.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(r.Syntax)

|

||||

*r = Replace{}

|

||||

}

|

||||

if r.Old.Path == oldPath {

|

||||

|

|

@ -1251,7 +963,7 @@ func (f *File) AddReplace(oldPath, oldVers, newPath, newVers string) error {

|

|||

func (f *File) DropReplace(oldPath, oldVers string) error {

|

||||

for _, r := range f.Replace {

|

||||

if r.Old.Path == oldPath && r.Old.Version == oldVers {

|

||||

r.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(r.Syntax)

|

||||

*r = Replace{}

|

||||

}

|

||||

}

|

||||

|

|

@ -1292,7 +1004,7 @@ func (f *File) AddRetract(vi VersionInterval, rationale string) error {

|

|||

func (f *File) DropRetract(vi VersionInterval) error {

|

||||

for _, r := range f.Retract {

|

||||

if r.VersionInterval == vi {

|

||||

r.Syntax.markRemoved()

|

||||

f.Syntax.removeLine(r.Syntax)

|

||||

*r = Retract{}

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -192,21 +192,6 @@ func (e *InvalidVersionError) Error() string {

|

|||

|

||||

func (e *InvalidVersionError) Unwrap() error { return e.Err }

|

||||

|

||||

// An InvalidPathError indicates a module, import, or file path doesn't

|

||||

// satisfy all naming constraints. See CheckPath, CheckImportPath,

|

||||

// and CheckFilePath for specific restrictions.

|

||||

type InvalidPathError struct {

|

||||

Kind string // "module", "import", or "file"

|

||||

Path string

|

||||

Err error

|

||||

}

|

||||

|

||||

func (e *InvalidPathError) Error() string {

|

||||

return fmt.Sprintf("malformed %s path %q: %v", e.Kind, e.Path, e.Err)

|

||||

}

|

||||

|

||||

func (e *InvalidPathError) Unwrap() error { return e.Err }

|

||||

|

||||

// Check checks that a given module path, version pair is valid.

|

||||

// In addition to the path being a valid module path

|

||||

// and the version being a valid semantic version,

|

||||

|

|

@ -311,36 +296,30 @@ func fileNameOK(r rune) bool {

|

|||

// this second requirement is replaced by a requirement that the path

|

||||